0x0000000 accounts are so last season. Now it’s all about vanity tx prefixes. In this blog post, we will learn how to impress your Etherscan followers and fry a few CPU cores along the way.

Vanity txs

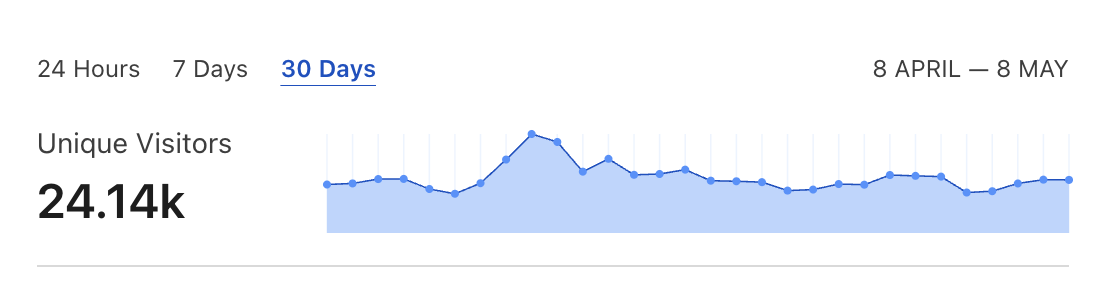

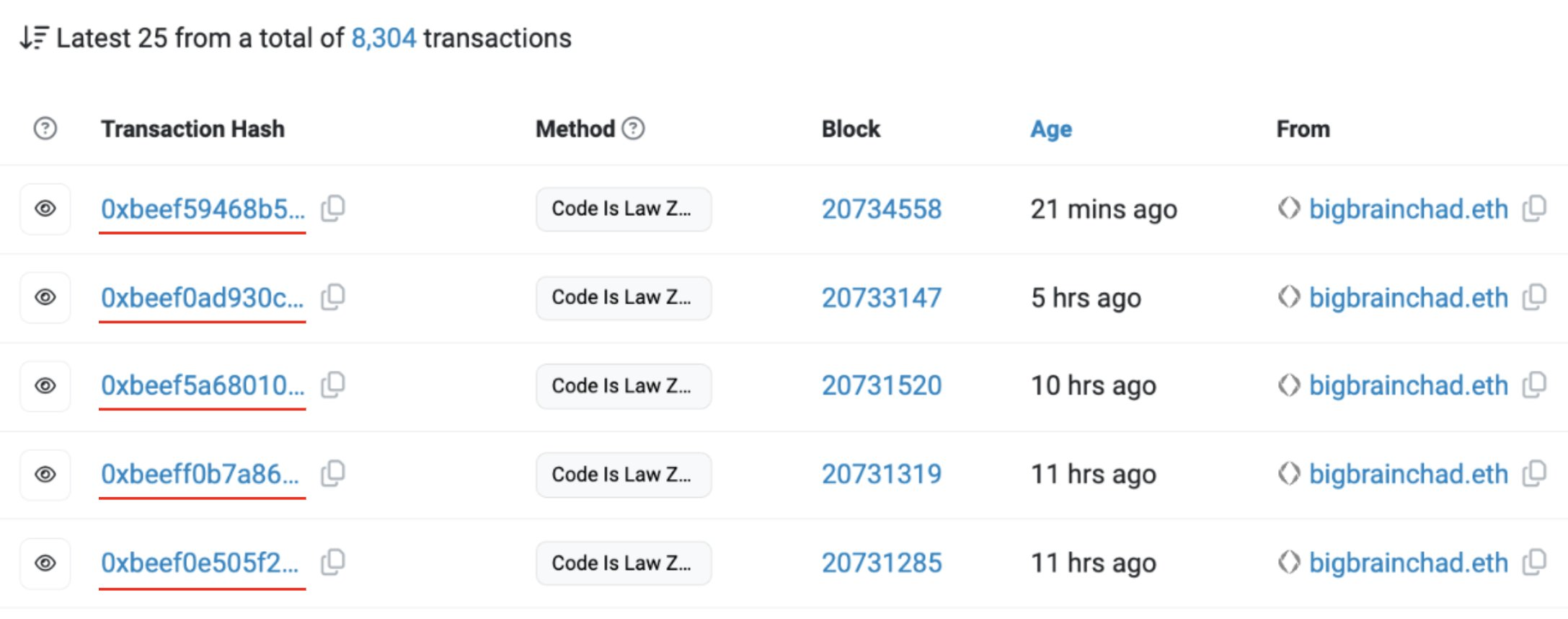

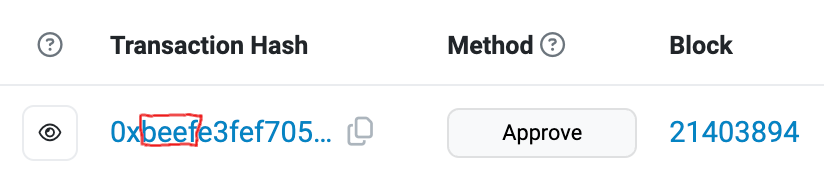

It all started with this X thread. Apparently, there's a bot, bigbrainchad.eth, that extracts MEV in style. All of its tx hashes start with the 0xbeef prefix:

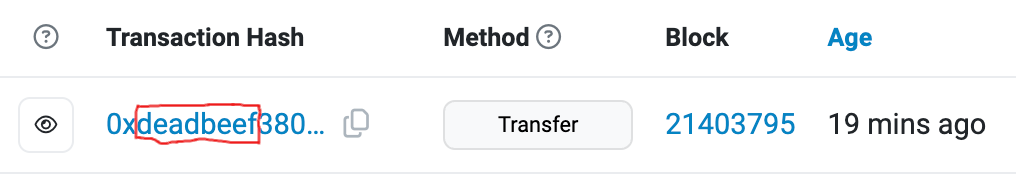

I immediately got jealous and wanted to send a few cool-looking txs myself. Hence the birth of the alloy-deadbeef lib, and soon my vanity tx landed on mainnet.

Read on to learn about the lib's implementation details and the performance overhead of sending such fancy txs.

How to brute-force an ETH tx prefix?

You can use alloy-deadbeef as an alloy filler:

```rust
let provider = ProviderBuilder::new()
    .filler(DeadbeefFiller::new(
        "beef".to_string(),
        wallet.clone(),
    )?)
    .wallet(wallet)
    .on_http(endpoint().parse()?);
```

All the txs sent from this provider will land with a 0xbeef prefix.

Or generate a tx object that will land on-chain with the desired prefix (as long as you don't change any of its data!):

```rust
let tx = TransactionRequest {
    from: Some(account),
    to: Some(account.into()),
    ..Default::default()
};

let deadbeef = DeadbeefFiller::new(
    "beef".to_string(),
    wallet,
)?;

// Brute-forces gas_limit or value until the signed tx hash matches the prefix.
let prefixed_tx = deadbeef.prefixed_tx(tx).await?;
```

The lib brute-forces a matching hash prefix by incrementing the transaction's gas_limit or value attribute. More details on that later.

We're working with 16^n complexity here, where n is the prefix length. Even Rust takes a while to crunch these numbers. To optimize performance, the lib maxes out parallelism by spawning a tokio task for each available CPU. The core loop looks like this:

```rust
let mut buf = Vec::with_capacity(200);

let result: Option<U256> = loop {
    select! {
        // `biased` polls branches in order, so the cancellation check runs first.
        biased;

        _ = done.recv() => {
            // Another task found a match: stop searching.
            break None;
        }

        _ = futures::future::ready(()) => {
            let tx = tx.clone();
            let next_value = tx.value.unwrap_or_default() + U256::from(value);
            let tx_hash = tx_hash_for_value(tx, &wallet, next_value, &mut buf).await?;
            value += 1;

            let hash_str = format!("{:x}", &tx_hash);
            if hash_str.starts_with(&prefix) {
                let iters = value - starting_input;
                // Rough total, assuming every task ran about as many iterations.
                let total_iters = max_cores * iters;
                info!("Found matching tx hash: {tx_hash} after ~{total_iters} iterations");
                break Some(next_value);
            }
        }
    }
};
```

```rust
async fn tx_hash_for_value(
    mut tx: TransactionRequest,
    wallet: &EthereumWallet,
    value: U256,
    buf: &mut Vec<u8>,
) -> Result<FixedBytes<32>> {
    // Reuse the same buffer across iterations to avoid reallocating.
    buf.clear();
    tx.value = Some(value);
    // Sign the tx, then hash its EIP-2718 encoding.
    let tx_envelope = tx.build(wallet).await?;
    tx_envelope.encode_2718(buf);
    let tx_hash = keccak256(&buf);
    Ok(tx_hash)
}
```

A tx hash is produced by running keccak256 on the signed, EIP-2718-encoded transaction, which is why we need to provide an EthereumWallet object. We use the select! macro to stop the remaining loops once a matching prefix is found.
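The loop above formats every tx hash into a hex String with format! before calling starts_with. One possible optimization is to compare the prefix nibble by nibble, directly against the raw hash bytes. This is a sketch only: has_hex_prefix is a hypothetical helper, not part of alloy-deadbeef, and it assumes a lowercase hex prefix.

```rust
/// Returns true if the lowercase hex form of `hash` starts with `prefix`,
/// without allocating a String. Non-hex characters in `prefix` never match.
fn has_hex_prefix(hash: &[u8], prefix: &str) -> bool {
    let p = prefix.as_bytes();
    // A prefix longer than the full hex string can never match.
    if p.len() > hash.len() * 2 {
        return false;
    }
    p.iter().enumerate().all(|(i, &c)| {
        let byte = hash[i / 2];
        // Even positions are the high nibble, odd positions the low nibble.
        let nibble = if i % 2 == 0 { byte >> 4 } else { byte & 0x0f };
        let expected = match c {
            b'0'..=b'9' => c - b'0',
            b'a'..=b'f' => c - b'a' + 10,
            _ => return false,
        };
        nibble == expected
    })
}
```

Whether this helps measurably is an open question, because each iteration also signs a transaction and that probably dominates the cost. It would need benchmarking.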

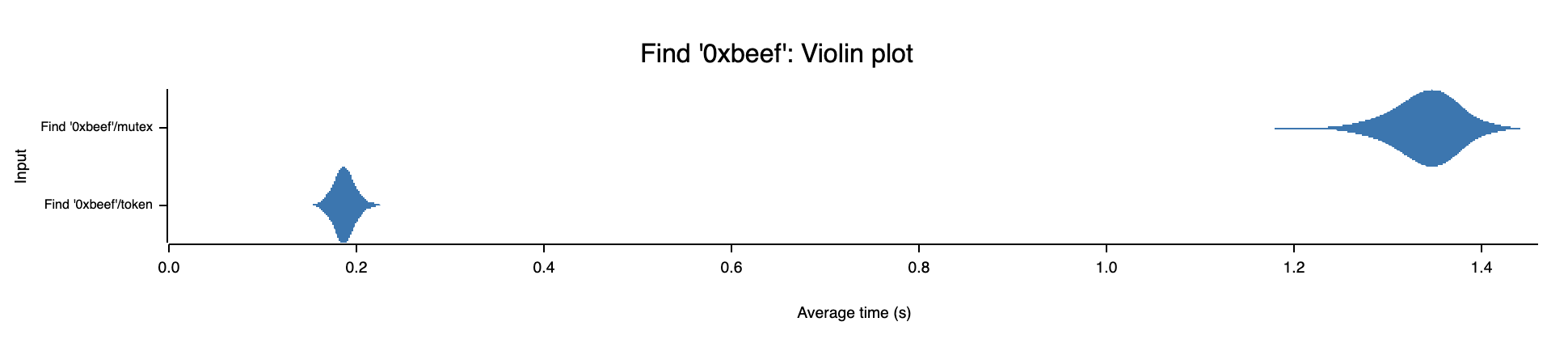

Initially, I used an Arc<Mutex<bool>> like this:

```rust
let is_done = done.lock().await;
if *is_done {
    break None;
}
drop(is_done);
```

But multiple threads competing for the mutex lock on every iteration wrecked the performance: it was ~6x slower than tokio's CancellationToken.

In the end, I switched to tokio::sync::broadcast::channel. Unlike CancellationToken, which lives in the separate tokio-util crate, it doesn't require an extra dependency and is just as fast.
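The same partition-and-cancel pattern can be shown without any dependencies. The sketch below swaps in std::thread::scope for tokio tasks and a shared AtomicBool stop flag for the broadcast channel. Unlike a Mutex<bool>, the flag can be read on every iteration without taking a lock. toy_hash and parallel_search are hypothetical names, and DefaultHasher stands in for keccak256 over a signed tx, so this is not how alloy-deadbeef computes hashes.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};
use std::sync::atomic::{AtomicBool, Ordering};
use std::thread;

/// Hypothetical stand-in for keccak256 over a signed tx: hashes a fixed
/// payload together with the iterated value. Not cryptographic.
fn toy_hash(payload: &str, value: u64) -> u64 {
    let mut h = DefaultHasher::new();
    payload.hash(&mut h);
    value.hash(&mut h);
    h.finish()
}

/// Splits [0, workers * chunk) into one contiguous chunk per worker thread and
/// stops every worker once one finds a hash whose hex form starts with `prefix`.
fn parallel_search(payload: &str, prefix: &str, workers: u64, chunk: u64) -> Option<u64> {
    let done = AtomicBool::new(false);
    let done = &done;
    thread::scope(|s| {
        let handles: Vec<_> = (0..workers)
            .map(|i| {
                s.spawn(move || {
                    for value in i * chunk..(i + 1) * chunk {
                        // Lock-free read of the shared stop flag.
                        if done.load(Ordering::Relaxed) {
                            return None;
                        }
                        let hex = format!("{:016x}", toy_hash(payload, value));
                        if hex.starts_with(prefix) {
                            // Tell the other workers to stop.
                            done.store(true, Ordering::Relaxed);
                            return Some(value);
                        }
                    }
                    None
                })
            })
            .collect();
        // Wait for every worker; if several matched concurrently,
        // keep the match from the lowest-indexed worker.
        let results: Vec<Option<u64>> = handles.into_iter().map(|h| h.join().unwrap()).collect();
        results.into_iter().flatten().next()
    })
}
```

Relaxed ordering is enough here because the flag only signals "stop". The matching value itself is passed back through the thread's join handle.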

Another interesting discovery was that tokio::time::sleep(Duration::from_millis(0)) has a delay of ~1ms, since tokio timers operate at millisecond granularity. It killed the performance until I replaced it with futures::future::ready(()), which completes immediately.

Benchmarking tx prefix generation

I wanted to include a benchmark showing how long it takes on average to find a matching prefix, but it turned out to be very random. 0xbeef can take anywhere between 200ms and 900ms, depending on the rest of the transaction attributes. Instead, I've AI-ed my way to calculating how many iterations are needed to produce the desired prefix with 99% probability:

| Prefix length | Probability per hash | Iterations for ~99% certainty |
|---|---|---|
| 1 | 1/16 | ~72 |
| 2 | 1/256 | ~1,180 |
| 3 | 1/4,096 | ~18,900 |
| 4 | 1/65,536 | ~302,000 |
| 5 | 1/1,048,576 | ~4,830,000 |
| 6 | 1/16,777,216 | ~77,300,000 |
| 7 | 1/268,435,456 | ~1,240,000,000 |
| 8 | 1/4,294,967,296 | ~19,800,000,000 |
| 9 | 1/68,719,476,736 | ~316,000,000,000 |
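The table follows from a standard geometric-probability argument. Each hash matches an n-character prefix with probability p = 16^-n. The number of attempts k needed to succeed with probability c must therefore satisfy 1 - (1 - p)^k >= c, which gives k = ln(1 - c) / ln(1 - p). Below is a minimal sketch of that formula; the function name is hypothetical, and it models every hash as uniformly random.

```rust
/// Attempts needed to find an `n`-hex-character prefix with probability at
/// least `certainty`, modelling each hash as uniformly random.
fn iterations_for_certainty(n: u32, certainty: f64) -> u64 {
    // Chance that a single hash matches: 16^-n.
    let p = 16f64.powi(-(n as i32));
    // Solve 1 - (1 - p)^k >= certainty for k; ln_1p(-p) computes ln(1 - p)
    // accurately even when p is tiny.
    ((1.0 - certainty).ln() / (-p).ln_1p()).ceil() as u64
}
```

For 1 and 2 characters, this yields 72 and 1,177, which the table rounds to ~72 and ~1,180.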

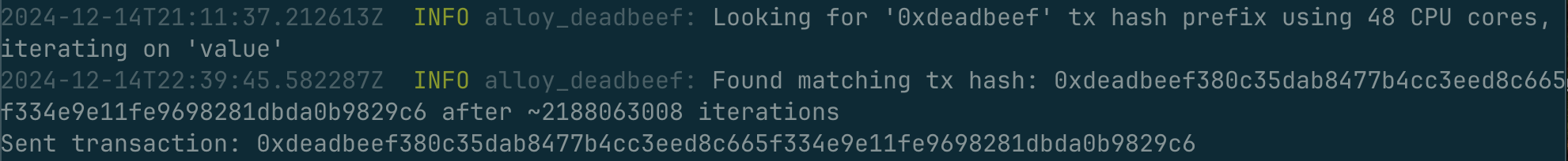

An interesting takeaway is that a 4-character prefix needs ~302,000 iterations for 99% certainty. Increasing the gas limit by up to 300,000 should not cause any issues with landing the transaction on-chain, because only the gas actually used is paid for. The current implementation of alloy-deadbeef therefore iterates on gas_limit instead of value for prefixes of up to 4 characters. This means we can also flex with non-payable transactions.

Alternatively, you can force a specific iteration mode like this:

```rust
let mut deadbeef = DeadbeefFiller::new("beef".to_string(), wallet)?;
deadbeef.set_iteration_mode(IterationMode::Value);
```

Let's now analyze the maximum processing time. Assume we're using a computer with 4 CPU cores and searching for a 4-character prefix. To reach 99% certainty, each loop has to process at most 302,000 / 4 = 75,500 iterations, so the loops start iterating from the following values:

- Loop 0: 0
- Loop 1: 75,500
- Loop 2: 151,000
- Loop 3: 226,500
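The offsets are just multiples of the per-loop chunk size. Here is a minimal sketch of that arithmetic; start_offsets is a hypothetical helper, not from alloy-deadbeef. It rounds the chunk size up so that, when the total doesn't divide evenly, the chunks still cover every iteration.

```rust
/// Starting iteration value for each of `workers` loops when `total`
/// iterations are split into equal contiguous chunks. The chunk size is
/// rounded up so that the chunks together cover all `total` iterations.
fn start_offsets(total: u64, workers: u64) -> Vec<u64> {
    let chunk = total.div_ceil(workers);
    (0..workers).map(|i| i * chunk).collect()
}
```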

The table below shows the maximum processing time on my MBP M2 and a beefed-up 48-vCPU Hetzner VPS:

| Prefix length | MBP M2 (12 CPUs) | Hetzner VPS (48 vCPUs) |
|---|---|---|
| 1 | 547.58µs | 397.69µs |
| 2 | 5.81ms | 3.18ms |
| 3 | 123.86ms | 45.38ms |
| 4 | 1.82s | 755.83ms |
| 5 | 29.62s | 12.08s |
| 6 | ~8 minutes* | ~3 minutes |
| 7 | ~128 minutes* | ~48 minutes* |
| 8 | ~34 hours* | ~13 hours* |
| 9 | ~23 days* | ~8 days* |

\* extrapolated
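The extrapolated rows assume that each extra hex character multiplies the work by 16, so t(n+1) = 16 · t(n). Below is a sketch of that arithmetic. The function name is hypothetical, and the input is the measured 5-character MBP time of 29.62s.

```rust
/// Extrapolates a time measured for `from_len` prefix characters to `to_len`
/// characters, assuming each extra hex character multiplies the work by 16.
fn extrapolate_secs(measured_secs: f64, from_len: u32, to_len: u32) -> f64 {
    let extra_chars = to_len as i32 - from_len as i32;
    measured_secs * 16f64.powi(extra_chars)
}
```

Starting from 29.62s, this gives about 7.9 minutes for 6 characters and about 22.5 days for 9 characters. These match the table's ~8 minutes and ~23 days.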

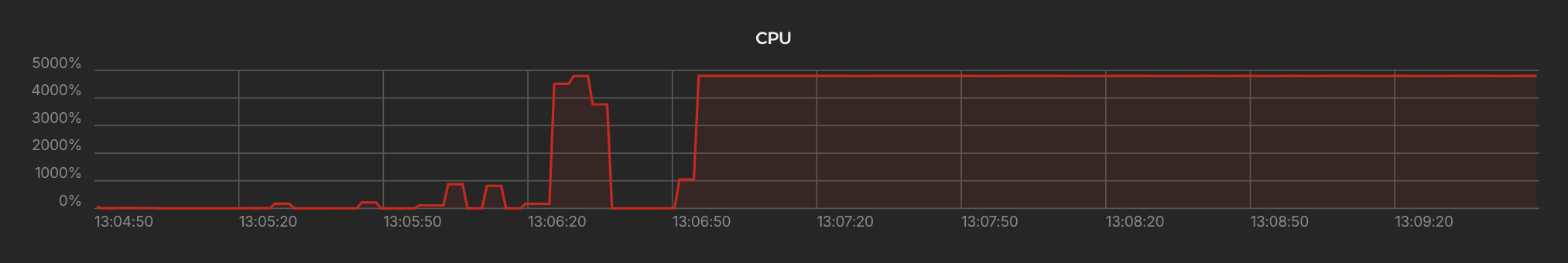

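0xdeadbeef

You can see that each additional prefix character increases processing time by ~16x, just as we've calculated. But these are the worst-case (99% certainty) numbers. On average, a match takes about 16^n iterations, roughly 4.6x fewer than the 99% figure.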

My 0xdeadbeef tx in practice took ~1.5 hours and slightly over 2 billion iterations to generate. According to my AI friends, the probability of finding a match within that many iterations was ~37%.
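The ~37% figure can be checked with the same geometric model. The chance of finding a match within k attempts is 1 - (1 - p)^k, with p = 16^-8 for the 8-character deadbeef prefix. A sketch follows; success_probability is a hypothetical name, and the model treats every hash as uniformly random.

```rust
/// Probability of finding an `n`-hex-character prefix within `k` attempts,
/// modelling each hash as uniformly random.
fn success_probability(n: u32, k: u64) -> f64 {
    let p = 16f64.powi(-(n as i32));
    // 1 - (1 - p)^k, evaluated as -(exp(k * ln(1 - p)) - 1) to stay accurate for tiny p.
    -((k as f64) * (-p).ln_1p()).exp_m1()
}
```

With k = 2,000,000,000, this comes out at about 0.372. With n = 4 and k = 302,000, it comes out at about 0.99, consistent with the probability table.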

Summary

Our research shows that prefixes of up to 4 characters can be generated efficiently. It could be a simple way to intimidate your MEV competition and assert alpha bot dominance. alloy-deadbeef was a fun weekend project. I'd love to learn how to optimize it further, so please send over any suggestions/PRs.