Rails 7 introduces ActiveRecord load_async method that runs SQL queries asynchronously in the background thread. This seemingly simple change of just adding a single new method that takes no arguments has profound implications for database layer interactions. In this tutorial, we’ll deep dive into the intricacies of this new load async API. We’ll discuss lazy-loaded queries, Ruby threading model, blocking IO, database pool vs. max connections limit, and performance impact of concurrent database clients. I’ll also try to suggest scenarios where introducing async SQL queries could have the most benefit without sacrificing the stability of your Rails app.

We have a lot of ground to cover, so let’s get started!

[Update] After the publication, the author of the initial load_async implementation (casperisfine on GitHub) responded on Reddit with a few technical corrections. The blog post has been updated accordingly.

ActiveRecord load_async 101

It is common for Rails controller action to trigger multiple queries for different models:

class PagesController < ApplicationController

def home

@users = User.slow

@comments = Comment.slow

@posts = Post.slow

end

endand later display data in the view like that:

<ul>

<%- @users.each do -%>

<li><%= user.email %></li>

<%- end -%>

</ul>We’re using a slow scope method with the following implementation:

scope :slow, -> {

where("SELECT true FROM pg_sleep(1)").limit(10)

}The slow scope returns ten rows from a table after an artificial one-second delay. This approach will allow us to deterministically measure the performance impact of adding load_async without populating a database with thousands of records.

Executing this controller action should print out similar server logs:

User Load (1006.0ms) SELECT "users".* FROM "users" ...

Comment Load (1003.2ms) SELECT "comments".* FROM "comments" ...

Post Load (1041.2ms) SELECT "posts".* FROM "posts" ...

Completed 200 OK in 3204ms (Views: 51.2ms ...)We can see that queries were executed sequentially, resulting in a response time of over 3 seconds. Most of the time was spent in the ActiveRecord layer. Let’s now see how using load_async can speed things up.

Optimizing response time with asynchronous SQL

We can rewrite our controller to leverage the new API:

class PagesController < ApplicationController

def home

@users = User.slow.load_async

@comments = Comment.slow.load_async

@posts = Post.slow.load_async

end

endMake sure to enable asynchronous execution in your environment config file:

config/environments/development.rb

config.active_record.async_query_executor = :global_thread_pooland now we should get the following logs:

User Load (1008.7ms) ...

ASYNC Comment Load (62.1ms) (db time 1013.8ms) ...

ASYNC Post Load (0.0ms) (db time 1010.5ms) ...

Completed 200 OK in 1085ms (Views: 64.4ms ...)It looks like we’re down to one second response time, ~3x improvement. Does it mean that Rails does scale? Just update to version 7 and sprinkle load_async all over the codebase!

Not really… Overusing the new load_async API to magically improve response times is likely to crash your app and slow the database to a crawl.

To understand why we’ll need to take a step back and first recap Ruby threads and ActiveRecord basics. But you’ve been warned that this is a deep dive…

You can also skip the basics and go straight to the section describing load_async by clicking here.

Ruby threads in a nutshell

This blog post is by no means trying to be a comprehensive introduction to the Ruby threading model. I’ll focus on the basics relevant to understanding the inner workings of load_async.

Ruby supports parallel execution for so-called blocking I/O. Any operation that does not directly use the CPU cycles from its thread but instead delegates the work to external processes is a blocking I/O. Typical examples in the context of Ruby on Rails web apps are SQL database queries, reading/writing to files, or HTTP requests. On the contrary, you cannot speed up, e.g., generation of md5 hashes using Ruby multithreading because it is a CPU-bound operation. I’ve covered Ruby threading, GIL, and blocking I/O in much more depth in my other blog post.

Here’s a basic example of doing a blocking IO inside a separate thread:

t1 = Thread.new { sleep 2 }

sleep 2

t1.joinIn this case, sleep is our work, our blocking IO. If you copy-paste the above snippet into the Ruby IRB, it will freeze for 2 seconds. It means that we managed to do 4 seconds of sleeping in 2 seconds because our work was parallelized using a separate thread. While sleeping might not seem like the most useful thing to do, you could replace sleep with an SQL or HTTP call and get a similar result.

Let’s see another example:

t1 = Thread.new { sleep 3 }

sleep 2

t1.joinRunning it would freeze IRB for 3 seconds. We tried to join our t1 thread into the main thread before it completed its execution. That’s why it took one more second of the main thread execution time.

So, the key takeaways here are that blocking IO can be parallelized using a separate Ruby thread, and if it is joined into the main thread while still running, it will block until execution completes.

Let’s now move on to the ActiveRecord chunk of our load_async puzzle.

ActiveRecord lazy-loaded queries

To better explain how load_async works, we’ll now discuss ActiveRecord lazy loading behavior. It is critical to understand that running:

@users = User.slowdoes not execute an SQL query by itself but only creates an ActiveRecord_Relation object. The actual SQL is only triggered when necessary. This behavior was introduced in Rails 3.

Lazy loading allows for many cool features like merging scopes and passing ActiveRecord query objects around without running the SQL before the results are needed.

You can try it yourself in the console. First make sure to enable ActiveRecord STDOUT logging:

ActiveRecord::Base.logger = Logger.new(STDOUT)and now run:

@users = User.slowYou should see a similar output after a one-second delay:

User Load (1006.2ms)So it looks like the query DID run. But did not I just tell you that it wouldn’t? In this case, the Ruby IRB is to blame. By default, it prints the result of the last command, effectively executing the query. You can circumvent this behavior by running:

@users = User.slow; niland now the command executes instantly, and you should not see the SQL log anymore. By appending nil as output for IRB to display, we prevented query execution. Only trying to display the contents of @users would trigger the database interaction and take around a second to run.

Alternatively, you can force a query to load eagerly by appending to_a:

@users = User.slow.to_a; nilYou can easily check if relation object has already been loaded by reading its loaded? attribute:

@lazy_users = User.slow; nil

@lazy_users.loaded? # => false

@loaded_users = User.slow.to_a

@loaded_users.loaded? # => trueAsynchronous ActiveRecord queries under the microscope

We’ve now covered the basics necessary to understand load_async. Let’s finally move on to explaining the method itself. First try to trigger asynchronous query in the Rails console:

@users = User.slow.load_asyncAfter a one-second delay result of the query is printed out, and logs show:

User Load (1001.2ms)So how come appending load_async did not trigger our query in the background? Before explaining it, let’s first force asynchronous execution by running:

@users = User.slow.load_async; sleep 2; @usersand now finally we get the following output:

ASYNC User Load (0.0ms) (db time 1003.1ms)We can see the ASYNC prefix and (0.0ms) indicating that the query did not take any of the main thread processing time. 1006.5ms was spent in the background (described as db time). Let’s see how these numbers change when we modify sleep duration:

@users = User.slow.load_async; sleep 0.2; @usersASYNC User Load (807.8ms) (db time 1003.8ms)Our query was joined into the main thread after 200ms, so it took additional 807.8ms to complete execution. Just like the barebone threads we discussed earlier, asynchronous SQL can be joined into the main thread before it completes and, as a result, block it for the remaining of its execution time.

This is a critical distinction. load_async queries are never lazy loaded but work similarly to calling to_a on an ActiveRecord_Relation object in a separate thread. Calling load_async always sets a corresponding relation object’s loaded parameter to true. You can consult the PR implementing load_async for details. It means that load_async are always triggered immediatelly when called and joined into the main thread when their results are necessary.

Now you should understand why load_async sometimes does not schedule queries in the background. There are three cases possible:

- The query was fully executed in the background, we just use the result.

- The query started to be executed in the backgroud, we wait for it to finish.

- The query wasn’t started in the background yet, we execute it in the foreground.

While playing around with load_async API for the first time, I could not always force queries to run asynchronously. Extracting these simple examples helped me grasp what factors decide if the background thread will be triggered or not.

Now we can better understand why we’ve seen the following log output:

User Load (1008.7ms) ...

ASYNC Comment Load (62.1ms) (db time 1013.8ms) ...

ASYNC Post Load (0.0ms) (db time 1010.5ms) ...The results of the User query were displayed on top of the HTML view. So, before the corresponding query was scheduled in the background it moved to the main thread. While it was executing in a blocking way, the Comment and Post queries had time to complete their execution in the background and did not add any more blocking to the main thread. That’s where the ~3x speedup came from.

Now that we have a pretty solid understanding of the inner workings of load_async, let’s discuss how misusing it could blow up your application.

When not to load_async

load_async is a kind of horizontal scaling for our SQL pipeline. Instead of sequentially scheduling queries through a single connection, we’re using threads to execute multiple queries at the same time. You can illustrate the difference by digging into PostgreSQL pg_stat_activity metatable:

select pid,

application_name,

backend_start,

state

from pg_stat_activity

where state = 'active' and

application_name = 'bin/rails';The following SQL displays currently active client connections to your database established by the Rails console process. You can compare the outcome of this query for the following Ruby snippets (increasing the slow scope delay to a few seconds will make it easier to run both commands at the same time):

@users = User.slow

@comments = Comment.slow

@posts = Post.slow

puts @users

puts @comments

puts @postsShows:

pid | application_name | backend_start | state

-----+------------------+-------------------------------+--------

226 | bin/rails | 2022-02-23 00:10:34.900532+00 | activeand running:

@users = User.slow.load_async

@comments = Comment.slow.load_async

@posts = Post.slow.load_async

puts @users

puts @comments

puts @postswould display something similar to:

pid | application_name | backend_start | state

-----+------------------+-------------------------------+--------

226 | bin/rails | 2022-02-23 00:10:34.900532+00 | active

230 | bin/rails | 2022-02-23 00:12:30.44271+00 | active

231 | bin/rails | 2022-02-23 00:12:30.443108+00 | activeThis is how we can empirically check that asynchronous queries spawn more separate database connections.

Balancing database pool, threads and max connections for async queries (╯°□°)╯︵ ┻━┻

Things get a bit more complicated when we want to reason about the impact of running asynchronous SQL in the context of multithreaded processes like Puma server or Sidekiq worker. Rails provide a global pool config that can be defined inside a config/database.yml file. This value determines how many database connections can be spawned per Ruby process. Each Puma or Sidekiq worker is a single process that can initialize a predefined number of threads. A common suggestion is to set pool to the max value of concurrency supported by your application processes. For example, if your Sidekiq worker uses a maximum of 25 threads, then the pool value should be at least 25. Otherwise, your process might not be able to connect to the database because all the connections are busy, and the pool limit does not allow spawning more. As a result, you’ll see the following error:

ActiveRecord::ConnectionTimeoutError

(could not obtain a connection from the pool within 5.000 seconds (waited 5.003 seconds);

all pooled connections were in use):You might be wondering why cannot you set the pool to some arbitrarily high value so that there’s never a shortage of available connections?

The answer is that the database itself also has a global limit on how many concurrent clients it can handle. For example, the Heroku PostgreSQL addon imposes a hardcoded limit of MAX_CONNECTIONS setting per its database plan. AWS RDS gives much more flexibility on configuring the internals of PostgreSQL, and it’s just one of the reasons why I usually recommend migrating the Heroku database to AWS RDS.

I’m describing this setting in more detail because using load_async means that we’re using threads within threads…

Each asynchronous SQL query is a separate thread that needs a new connection. The subthreads are reused from an existing pool. There’s a new config option available:

config.active_record.global_executor_concurrencythat lets you define how many concurrent SQL queries can be executed per process. The math is getting a bit more confusing now so let’s try it with a simple example.

Imagine you’re using a single Performance-L Heroku web dyno running Puma server with recommended settings, i.e., 8 workers (processes) 5 max threads each. Additionally, you’re using a single Sidekiq worker on a Performance-M dyno with max concurrency configured to 10.

Without load_async, you should set the pool value to 10 so that your Sidekiq worker always has an active connection available. The max number of concurrent connections that your database might receive with this config is quick maths:

5 x 8 + 1 x 10 = 50

If you introduce load_async with the default global_executor_concurrency of 4 you’ll get:

8 x 5 + 8 x 4 + 1 x 10 + 1 x 4 = 86

and pool should be configured to 14 to account for possible additional connections from the Sidekiq process.

So you can see that by just enabling the new API, we almost doubled the potential number of maximum concurrent database clients. I hope that your current Heroku plan is ready for that…

But, it also means that each process can schedule max 4 asynchronous queries simultaneously. So if you try to parallelize three SQL queries inside your bottleneck web endpoint, you’ve already exhausted 3 out of 4 connections available for load_async. And that’s just one out of 5 Puma threads (we’re using a limit of 4 async connections per worker). So I’m not sure if this is a perfect config. At the same time, increasing global_executor_concurrency should always be performed carefully because each additional connection uses more database memory and decreases overall database performance.

Honestly, I don’t know what’s the perfect tradeoff here. But, I hope that this extended description of how these variables interact will give you a solid background for confidently tweaking them in your production app. Inspecting the number of currently active connections using the metadata SQL query and playing with different threading and pool settings together with Siege load testing can be a great way to better understand how these values impact each other.

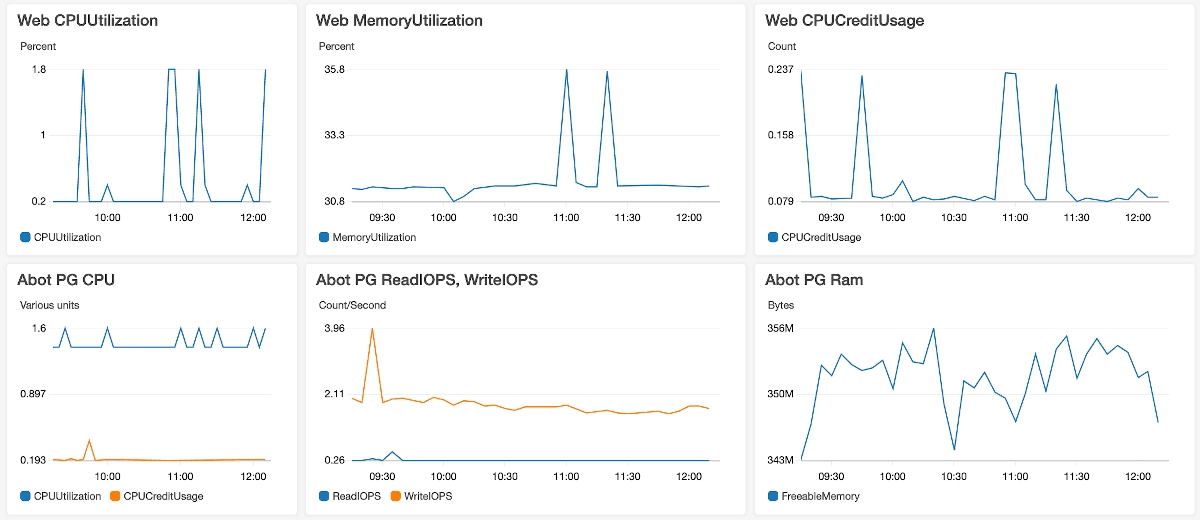

Comprehensive monitoring is critical if you want to introduce load_async to a production app with non-trivial traffic. I’d highly recommend adding alerts for a number of active database clients and memory usage. That’s another area where AWS RDS is superior to Heroku PostgreSQL addon thanks to seamless CloudWatch integration:

You can read more about moving your Heroku db to AWS RDS in my other blog post.

A recommended use case for load_async in production

Now we understand why asynchronous queries should always be introduced with care. Database connections are a precious resource and should only be assigned to hand-picked places where it’s likely to make the best overall impact.

You can use calls and outliers methods of Rails PG Extras to detect slow queries that are using a significant ratio of your db processing time. Then with the help of the marginalia gem, you can trace the endpoints that generate those queries. You can find more info about using Rails PG Extras for improving database performance in this blog post.

Once you have these pieces of data, you can decide if there are places that eat up most of your apps’ processing time, and the time is spent in the database layer. In that case, it’s possible that slow queries could be parallelized and result in a significant overall improvement.

Another suitable use case for async SQL are endpoints that, in addition to slow queries, perform 3rd party HTTP requests. Adding load_async to an SQL query statement before an HTTP call would effectively parallelize it reducing a response time.

Continuous observation and iterative approach are critical when applying performance fixes for non-trivial Rails apps. Before adding load_async to any of the queries, you should double-check if speeding it up and paying the price of additional database connections is potentially worth it.

Miscellaneous

Async eager loading for relations

N+1 queries are the top performance killer for Rails applications. Most of the time, they can be easily avoided by using so-called eager loading. I wrote about it in more detail in my other blog post.

Currently load_async does not seem to support eager loaded queries. Running:

@users = User.slow.includes(:comment).load_async; sleep 2; @usersProduces:

ASYNC User Load (0.0ms) (db time 1003.1ms)

Comment Load (20.0ms)So, relation seems to be loaded synchronously inside a main thread. But, anyway there would probably be little benefit for backgrounding eager loading queries because they are usually fetching data over well-indexed foreign keys. Right now a related PR appears to be on hold.

Asynchronous SQL inside a transaction

If you try to run an async query inside a transaction, it would fallback to synchronous execution:

ActiveRecord::Base.transaction do

@users = User.slow.load_async; sleep 2; @users

endProduces:

TRANSACTION (2.1ms) BEGIN

User Load (2005.3ms) ...

TRANSACTION (2.7ms) COMMITYou have to remember that the infamous ActiveRecord callback methods (excluding after_commit) are always implicitly wrapped in a database transaction. It means that asynchronous queries would never work there.

Another non-obvious context where all the calls are wrapped in a transaction is a Rails console sandbox mode. Running:

rails console --sandboxSpawns a new console process, and all the database changes are rolled back on exit. So, you have to remember that it’s impossible to test load_async inside a sandbox mode.

Summary

Before Rails 7, it was possible to wrap SQL calls into an asynchronous thread and add parallelism to database interactions. But, DIY solutions were a highly probable source of sneaky bugs. The new built-it way for scheduling async queries comes with additional thread-safety and data consistency guarantees. I believe that if used with care, load_async has the potential to significantly improve many typical performance bottleneck scenarios.

I did try to do in-depth enough research on how the load_async API works and include all the relevant info. But, given its newness and complexity, I’m sure new interesting facts start popping up once it becomes more widely adopted in production environments. I’m planning to keep this guide up to date, so please let me know if you find that some info is missing or inaccurate.