I’m not sure if the world needed another post about N+1 queries in Ruby on Rails. To make up for the cliche topic, I’ll describe less common solutions to this problem. Read on if you want to learn how to reduce the number of cascading N+1 SQL queries without using includes or additional table join operations.

N+1 queries 101

N+1 queries are the top performance killer for Rails applications. ActiveRecord makes it just too easy to misuse your database structure. Consider the following code:

# app/models/post.rb

class Post < ApplicationRecord

belongs_to :user

def author_name

user.name

end

end

# app/models/user.rb

class User < ApplicationRecord

has_many :posts

end

# app/controllers/posts_controller.rb

class PostsController < ApplicationController

def index

@posts = Post.published

end

endapp/views/posts/index.html.erb

<ul>

<% @posts.each do |post| %>

<li>

<%= post.title %>

<%= post.author_name %>

</li>

<% end %>

</ul>With the above implementation, you’ll see the following SQL queries in your logs:

Post Load SELECT "posts".* FROM "posts" WHERE "state" = "published"

User Load SELECT "users".* FROM "users" WHERE "users"."id" = 1 LIMIT 1

User Load SELECT "users".* FROM "users" WHERE "users"."id" = 2 LIMIT 1

User Load SELECT "users".* FROM "users" WHERE "users"."id" = 3 LIMIT 1

...An additional User Load SQL query is generated for every single loaded post. Since ActiveRecord needs additional data from another table to render the page, it will implicitly run those queries. If you’re loading dozens of objects, a single request could generate hundreds of SQL queries overwhelming your database and killing the performance.

To fix it, you’ll have to change your controller’s code to use so-called eager loading:

class PostsController < ApplicationController

def index

@posts = Post.published.includes(:user)

end

endAs a result, you’ll see the following SQL logs:

Post Load SELECT "posts".* FROM "posts" WHERE "state" = "published"

User Load SELECT "users".* FROM "users" WHERE "users"."id" IN (...)You’ll notice that regardless of the number of objects, only two queries are now generated. One fetches all the required posts, the other its users.

Now that we’ve covered the basics, let’s discuss the promised non-standard solutions to the N+1 problem.

Cache and reuse duplicate queries

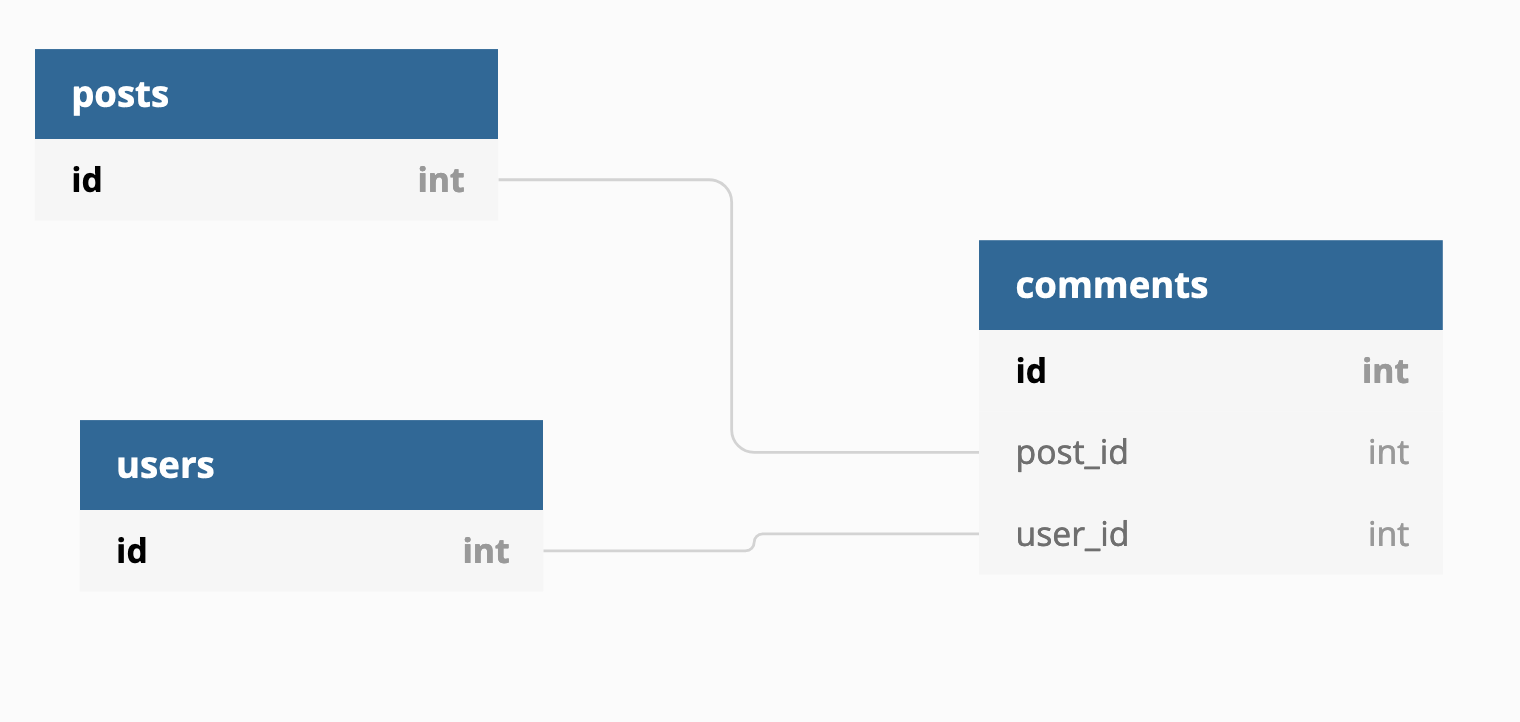

Spotting reusable query results is invaluable in optimizing the performance of your app. Consider the app with the following structure:

Posts have many Comments that belong to Users. Let’s assume that we have a page where we want to display users’ data and posts with comments.

app/controllers/posts_controller.rb

class PostsController < ApplicationController

def index

@posts = Post.published.includes(comments: :user)

@users = User.active

end

endapp/views/posts/index.html.erb

<div>

<% @users.each do |user| %>

<div>

<%= user.nickname %>

<%= user.email %>

</div>

<% end %>

<% @posts.each do |post| %>

<div>

<%= post.title %>

<%= post.content %>

<%= post.comments.each do |comment| %>

<%= comment.content %>

<%= comment.user.nickname %>

<% end %>

</div>

<% end %>

</div>The above code is already eager loading all the required data and will generate four queries. One to load posts, one for comments, and two to load users’ data.

Assuming that users’ data loaded by two queries is identical, we could rewrite the above example in the following way:

app/controllers/posts_controller.rb

class PostsController < ApplicationController

def index

@posts = Post.published.includes(:comments)

@users = User.active

@users_cache = @users.reduce({}) do |agg, user|

agg[user.id] = user

agg

end

end

endapp/views/posts/index.html.erb

<div>

<% @users.each do |user| %>

<div>

<%= user.nickname %>

<%= user.email %>

</div>

<% end %>

<% @posts.each do |post| %>

<div>

<%= post.title %>

<%= post.content %>

<%= post.comments.each do |comment| %>

<%= comment.content %>

<%= @users_cache.fetch(comment.user_id).nickname %>

<% end %>

</div>

<% end %>

</div>You render comments’ user data from an instance variable @users_cache generated from data fetched by a single query. This approach will cut an unnecessary Users query while still avoiding the N+1 issue.

Make sure always to measure the results of similar refactorings in production environment. In this particular example, we’ve removed an additional query. However, it might turn out that constructing the @cached_users hash could be slower than fetching the duplicate data using SQL.

I understand that the above example might seem a bit convoluted. However, in mature Rails apps, it’s not uncommon to fetch different nested models with interdependent relations to render a more complex HTML view.

You can check out this blog post for more info on how to speed up ActiveRecord performance by caching subqueries.

Use primitive values instead of objects

Let’s consider the following scope method:

class Post < ApplicationRecord

scope :by_same_author, -> (post) {

where(user_id: post.user.id)

}

endCan you spot what’s wrong?

The problem is that you cannot use this method without executing an additional query. You’re passing a full-blown ActiveRecord Post object that must instantiate another User object from its relation.

To minimize the number of unnecessary queries, you could rewrite this method like that:

class Post < ApplicationRecord

scope :by_author, -> (user_id) {

where(user_id: user_id)

}

endNow you’re passing primitive values. Fewer queries and less memory are needed to execute this method. Designing your public APIs on low-level objects will make them simpler to use and potentially more performant.

Add shortcut relationships

The Law of Demeter is not always strictly followed in legacy Rails apps. It’s not uncommon to encounter code like that:

class Post < ApplicationRecord

belongs_to :user

def author_last_active_at

user.account.activity.last_active_at

end

endExecuting this method will generate three additional SQL queries to fetch and instantiate required objects. These kinds of methods are the worse. Rendering even a dozen of objects could potentially overflow your database with hundreds of queries.

A potential solution to this issue is to add a shortcut to your database structure to account for data access requirements. If your Post model necessarily needs to access data from Account and additional queries are killing the performance, you can consider creating a direct connection between models. It’s kind of a dirty trick, but it could help you speed things up significantly for bottleneck endpoints.

With simplified relationships, the above example could now look like that:

class Post < ApplicationRecord

belongs_to :user

belongs_to :activity

def author_last_active_at

activity.last_active_at

end

endWatch out, since overusing this approach can quickly lead to your database structure becoming an unmaintainable mess.

Duplicate relationship data

An even more extreme variant of the above technique is duplicating data and putting it directly in a model that needs it. The upside is that it allows you to eliminate any additional queries completely. In this approach Post model itself is a caching layer for the Account model attribute.

However, it comes with a separate range of challenges for syncing data between models. You’ll have to update the Post object whenever his Account changes. I’d only resort to this technique for some extreme bottleneck endpoints for which reducing an additional query will make a difference.

Summary

Adding includes to your database call is usually the most straightforward and best choice for common N+1 issues. However, for some more rare and complex cases, these other techniques could be worth considering.