Improving the performance of a Rails application can be a challenging and time-consuming task. However, there are some config tweaks that are often overlooked but can make a significant difference in response times. In this tutorial, I will focus on a few “quick & easy” fixes that can have an immediate impact on the speed of your Rails app.

Eliminate request queue time

Request queue time indicates that your web servers don’t have enough capacity to process the incoming traffic. Unless you’re using a PaaS like Heroku, you have to set an extra header in your NGINX config so that request queuing data can be reported:

```nginx
# In the location block that proxies requests to your Rails app
proxy_set_header X-Request-Start "t=${msec}";
```

This config allows popular APM tools to report the current queue time. Without it, it’s impossible to know the real backend response time experienced by your users.
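APM agents do this calculation for you, but a small sketch shows what the header actually enables. It assumes the `t=<seconds>.<milliseconds>` format that `${msec}` produces. The `QueueTime` module, the `QueueTimeMiddleware` class and the `app.queue_time_ms` env key are hypothetical names for illustration, not part of Rails or any APM gem.

```ruby
# Hypothetical helpers: derive request queue time from the X-Request-Start
# header set by NGINX, e.g. "t=1700000000.123".
module QueueTime
  HEADER_FORMAT = /\At=(\d+(?:\.\d+)?)\z/

  # Returns queue time in milliseconds, or nil when the header is missing
  # or malformed. `now` is the current Unix time in seconds (Float).
  def self.ms_from_header(header, now)
    return nil if header.nil?

    match = HEADER_FORMAT.match(header.strip)
    return nil unless match

    started_at = Float(match[1])
    # Clock skew between the NGINX host and the app host can make the
    # difference negative, so clamp it at zero.
    [(now - started_at) * 1000.0, 0.0].max
  end
end

# Minimal Rack-style middleware exposing the value to the rest of the app.
class QueueTimeMiddleware
  def initialize(app, clock: -> { Time.now.to_f })
    @app = app
    @clock = clock
  end

  def call(env)
    # Rack exposes the X-Request-Start header as HTTP_X_REQUEST_START.
    env["app.queue_time_ms"] =
      QueueTime.ms_from_header(env["HTTP_X_REQUEST_START"], @clock.call)
    @app.call(env)
  end
end
```

Injecting the clock keeps the middleware testable. In production, the NGINX and app hosts should keep their clocks synchronized, otherwise the numbers are meaningless.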

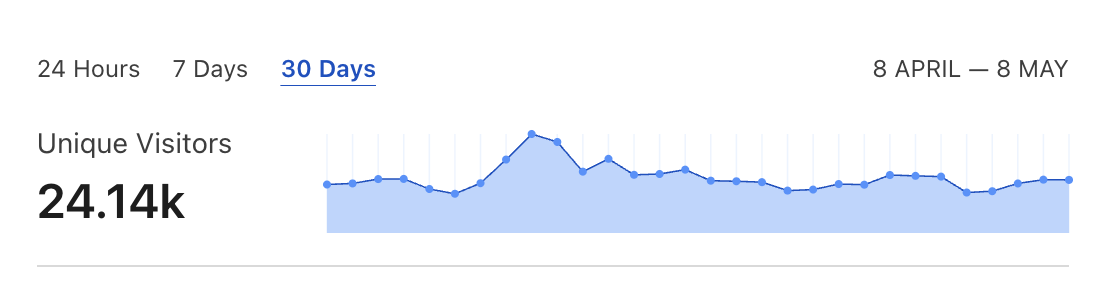

Request Queuing metric

The presence of queue time is both good and bad news. Your users experience longer response times, but you can usually resolve it by spinning up more server instances. Before throwing money at a dozen new Heroku dynos, check whether you can extract more throughput without increasing costs.

The Heroku docs recommend values of WEB_CONCURRENCY and RAILS_MAX_THREADS for different dyno types. It’s worth noting that Performance-M dynos offer the worst cost-to-performance ratio and should usually be replaced by a single Performance-L or a fleet of Standard-2X dynos.

I usually try to run as many Puma workers as possible while keeping RAM usage below 80%. A relatively simple way to decrease memory usage is to use jemalloc instead of the default memory allocator, which reduces memory bloat caused by fragmentation. I’ve seen memory usage drop by 15-20% after adding jemalloc. This usually allowed us to safely add one more Puma worker on the same server/dyno type and increase the app’s throughput. You can check out my other blog post for more info on how to use jemalloc in Rails apps and other tips on reducing memory usage.
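The 80% rule boils down to simple arithmetic. Here is a rough Ruby sketch. The dyno size and per-worker memory are hypothetical placeholders to replace with values from your own metrics, and the estimate ignores effects such as copy-on-write memory sharing between forked workers.

```ruby
# Rough estimate of how many Puma workers fit on a server/dyno while keeping
# RAM usage under a ceiling (80% by default). All inputs are in megabytes.
def max_puma_workers(total_mb:, per_worker_mb:, master_mb: 0, ceiling: 0.8)
  budget = total_mb * ceiling - master_mb
  return 0 if budget <= 0

  (budget / per_worker_mb).floor
end

# Hypothetical example: a 1024 MB dyno with workers using ~300 MB each.
without_jemalloc = max_puma_workers(total_mb: 1024, per_worker_mb: 300)
# Assume jemalloc trims per-worker memory by ~15% (low end of the range above).
with_jemalloc = max_puma_workers(total_mb: 1024, per_worker_mb: 300 * 0.85)
puts "workers without jemalloc: #{without_jemalloc}, with jemalloc: #{with_jemalloc}"
```

With these made-up numbers, a 15% reduction is exactly what turns two workers into three on the same dyno.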

Enable cache and Redis connection pool

Unless you’re on the newest Rails version, your app’s caching layer may be misconfigured. Production Rails apps often use Redis or Memcached as their in-memory cache backend. But by default, the cache client uses a single connection shared by all threads in a process. Default support for a connection pool was added only around a year ago. So, if you’re using Rails < 6.1 with a Puma web server, multiple Puma threads compete for a single cache client connection.

You can enable a connection pool for your caching client with config options similar to these:

config/environments/production.rb

```ruby
# pool: syntax from newer Rails; older versions use pool_size: and pool_timeout: instead
config.cache_store = :mem_cache_store, "cache.example.com",
  pool: { size: 5, timeout: 5 }
```

If your app uses Redis directly, it could have the same problem. It’s a popular pattern to expose a Redis client via a global variable:

```ruby
require "redis"

# A single client (and connection) shared by every thread in the process
$redis = Redis.new(url: ENV.fetch("REDIS_URL"))

value = $redis.get("key-name")
```

But this means that in multithreaded processes like Puma or Sidekiq, all threads share one connection, so concurrent calls have to wait for each other. Redis usually responds in ~1ms, but if you’re using it extensively, dozens of blocked calls could add up to a measurable overhead.

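You can resolve it by leveraging the connection_pool gem:

```ruby
require "connection_pool"
require "redis"

# ENV values are strings, so convert the pool size to an Integer.
$redis_pool = ConnectionPool.new(size: Integer(ENV.fetch("RAILS_MAX_THREADS", 5))) do
  Redis.new(url: ENV.fetch("REDIS_URL"))
end

# Check out a connection for the duration of the block, then return it to the pool.
value = $redis_pool.with do |conn|
  conn.get("key-name")
end
```

Fine-tune the database connection pool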

Balancing the number of web server and background worker threads with the database pool and max connections can be challenging. The pool setting in the config/database.yml file determines how many database connections a single Ruby process can open.

If you’re using a multithreaded process like Puma or Sidekiq, make sure that the value of pool is at least equal to the maximum number of threads. Otherwise, your app could start raising ActiveRecord::ConnectionTimeoutError when a thread cannot obtain a database connection within the checkout timeout (checkout_timeout, 5 seconds by default). The sum of all possible connections should not exceed PostgreSQL’s max_connections setting. But you cannot set max_connections to an arbitrary value: multiple concurrent connections increase the load on the database, so its specs must be adjusted accordingly.
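A quick way to sanity-check these numbers is to add up the worst case across all processes. The sketch below uses hypothetical counts and assumes each process’s pool equals its thread count. Remember that every Puma worker and every Sidekiq process is a separate Ruby process with its own pool.

```ruby
# Worst-case number of PostgreSQL connections the app can open, assuming
# each process's `pool` is set to exactly its thread count.
def total_connections(web_instances:, puma_workers:, puma_threads:,
                      sidekiq_processes:, sidekiq_concurrency:)
  web = web_instances * puma_workers * puma_threads
  jobs = sidekiq_processes * sidekiq_concurrency
  web + jobs
end

# Hypothetical deployment: 3 web dynos x 2 Puma workers x 5 threads,
# plus 2 Sidekiq processes with 10 threads each.
needed = total_connections(web_instances: 3, puma_workers: 2, puma_threads: 5,
                           sidekiq_processes: 2, sidekiq_concurrency: 10)
max_connections = 100 # hypothetical PostgreSQL max_connections value
puts "needed: #{needed}, headroom: #{max_connections - needed}"
```

It’s worth leaving some headroom for Rails consoles, migrations, and monitoring tools, which open connections too.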

It’s easy to overlook a pool misconfiguration. For example, threads could be waiting for database access for a period shorter than the checkout timeout, adding performance overhead but never raising the error.
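Both failure modes follow from how a checkout-based pool works. Below is a simplified, hypothetical `MiniPool` in plain Ruby. It is not ActiveRecord’s or the connection_pool gem’s actual implementation, and the sizes and timeouts are arbitrary. Threads block until a connection is free and raise only once the wait exceeds the timeout. Shorter waits never surface as errors; they just add latency.

```ruby
# Hypothetical, simplified connection pool illustrating checkout/checkin
# with a timeout (not the real ActiveRecord or connection_pool code).
class MiniPool
  class TimeoutError < StandardError; end

  def initialize(size:, timeout:, &factory)
    raise ArgumentError, "a block that builds a connection is required" unless factory

    @available = Array.new(size) { factory.call } # connections created eagerly
    @timeout = timeout
    @mutex = Mutex.new
    @released = ConditionVariable.new
  end

  # Lends a connection to the block and always returns it afterwards.
  def with
    conn = checkout
    begin
      yield conn
    ensure
      checkin(conn)
    end
  end

  private

  def checkout
    deadline = now + @timeout
    @mutex.synchronize do
      while @available.empty?
        remaining = deadline - now
        raise TimeoutError, "no connection available within #{@timeout}s" if remaining <= 0

        # Sleep until another thread returns a connection or time runs out.
        @released.wait(@mutex, remaining)
      end
      @available.pop
    end
  end

  def checkin(conn)
    @mutex.synchronize do
      @available.push(conn)
      @released.signal
    end
  end

  def now
    Process.clock_gettime(Process::CLOCK_MONOTONIC)
  end
end

# Demo: 4 threads share 2 connections and each "query" takes ~100 ms,
# so two threads wait silently for a free connection.
pool = MiniPool.new(size: 2, timeout: 1) { Object.new }
waits = Queue.new
threads = 4.times.map do
  Thread.new do
    started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
    pool.with do |_conn|
      waits << Process.clock_gettime(Process::CLOCK_MONOTONIC) - started
      sleep 0.1
    end
  end
end
threads.each(&:join)
slowest_wait = Array.new(waits.size) { waits.pop }.max
puts format("slowest checkout wait: %.0f ms (no error raised)", slowest_wait * 1000)
```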

Be extra careful when using the load_async API introduced in Rails 7. It runs queries on background threads, so a single request can hold more than one database connection at a time and exhaust the pool faster. You can read about it in more detail in my other blog post.

Customize PostgreSQL config

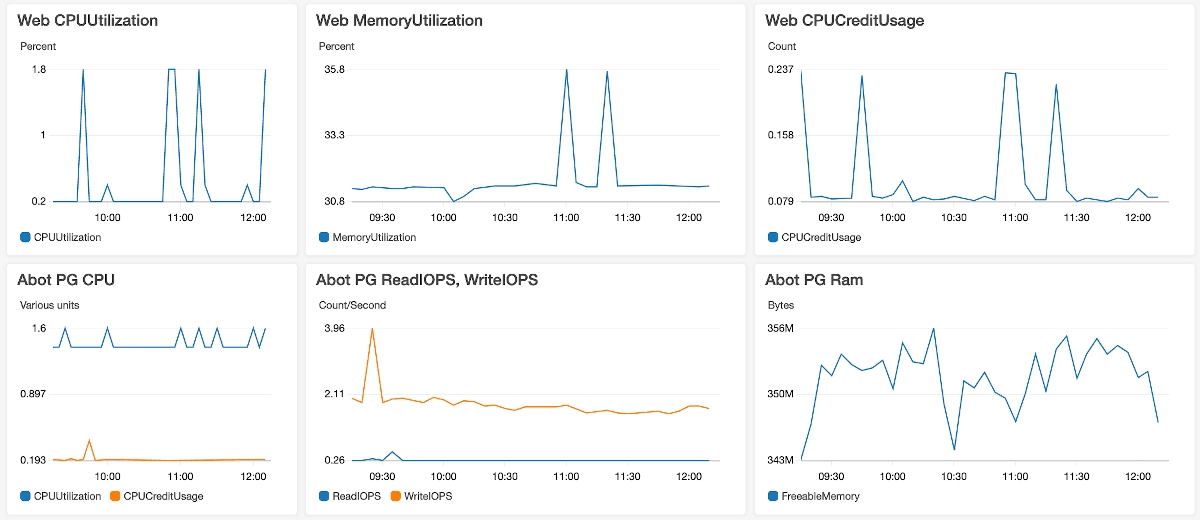

Depending on what PostgreSQL provider your app uses, you might be able to tweak the database config. More extensive control is another reason why I recommend migrating the Heroku database to RDS.

If your PostgreSQL is handling larger datasets, tweaking the default config will likely increase SQL performance. A few examples:

random_page_cost is set to 4.0 by default. It is the query planner’s estimated cost of fetching a disk page from a random location, relative to seq_page_cost (1.0 by default), so it determines how likely the planner is to use an index scan instead of a sequential scan. The default value of 4.0 originates from the random read speed of HDDs. It is no longer relevant when databases use much faster SSDs for storage. Setting this value between 1.1 and 2.0 will make better use of database indexes and reduce expensive sequential scans.
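To see why lowering random_page_cost tips the planner toward indexes, here is a deliberately simplified cost model in Ruby. It only compares page-fetch costs; PostgreSQL’s real planner also accounts for CPU costs, caching, and index correlation. The table and page counts are hypothetical.

```ruby
SEQ_PAGE_COST = 1.0 # PostgreSQL's default seq_page_cost

# Toy model: a sequential scan reads every table page in order, while an
# index scan fetches only the matching pages, at random positions.
def preferred_scan(table_pages:, pages_fetched_via_index:, random_page_cost:)
  seq_cost = table_pages * SEQ_PAGE_COST
  index_cost = pages_fetched_via_index * random_page_cost
  index_cost < seq_cost ? :index_scan : :seq_scan
end

# Hypothetical query touching 3,000 of a table's 10,000 pages.
[4.0, 1.1].each do |cost|
  choice = preferred_scan(table_pages: 10_000, pages_fetched_via_index: 3_000,
                          random_page_cost: cost)
  puts "random_page_cost=#{cost}: #{choice}"
end
```

At 4.0, fetching 3,000 random pages looks more expensive (12,000) than reading all 10,000 sequentially; at 1.1 it costs only 3,300, so the index wins.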

work_mem determines how much memory a single sort or hash operation can use before spilling to temporary files on disk. A complex query can run several such operations, each with its own allowance. The default value is 4MB. Depending on your app’s usage patterns, this setting might throttle SQL queries doing more complex in-memory sorting and hash table operations. This value has to be tweaked carefully because it could cause out-of-memory issues for the database. But ensuring that these operations have enough RAM will likely increase the performance of more complex queries.
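work_mem is a limit per sort or hash operation, and one query can run several of them, so a back-of-the-envelope worst case is connections × operations per query × work_mem. The Ruby sketch below uses hypothetical numbers (100 active connections, 3 sort/hash operations per query) and ignores parallel workers and other multipliers. Real workloads rarely peak all at once, but it shows how quickly a generous value adds up.

```ruby
# Rough upper bound (in MB) on memory used by sort/hash operations.
def worst_case_work_mem_mb(connections:, ops_per_query:, work_mem_mb:)
  connections * ops_per_query * work_mem_mb
end

default = worst_case_work_mem_mb(connections: 100, ops_per_query: 3, work_mem_mb: 4)
tuned = worst_case_work_mem_mb(connections: 100, ops_per_query: 3, work_mem_mb: 64)
puts "work_mem 4MB: up to #{default} MB, work_mem 64MB: up to #{tuned} MB"
```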

shared_buffers sets how much RAM PostgreSQL dedicates to its own data cache, while effective_cache_size tells the query planner how much memory is likely available for caching overall, including the operating system’s file cache. Performant databases should read ~99% of data from the in-memory cache. You can use the cache_hit query from rails-pg-extras to inspect your app’s database cache usage. In some cases, increasing the default values is likely to speed up the database layer.
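The ratio itself is simple arithmetic over PostgreSQL’s block statistics (for example, the heap_blks_hit and heap_blks_read columns of pg_statio_user_tables): blocks served from the buffer cache divided by all blocks requested. A minimal Ruby sketch with made-up counters:

```ruby
# Fraction of table blocks served from PostgreSQL's buffer cache.
# Returns nil when there is no activity yet.
def cache_hit_ratio(blks_hit, blks_read)
  total = blks_hit + blks_read
  return nil if total.zero?

  blks_hit.fdiv(total)
end

ratio = cache_hit_ratio(9_950, 50) # hypothetical counters
verdict = ratio >= 0.99 ? "OK" : "consider giving the cache more RAM"
puts format("cache hit ratio: %.3f (%s)", ratio, verdict)
```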

Optimize HTTP layer config

All the previous tips relate to backend performance. But a typical web app issues dozens of requests on initial load, and only a few of them are backend-related (e.g., website HTML, API calls). The majority of requests are for static assets, JavaScript libraries, and images. Therefore, optimizing the frontend-related requests can have a much greater effect on perceived performance than fine-tuning the backend.

Check out my other blog post for more detailed tips on optimizing frontend performance. But since I’ve promised “quick and easy” fixes, let’s cover two of the potentially most impactful frontend config tweaks.

Client-side caching

Correctly configuring client-side caching could be the most critical frontend optimization. There’s no better way to speed up an HTTP request than not to trigger it at all. But I’ve seen it misconfigured in multiple production apps. Rails has a great mechanism for leveraging client-side caching: content digests in asset filenames. In the production environment, the application.js file becomes application-523bf7...95c125.js. The suffix is generated from the file contents, so it is guaranteed to change when the file changes. You must add the correct Cache-Control header to make sure that, once downloaded, the file persists in the browser cache:

```
cache-control: public, max-age=31536000, immutable
```

max-age=31536000 is one year in seconds. The immutable directive tells supporting browsers not to revalidate the file even when the user explicitly reloads the page.
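The fingerprinting idea can be sketched in a few lines of Ruby. This illustrates the concept and is not the asset pipeline’s actual code: the `fingerprint` helper is hypothetical, and SHA-256 is an arbitrary choice of digest for the sketch.

```ruby
require "digest"

# Hypothetical helper: derive a fingerprinted filename from file contents,
# e.g. "application.js" -> "application-<hex digest>.js".
def fingerprint(filename, contents)
  ext = File.extname(filename)
  base = File.basename(filename, ext)
  "#{base}-#{Digest::SHA256.hexdigest(contents)}#{ext}"
end

v1 = fingerprint("application.js", "console.log('v1');")
v2 = fingerprint("application.js", "console.log('v2');")
puts v1
puts v2
```

Because any change to the contents produces a new URL, it’s safe to let browsers cache each URL for a year: after a deploy, pages simply reference the new filename and the old one is never requested again.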

You have to enable it in your Rails production config:

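config/environments/production.rb

```ruby
# Only needed on older Rails versions; newer ones use public_file_server.headers below
config.static_cache_control = 'public, max-age=31536000, immutable'

config.public_file_server.headers = {
  'Cache-Control' => 'public, max-age=31536000, immutable',
  # Evaluated once at boot; Cache-Control max-age takes precedence over Expires
  'Expires' => 1.year.from_now.httpdate
}
```

Or, if you’re using NGINX as a reverse proxy, you can use the following directive: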

```nginx
# Only safe for fingerprinted files: non-digested assets matching this
# pattern would also be cached for a year.
location ~* \.(?:ico|css|js|gif|jpe?g|png|woff2)$ {
  add_header Cache-Control "public, max-age=31536000, immutable";
  try_files $uri =404;
}
```

I’ve seen apps using ETag and Last-Modified headers instead of Cache-Control. Like the digest, an ETag identifies a specific version of the file, but the client has to ask the server to confirm that its cached version is still valid. This means that on every page visit, the browser has to issue a request to validate its cache contents and wait for a 304 Not Modified response. This completely unnecessary network roundtrip can be avoided by adding a Cache-Control header.

Use Cloudflare CDN

Using Cloudflare for your DNS with proxying enabled could be the simplest way to get most of your frontend-related config in order. It will be especially impactful if you’re using Heroku, which still does not support HTTP/2 or even native Gzip compression… (╯°□°)╯︵ ┻━┻

With the Cloudflare proxy enabled, your static assets are cached in CDN edge locations close to your end users, so they download faster. Combined with correct client-side caching headers, subsequent requests for the same assets will not be triggered at all because the data is already cached in the browser.

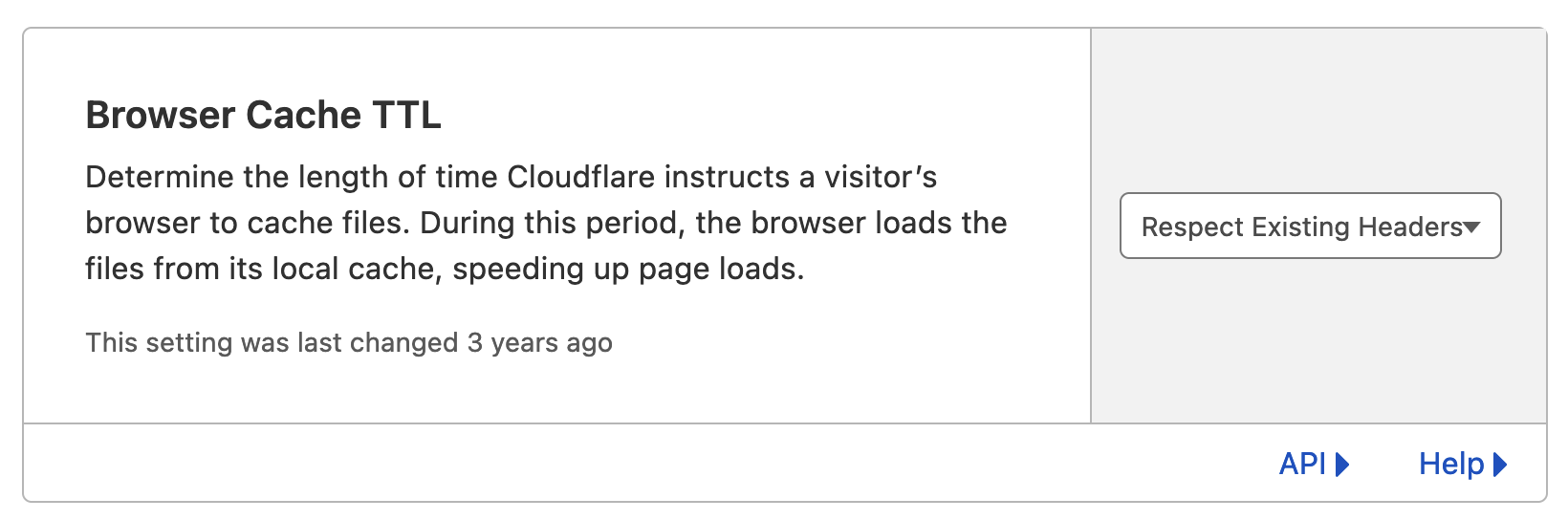

Please remember to set the Browser Cache TTL setting to Respect Existing Headers to regain fine-grained control and avoid stale cache issues.

In addition to a global CDN, Cloudflare provides the following benefits for your app:

- automatic gzip/brotli compression

- HTTP/2 support

- DDoS protection

- Signed Exchanges (SXG) support, which can benefit the Largest Contentful Paint (LCP) metric and SEO (available only on paid plans)

And many more features.

You can use PageSpeed Insights and WebPageTest to get a quick overview of your app’s frontend performance.

Summary

Optimizing the configs mentioned above is a great starting point for in-house performance tuning. They tend to affect performance metrics across the whole app, so the development time spent tweaking them will probably yield measurable benefits.