PostgreSQL database locks usually work seamlessly until they don’t. Before your Rails app dataset and traffic reach a certain scale, you’re unlikely to face any locks-related issues. But if your app suddenly slows down to a crawl, deadlocks likely are to blame. In this blog post, I’ll describe how to monitor database locks and propose best practices to prevent them from causing issues.

PostgreSQL database locks 101

Locks in PostgreSQL are a mechanism for ensuring data consistency in multithreaded environments. This blog post is not trying to be a comprehensive introduction to how locks and related MVVC (Multi-Version Concurrency Control) system works in PG databases. Instead, I’ll focus on the basics to help us understand how locks affect Ruby on Rails apps’ performance and stability.

It’s important to understand that every single write to the database acquires a lock. Well-behaved databases usually commit changes in a few ms, so observing related locks is not straightforward. As a workaround, you can simulate a long-lasting lock by running a similar code in the Rails console:

user = User.last

User.transaction do

user.update(email: "email-#{SecureRandom.hex(2)}@example.com")

sleep 25

endNow in a separate Rails console process run the locks method from rails-pg-extras gem:

RailsPgExtras.locks(in_format: :hash)

# [

# {

# "pid": 64,

# "relname": "users_pkey",

# "transactionid": null,

# "granted": true,

# "mode": "RowExclusiveLock",

# "query_snippet": "UPDATE \"users\" SET \"email\" = $1 WHERE \"users\".\"id\" = $2",

# "age": "PT0.954817S",

# "application": "bin/rails"

# },

# {

# "pid": 64,

# "relname": "users",

# "transactionid": null,

# "granted": true,

# "mode": "RowExclusiveLock",

# "query_snippet": "UPDATE \"users\" SET \"email\" = $1 WHERE \"users\".\"id\" = $2",

# "age": "PT0.954817S",

# "application": "bin/rails"

# },

# {

# "pid": 64,

# "relname": "index_users_on_email",

# "transactionid": null,

# "granted": true,

# "mode": "RowExclusiveLock",

# "query_snippet": "UPDATE \"users\" SET \"email\" = $1 WHERE \"users\".\"id\" = $2",

# "age": "PT0.954817S",

# "application": "bin/rails"

# },

# {

# "pid": 64,

# "relname": "index_users_on_api_token",

# "transactionid": null,

# "granted": true,

# "mode": "RowExclusiveLock",

# "query_snippet": "UPDATE \"users\" SET \"email\" = $1 WHERE \"users\".\"id\" = $2",

# "age": "PT0.954817S",

# "application": "bin/rails"

# },

# ...

# ]As you can see, even a straightforward single-column update operation acquires an array of RowExclusiveLock locks on a corresponding table and all the indexes. Usually, this process works seamlessly behind the scenes. A lock affecting a single row is not critical (apart from slowing down a single request/job) as long as it’s not blocking other queries.

But let’s see what happens when we try to update the same row concurrently.

In the first Rails console process run:

user = User.last

User.transaction do

user.update(email: "[email protected]")

sleep 25

endThen in the second one:

User.last.update(email: "email-#{SecureRandom.hex(2)}@example.com")and now, in the third Rails console process, we can investigate the collision of concurrent update operations:

RailsPgExtras.blocking(in_format: :hash)

# [

# {

# "blocked_pid": 64,

# "blocking_statement": "UPDATE \"users\" SET \"email\" = $1 WHERE \"users\".\"id\" = $2",

# "blocking_duration": "PT20.450116S",

# "blocking_pid": 152,

# "blocked_statement": "UPDATE \"users\" SET \"email\" = $1 WHERE \"users\".\"id\" = $2",

# "blocked_duration": "PT8.145238S"

# }

# ]As you can see, the acquired lock prevents the second SQL statement from finishing. It’s reported by blocking method from rails-pg-extras. In practice, a web server request trying to execute this SQL statement would be stuck until the first lock is released. This is how misbehaving locks can kill the performance of your application or even cause downtime by exhausting available web server connections.

Monitoring PostgreSQL locks in production

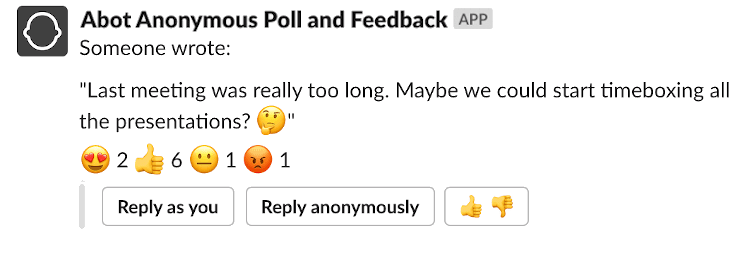

You’re unlikely to sprinkle sleep all over your codebase to simplify investigating database locks. Here’s a sample implementation of a Sidekiq job that can help you monitor long-lasting locks and blocked SQL statements in production:

config/initializers/pg_monitoring.rb

LocksMonitoringJob.perform if ENV["PG_MONITOR_LOCKS"] == "true"app/jobs/locks_monitoring_job.rb

class LocksMonitoringJob

include Sidekiq::Worker

sidekiq_options retry: false

PG_MIN_LOCK_DURATION_MS = ENV.fetch("PG_MIN_LOCK_DURATION_MS") { 200 }.to_f

PG_MONITORING_INTERVAL_SECONDS = ENV.fetch("PG_MONITORING_INTERVAL_SECONDS") { 5 }.to_i

SLACK_WEBHOOK_URL = ENV.fetch("SLACK_WEBHOOK_URL")

def perform

locks = RailsPgExtras.locks(in_format: :hash).select do |lock|

(ActiveSupport::Duration.parse((lock.fetch("age")).to_f * 1000) > PG_MIN_LOCK_DURATION_MS

end

if locks.present?

Slack::Notifier.new(

SLACK_WEBHOOK_URL,

channel: "pg-alerts"

).ping locks.to_s

end

blocking = RailsPgExtras.blocking(in_format: :hash).select do |block|

(ActiveSupport::Duration.parse((block.fetch("blocking_duration")).to_f * 1000) > PG_MIN_LOCK_DURATION_MS

end

if blocking.present?

Slack::Notifier.new(

SLACK_WEBHOOK_URL,

channel: "pg-alerts"

).ping blocking.to_s

end

ensure

LocksMonitoringJob.perform_in(PG_MONITORING_INTERVAL_SECONDS) if ENV["PG_MONITOR_LOCKS"] == "true"

end

endWe use an initializer file to schedule the first task and can optionally disable it by removing PG_MONITOR_LOCKS variable. The job schedules itself every configurable PG_MONITORING_INTERVAL_SECONDS and reports locks and blocked queries that cross a PG_MIN_LOCK_DURATION_MS threshold using slack-notifier gem (check out Slack docs for info on how to configure incoming webhooks). You can tweak the ENV variables to adjust monitoring sensitivity based on your app’s requirements.

If your app is running heavyweight background jobs, you’ll likely have to manually exclude some queries from being raported. And if your current database performance is far-from-perfect, you should bump up the PG_MIN_LOCK_DURATION_MS and gradually lower it when you manage fix the cause of long-lasting locks. Excluding background jobs and data migrations, a healthy database should acquire exclusive locks for a maximum of ~100-200ms.

Running a similar monitoring task can help you spot many lock-related issues early on before they start affecting end users.

How to resolve lock-related issues in Rails apps

So your Slack alerts channel is spammed with long-lasting locks and blocked query notifications. What now?

Overusing ActiveRecord::Base.transaction scope

I’ve seen misbehaving locks with a duration of a few seconds. Surprsingly, the underlying cause wasn’t even directly caused by the database layer. ActiveRecord makes it too easy to wrap any arbitrary chunk of code into a database transaction. Have a look at the following example:

app/models/user.rb

after_create :send_welcome_email

def send_email

EmailSender.send_welcome_email(self)

endActiveRecord callbacks are a usual way to trigger side effects based on the model object’s lifecycle. For example, sending a welcome email to newly registered users is a common use case. Let’s keep discussing whether similar logic belongs in a model aside for now. Instead, let’s focus on how the database layer will react to a similar implementation.

Something to watch out for is that excluding after_commit, all the AR callbacks are implicitly wrapped in a database transaction. So, the above implementation would keep a RowExclusiveLock on the user object for the whole time needed to connect to 3rd party API and send an email. So, instead of the expected max ~200ms threshold, our lock could last up to 60 seconds in case there’s an API timeout.

This example might seem contrived. But I’ve seen multiple Rails apps suffering from long-lasting locks caused by implicit 3rd party HTTP calls executed inside database transactions.

The hidden complexity of Rails ActiveRecord makes it too easy to commit similar mistakes, even for more experienced devs. That’s why proper locks monitoring can help you spot similar issues, even if they go unnoticed during the code review.

Other ways to fix long-lasting locks

Apart from limiting the scope of ActiveRecord transactions, the “simplest” way to decrease locks duration is to optimize the performance of the database layer. Check out this blog post for an in-depth guide on analyzing and fixing PostgreSQL performance with the help of rails-pg-extras gem.

But, fine-tuning SQL queries is often a more time-involved process. So, here’s a list of “quick & easy” ad hoc solutions to try before you manage to deploy proper fixes. These are “hacks”, but they might help if your app is currently unstable because of locks-related issues.

1. Throttle Sidekiq jobs

One way to limit the occurances of locks is to reduce the concurrency of database commits. It will limit the scalability and throughput of your app. But the tradeoff might be worth it if you’re experiencing locks-related downtimes.

I usually recommend implementing the Sidekiq config file in a similar way:

config/sidekiq.yml

:concurrency: <%= ENV.fetch("SIDEKIQ_CONCURRENCY") { 5 } %>

:queues:

- urgent

- default

# ...Even if you’re currently not planning to tweak the concurrency setting, using an ENV variable will allow you to easily change this value without doing a code-change release. A panic fix could be to throttle concurrency down to 1 to minimize concurrent locks’ impact before you apply the proper solution.

Alternatively, if you know which Sidekiq job is causing the issue, you could throttle it from running concurrently with the help of the sidekiq-unique-jobs gem. This is also a good solution for more heavyweight jobs to limit the max memory usage of Sidekiq.

2. Limit the app’s concurrency

Similar throttling can be done for web app processes. Limiting your app’s concurrency could reduce performance by increasing request queue time. But, it’s possible that the throttled app will be more performant because blocking SQL queries are limited.

You can implement your Puma web server config in a similar way to easily tweak settings without a need for code release:

config/puma.rb

threads_count = ENV.fetch("RAILS_MAX_THREADS") { 5 }

threads threads_count, threads_count

workers ENV.fetch("WEB_CONCURRENCY") { 2 }

# ...3. Kill stuck connections

RailsPgExtras.locks and RailsPgExtras.blocking could report that a single long-running query is blocking dozens of other database commits. Depending on the scale of the issue, a similar traffic jam could cause many of your web server connections to get stuck and your app to become unresponsive. A “panic button” solution for this issue that could potentially restore your app’s responsiveness is to kill the offending connection. rails-pg-extras blocking method provides a blocking_pid value you can use to cherry-pick and terminate the stuck SQL query. Additionally, you can use RailsPgExtras.connections to verify a process name that generated the problematic query. It could be helpful for debugging.

You can terminate an SQL query/connection based on its PID value by running the following code:

RailsPgExtras.kill_pid(args: { pid: 4657 })Optionally, if your database is a bad enough shape that killing a single connection is not enough, you can run:

RailsPgExtras.kill_allRemember to restart all the web and background worker processes to prevent ghost connections from hanging around.

As I’ve mentioned, these are not proper fixes, but rather “band-aids” that might help in case your app is unstable.

Summary

PostgreSQL database locks usually work seamlessly behind the scenes. But, following the mentioned best practices and configuring correct monitoring can save you a lot of headaches. A proactive approach to monitoring lock-related issues can help you resolve most of the problems before they start affecting your users.