Performance issues in the PostgreSQL layer usually start to manifest only when your app reaches a certain scale. In this blog post, I’ll discuss easy-to-miss database config tweaks and common problems that I’ve encountered in multiple Rails projects.

Tuning random_page_cost in PostgreSQL

random_page_cost is infamously misconfigured in the default PostgreSQL settings. This value represents how expensive it is to fetch a row using a database index. A related seq_page_cost represents the cost to fetch a row using an in-memory sequential scan. The default value of 4 for random_page_cost compared to 1 for seq_page_cost indicates that it’s significantly more expensive to fetch data using indexes (stored on disk). Unfortunately, this value comes from ancient times when databases still used HDD disks.

If your database uses an SSD disk (hint: it does), these default settings will likely harm your app’s performance. As a result your database is less likely to choose an index instead of a sequential scan. Seq scans are both CPU and memory-intensive. To find matching rows, the database engine has to well scan each row individually. It’s like doing a for loop over a large array instead of fetching a value based on its hash key.

Let’s dig a bit deeper and see how EXPLAIN query plan changes with different values for random_page_cost.

Understanding random_page_cost using query planner

Let’s assume we’re working with the following data model:

# db/migrate/***_create_users.rb

class CreateUsers < ActiveRecord::Migration::Current

def change

create_table :users do |t|

t.string :email, null: false

t.datetime :deleted_at

t.datetime :created_at

end

add_index :users, :deleted_at

end

end

# app/models/user.rb

class User < ApplicationRecord

endI’m using PostgreSQL version 14, and the users table was seeded to ~100k objects. ~10% of objects were marked as soft-deleted. You might get different results from a similar setup. This post aims not to accurately explain the PostgreSQL query planner but rather show how tweaking one specific config can affect it.

Let’s start by running the following snippet:

User.where("deleted_at IS NOT NULL").explain

# Index Scan using index_users_on_deleted_at on users

# (cost=0.29..409.32 rows=10173 width=165)

# Index Cond: (deleted_at IS NOT NULL)You can see that by default, this query uses a corresponding index on a deleted_at column. cost is estimated to be up to 409.32. It is an abstract way for PostgreSQL to rank different query plans and choose the one with the lowest cost.

You can check the current value of random_page_cost by running:

ApplicationRecord.connection.execute("SHOW random_page_cost")Let’s see what happens if we reduce random_page_cost from 4 to 1.1:

ApplicationRecord.connection.execute("SET random_page_cost TO 1.1")

User.where("deleted_at IS NOT NULL").explain

# Index Scan using index_users_on_deleted_at on users

# (cost=0.29..365.82 rows=10173 width=165)

# Index Cond: (deleted_at IS NOT NULL)You can see that the selected query plan did not change, but its theoretical cost was reduced from 409.32 -> 365.82. random_page_cost indicates how expensive it is to use an index, so reducing its value also reduces the cost.

Let’s now go all crazy and set it to 400:

ApplicationRecord.connection.execute("SET random_page_cost TO 400")

User.where("deleted_at IS NOT NULL").explain

# Seq Scan on users

# (cost=0.00..2682.64 rows=10173 width=165)

# Filter: (blocked_from IS NOT NULL)We’ve tricked the poor query planner into thinking that indexes are so ineffective that it had to fallback to a sequential scan. So, we’ve demonstrated that tweaking this value can influence query plan selection for the same dataset.

It’s worth noting that you can safely tweak values like that even on production. The change only affects the currently active session so it will be discarded when you close the Rails console. Please also remember that cost is a theoretical value used only to select the query plan; it does not directly affect query execution time.

How to disable index and sequential scans?

Now that we’ve covered the basics of how random_page_cost affects query plans, let’s investigate how tweaking enable_seqscan, enable_indexscan, and enable_bitmapscan works.

Let’s start with our previous example:

User.where("deleted_at IS NOT NULL").explain

# Index Scan using index_users_on_deleted_at on users

# (cost=0.29..409.32 rows=10173 width=165)

# Index Cond: (deleted_at IS NOT NULL)Nothing new to see here. Let’s now try to disable index scans:

ApplicationRecord.connection.execute("SET enable_indexscan TO off;")

ApplicationRecord.connection.execute("SET enable_bitmapscan TO off;")

User.where("deleted_at IS NOT NULL").explain

# Seq Scan on users (cost=0.00..2682.64 rows=10173 width=165)

# Filter: (deleted_at IS NOT NULL)As you can see, disabling index scans had the same effect as increasing the value of random_page_cost, i.e., the query planner had to use a sequential scan.

Let’s now test another case:

User.where("created_at IS NOT NULL").explain

# Seq Scan on users (cost=0.00..2682.64 rows=100064 width=165)

# Filter: (pseudonym IS NOT NULL)created_at column has no corresponding index, so we default to the sequential scan. Let’s now try to disable it:

ApplicationRecord.connection.execute("SET enable_seqscan TO off")

User.where("created_at IS NOT NULL").explain

# Seq Scan on users (cost=10000000000.00..10000002682.64 rows=100064 width=165)

# Filter: (pseudonym IS NOT NULL)Tweaking this flag skyrocketed the query cost by 10000000000. But you can see that despite “disabling” sequential scans, our query planner has no other choice but to keep using it. There’s no alternative plan possible.

Running EXPLAIN on bottleneck queries while tweaking different config values is a fantastic way to improve your understanding of the query planner.

Measuring the impact of changing random_page_cost

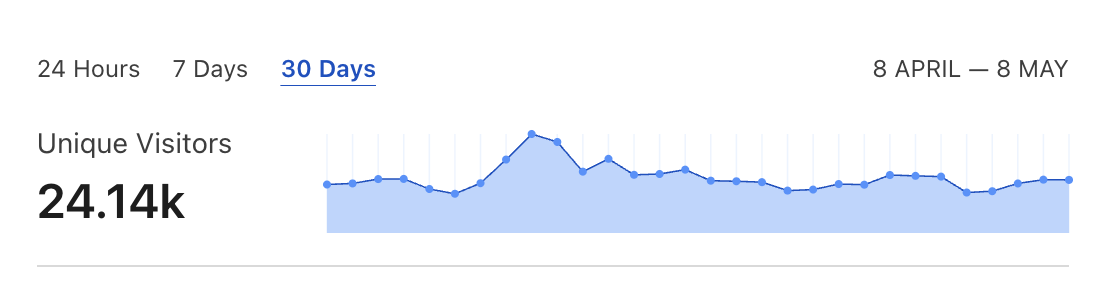

While conducting my Rails performance audits, I always try to showcase the impact of applied changes. Clients are usually not happy with just “it should feel faster now”, so I always try to show them a “chart going down”.

However, in complex systems, it’s not straightforward to isolate an effect of a single change from, e.g., periodical traffic fluctuations.

Tweaking random_page_cost will impact your system globally. But it might affect only a subset of queries, i.e., only have an effect for specific query arguments.

Luckily there’s a simple way to confirm that reducing this value from the default 4 to the recommended 1.1 positively affected the app. You can check the number of unused indexes before and after this change. To do it you can use the RailsPgExtras unused_indexes method:

RailsPgExtras.unused_indexes(in_format: :hash).countAlternatively you can save a list of unused indexes before and after this change to later verify which got activated. Depending on your app’s traffic and usage patterns you should probably wait a few hours or even days observe the impact of this change.

I’ve observed that the number of unused indexes reduced as a result of applying this change in multiple Rails projects. I’d risk saying that most Rails apps will likely benefit from changing random_page_cost from the default 4 to 1.1.

Null indexes

I’ve seen a range of improvements as an effect of removing unused indexes. Each index has a performance overhead, slowing down write operations, increasing autovacuum time, and increasing the duration of conflicting database locks. So removing non-unique indexes that don’t receive any scans is a no-brainer (one exception is indexes for small tables, i.e., with less than 100k objects).

But there’s a second category of indexes that’s often overlooked. These are indexes that contain mostly NULL values, so-called null indexes. RailsPgExtras null_indexes method can help you quickly check if your app has this issue.

A typical example of a null index is a foreign key of an optional relation. I’ve seen indexes with sizes of over 50GB where 99% of values were NULL. As a result of reindexing them with the NOT NULL condition, we’ve measured the same impact as from a complete removal of an unused index.

You can run the following SQL commands to get rid of NULL values from an index:

DROP INDEX CONCURRENTLY users_reset_token_id;

CREATE INDEX CONCURRENTLY users_reset_token_id_not_null ON users(reset_token_id)

WHERE reset_token_id IS NOT NULL;Adjusting work_mem PostgreSQL setting

According to PostgreSQL docs, work_mem “Sets the base maximum amount of memory to be used by a query operation”. Let’s see it in action:

User.where("deleted_at IS NOT NULL").order(:deleted_at).analyze

# SELECT * FROM users WHERE deleted_at IS NOT NULL ORDER BY discarded_at ASC

# Sort (cost=42398.84..42852.74 rows=181559 width=129) (actual time=433.045..613.256 rows=10000 loops=1)

# Sort Key: deleted_at

# Sort Method: quicksort Memory: 6081kB

# -> Seq Scan on users (cost=0.00..26539.59 rows=181559 width=129) (actual time=0.367..240.388 rows=10000 loops=1)

# Filter: (deleted_at IS NOT NULL)

# Rows Removed by Filter: 90102In these examples, we’re using the analyze method to get the exact query time. Unless you’re on the newest Rails 7.2.0, you’ll have to use my activerecord-analyze gem to enable it.

You can see that as a result of the ORDER BY keyword inside a query, our EXPLAIN plan reports an in-memory Sort operation using ~6000kb of memory. Let’s now see what will happen if we decrease the available work_mem:

ApplicationRecord.connection.execute("SET work_mem='64kB'")

User.where("deleted_at IS NOT NULL").order(:deleted_at).analyze

# SELECT * FROM users WHERE deleted_at IS NOT NULL ORDER BY deleted_at ASC

# Sort (cost=25975.28..25975.29 rows=181559 width=129) (actual time=2021.479..2917.788 rows=10000 loops=1)

# Sort Key: deleted_at

# Sort Method: external merge Disk: 4328kB

# -> Seq Scan on users (cost=0.00..25975.28 rows=1 width=129) (actual time=1.662..960.472 rows=10000 loops=1)

# Filter: (deleted_at IS NOT NULL)

# Rows Removed by Filter: 90102Now, our active session does not have enough RAM available to perform the Sort in-memory. As a result, it’s falling back to on-disk sort, which is over 4x slower than the previous example 613 -> 2917.

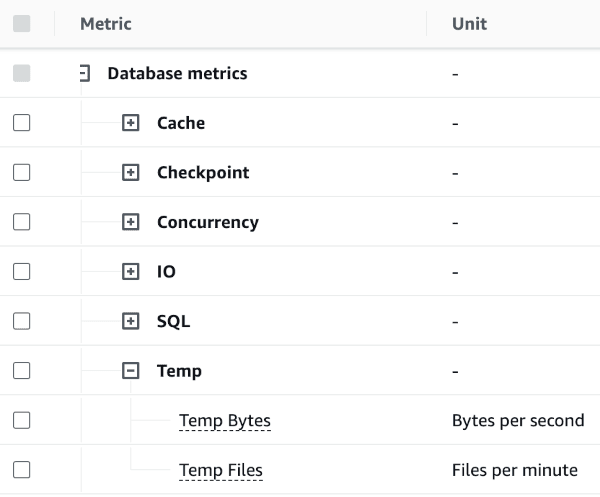

If you’re using an AWS RDS database, you can check if your application is currently using on-disk temp files because the memory cap is too low. This metric is a bit hidden. First, go to RDS Performance Insights, then click Metrics, select Custom dashboard, and then Add widget. You can expand Database metrics -> Temp and add both Temp bytes and Temp files to your dashboard.

If your app is currently using the default work_mem value of 4MB it’s likely that your database does slow on-disk operations for a subset of queries. Please be aware that not only ORDER BY, but also DISTINCT and IN SQL keywords can trigger such behavior. You can confirm that by analyzing the RDS metrics mentioned above.

PGTune is your best friend for determining the correct value of work_mem for your database. But contrary to the random_page_cost, you should not set the target value right away. Instead I suggest increasing the value in steps by 2x while observing the database memory usage (ideally with correct Cloudwatch alerts configured). Increasing work_mem does not mean that each connection will suddenly start maxing out its memory limits. But if your database is underscaled, then increasing work_mem by 16x could cause the out of memory issues or even downtime. Luckily, this is a dynamic setting, so you can tweak its value without restarting the database.

Thanks to the Temp files and Temp bytes metrics you should be able to observe a beautiful “chart going down” as a result of tweaking this setting. Additionally, you can measure which queries were affected using the RailsPgExtras outliers method. First, you have to run:

RailsPgExtras.pg_stat_statements_resetTo start gathering fresh data for a few days. Later, tweak the value, run reset again, and compare results after the same period. You should see some queries with affected keywords (ORDER BY, DISTINCT, IN) disappear or see their total prop_exec_time significantly reduced.

Summary

By applying these techniques, you should be able to observe measurable improvements if your Rails app uses a larger dataset. I hope that the described ways to monitor the impact of PostgreSQL configuration tweaks will give you more confidence to try it in your project.