Heroku offers a robust backup system for its Postgres database add-on. Unfortunately, you can irreversibly lose all your data and backups by typing a single command. It might seem improbable, but I would rather not bet my startup’s existence on a single faulty bash line. In this tutorial, I will describe how to set up your own redundant Heroku PostgreSQL backup system that uploads to a secure AWS S3 bucket.

I will be covering various tools, including the AWS CLI, OpenSSL, GPG, Heroku buildpacks, and Heroku Scheduler, but you don’t need to be familiar with any of them. By following this guide, you will set up a reliable, custom backup system for a Heroku PostgreSQL database even if you don’t have much DevOps experience up your sleeve.

Let’s get started!

How to lose all the Heroku Postgres data

Though the first paragraph is a bit “clickbaity”, it’s actually true. Just by typing:

heroku apps:destroy app-name --confirm app-name

you can completely destroy the Heroku app, including all its add-ons, databases, and backup data.

Read on if you’d like to safeguard yourself against a potential total loss of your startup’s data.

Set up an encrypted AWS S3 bucket

Our redundant backup system will periodically upload encrypted snapshots of the PostgreSQL database to a secure AWS S3 bucket.

Let’s start with adding a correctly configured S3 bucket. For a more in-depth tutorial on how to work with AWS S3 buckets, you can check out my other article.

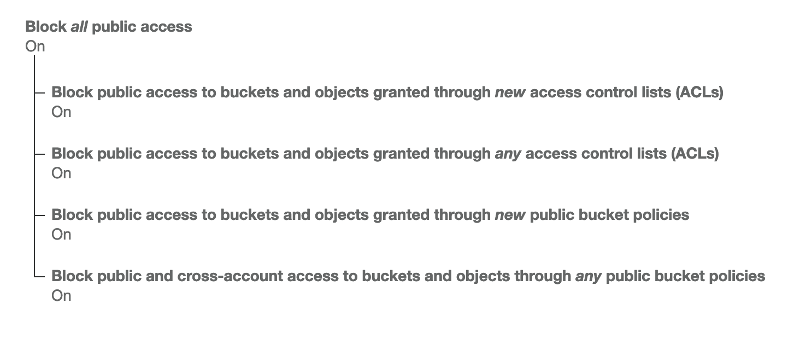

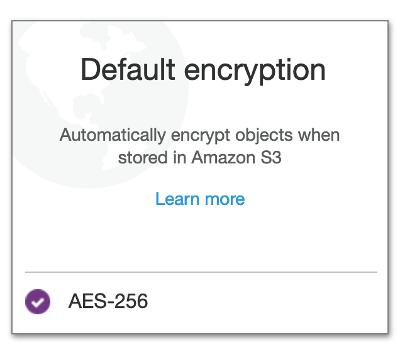

Make sure to disable public access and enable encryption for your new S3 bucket:
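If you prefer the command line to the web console, the same two settings can be sketched with the AWS CLI. This is a minimal sketch under a few assumptions: the function name `harden_backups_bucket` is hypothetical, the bucket already exists, and the CLI is authenticated with credentials that are allowed to change bucket settings (not the limited IAM user created later in this tutorial).

```shell
#!/usr/bin/env bash
# Sketch: lock down an existing backups bucket with the AWS CLI.
# Nothing runs until you call the function with a bucket name.

harden_backups_bucket() {
  local bucket="$1"

  # Block every form of public access to the bucket.
  aws s3api put-public-access-block \
    --bucket "$bucket" \
    --public-access-block-configuration \
    "BlockPublicAcls=true,IgnorePublicAcls=true,BlockPublicPolicy=true,RestrictPublicBuckets=true"

  # Turn on default server-side encryption (SSE-S3, AES-256).
  aws s3api put-bucket-encryption \
    --bucket "$bucket" \
    --server-side-encryption-configuration \
    '{"Rules":[{"ApplyServerSideEncryptionByDefault":{"SSEAlgorithm":"AES256"}}]}'
}
```

You would call it as `harden_backups_bucket your-backups-bucket`, replacing the placeholder with your own bucket name.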

Add an IAM user

You will need AWS credentials to upload the backup dump to the S3 bucket. One common mistake is to use your primary account credentials instead of creating an IAM user with limited permissions.

Check out the official docs for info on how to add the IAM user. For the backup system to work securely, you should use a custom policy for the IAM user. Check out my other tutorial for more in-depth info about configuring correct access rights on S3. To continue with the tutorial, you can add the following inline policy, which grants the user permissions for the single bucket only:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:ListBucket"
      ],
      "Resource": [
        "arn:aws:s3:::your-backups-bucket"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:PutObject",
        "s3:GetObject",
        "s3:PutObjectAcl"
      ],
      "Resource": [
        "arn:aws:s3:::your-backups-bucket/*"
      ]
    }
  ]
}

Make sure to copy both the AWS Access Key ID and the AWS Secret Access Key that are generated, because we’ll need them later.
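The inline policy can also be attached from the command line rather than the web console. The sketch below assumes the JSON above is saved locally as `policy.json`; the user name `heroku-backups-uploader`, the policy name `heroku-backups-s3-access`, and the function name are hypothetical placeholders, and the CLI must be authenticated with credentials that are allowed to manage IAM.

```shell
#!/usr/bin/env bash
# Sketch: attach the inline policy to an existing IAM user.
# The policy name below is an illustrative placeholder.

attach_backup_policy() {
  local user_name="$1" policy_file="$2"

  aws iam put-user-policy \
    --user-name "$user_name" \
    --policy-name heroku-backups-s3-access \
    --policy-document "file://${policy_file}"
}
```

For example: `attach_backup_policy heroku-backups-uploader policy.json`.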

Let’s move on to writing the actual backup script:

Backup bash script

We’ll need to install and configure the AWS CLI buildpack to upload our backup file to S3 from the Heroku dyno.

heroku buildpacks:add heroku-community/awscli
heroku config:set AWS_ACCESS_KEY_ID=[Your AWS Access Key ID]
heroku config:set AWS_SECRET_ACCESS_KEY=[Your AWS Secret Access Key]
heroku config:set AWS_DEFAULT_REGION=[Your S3 bucket region]
heroku config:set S3_BUCKET_NAME=[Your S3 bucket name]

Now, add another buildpack to enable Heroku CLI access from within the script:

# Prints a new API token; use its value in place of [TOKEN] below
heroku authorizations:create
heroku config:set HEROKU_API_KEY=[TOKEN]
heroku buildpacks:add heroku-community/cli

You also need to set a secure password that will be used to encrypt the database dump files before uploading them to S3. You can use OpenSSL for that:

heroku config:set PG_BACKUP_PASSWORD=$(openssl rand -base64 32)

Just make sure to save this password somewhere safe outside of Heroku. Otherwise, that destructive one-liner will also delete the config variable holding it and prevent you from decrypting the secondary backup.

You must also set your Heroku app name because it will be used by Heroku CLI to download the latest backup:

heroku config:set APP_NAME=app-name

Now let’s see the actual script, bin/pg_backup_to_s3:

#!/bin/bash

# Set the script to fail fast if there
# is an error or a missing variable
set -eu
set -o pipefail

# Download the latest backup from
# Heroku and gzip it
heroku pg:backups:download --output=/tmp/pg_backup.dump --app "$APP_NAME"
gzip /tmp/pg_backup.dump

# Encrypt the gzipped backup file
# using a GPG passphrase
gpg --yes --batch --passphrase="$PG_BACKUP_PASSWORD" -c /tmp/pg_backup.dump.gz

# Remove the plaintext backup file
rm /tmp/pg_backup.dump.gz

# Generate a backup filename based
# on the current date
BACKUP_FILE_NAME="heroku-backup-$(date '+%Y-%m-%d_%H.%M').gpg"

# Upload the file to S3 using
# the AWS CLI
aws s3 cp /tmp/pg_backup.dump.gz.gpg "s3://${S3_BUCKET_NAME}/${BACKUP_FILE_NAME}"

# Remove the encrypted backup file
rm /tmp/pg_backup.dump.gz.gpg

The AWS CLI reads the bucket region from the AWS_DEFAULT_REGION config variable set earlier, so make sure it matches your S3 bucket location.
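Because the script depends on several config variables, a small preflight check can make a missing one easier to spot than a bare `set -u` failure. The helper below is a hypothetical addition, not part of the original script; it is a minimal sketch that reports every missing variable at once.

```shell
#!/usr/bin/env bash
# Sketch: report all missing config variables before doing any work.
# Arguments must be plain variable names, because they are passed to eval.

require_env() {
  local missing=0 name value

  for name in "$@"; do
    # Look up the variable whose name is stored in $name.
    eval "value=\${${name}:-}"
    if [ -z "$value" ]; then
      echo "Missing required config variable: ${name}" >&2
      missing=1
    fi
  done

  return "$missing"
}
```

Near the top of the backup script you could then call `require_env APP_NAME S3_BUCKET_NAME PG_BACKUP_PASSWORD HEROKU_API_KEY AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_DEFAULT_REGION`.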

Now make the file executable by typing:

chmod +x bin/pg_backup_to_s3

Before you test it, make sure you have an up-to-date backup by running:

heroku pg:backups:capture

You can try running the script locally if you set all the required shell variables.

Now commit the changes to your repo and deploy them to Heroku. You can then test your script in the Heroku environment by typing:

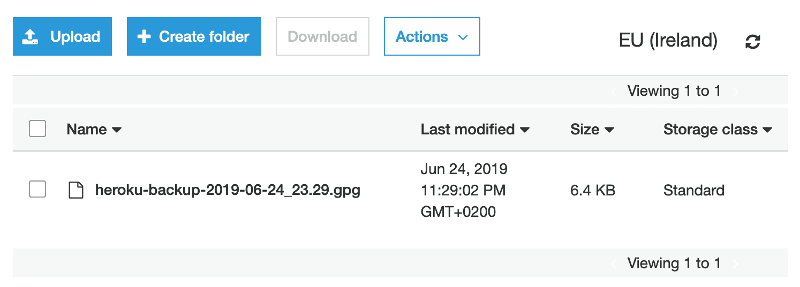

heroku run ./bin/pg_backup_to_s3

After the script finishes, you should see your encrypted backup file in the S3 bucket!

Troubleshooting

The script is a bit complex, so if it fails to upload the backup file to S3, you can try the following:

- change set -eu to set -eux for more verbose script output
- leave a comment; it took me a while to make it work, so I’m happy to help here ;)

Implementation without additional Heroku buildpacks

You can avoid the Heroku CLI buildpack by using pg_dump directly on the database:

pg_dump -Fc $DATABASE_URL > /tmp/pg_backup.dump

You might run into compatibility issues if your database version is different from the one supported by the pg_dump currently installed on your Heroku dynos.
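One way to catch such a mismatch early is to compare major versions before dumping, since pg_dump refuses to dump from a server newer than itself. The helper names below are hypothetical, and the sketch assumes PostgreSQL 10 or later (where the major version is the first number) and that `psql` is available on the dyno.

```shell
#!/usr/bin/env bash
# Sketch: compare pg_dump's major version with the server's.

# Extract the leading major version from strings such as
# "pg_dump (PostgreSQL) 14.5" or "14.5 (Ubuntu 14.5-1.pgdg20.04+1)".
pg_major_version() {
  printf '%s\n' "$1" | sed -E 's/^[^0-9]*([0-9]+).*/\1/'
}

check_pg_dump_compat() {
  local client server

  client="$(pg_major_version "$(pg_dump --version)")"
  server="$(pg_major_version "$(psql "$DATABASE_URL" -At -c 'SHOW server_version')")"

  if [ "$client" -lt "$server" ]; then
    echo "pg_dump ${client} is older than the server (${server})" >&2
    return 1
  fi
}
```

Calling `check_pg_dump_compat` before the `pg_dump` line would stop the script with a readable message instead of a failed dump.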

To upload to the S3 bucket without installing the AWS CLI, you could generate a one-time upload URL in the backend and pass it to the script. Later you could upload the file via cURL. Details on how to implement it are beyond the scope of this tutorial. You can find tips on client-side uploads with pre-signed URLs in my previous post.
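As a rough illustration of the cURL step only, the sketch below uploads a file to a URL that your backend has already pre-signed for an HTTP PUT. The function name and the `PRESIGNED_UPLOAD_URL` variable are hypothetical; how the URL is generated and handed to the script is left out, as above.

```shell
#!/usr/bin/env bash
# Sketch: upload a file to a pre-signed PUT URL without the AWS CLI.

upload_with_presigned_url() {
  local file="$1" url="$2"

  # --upload-file sends the file as the body of an HTTP PUT request;
  # --fail makes curl exit non-zero when the server returns an error.
  curl --fail --silent --show-error --upload-file "$file" "$url"
}
```

In the backup script this could replace the `aws s3 cp` line, for example `upload_with_presigned_url /tmp/pg_backup.dump.gz.gpg "$PRESIGNED_UPLOAD_URL"`.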

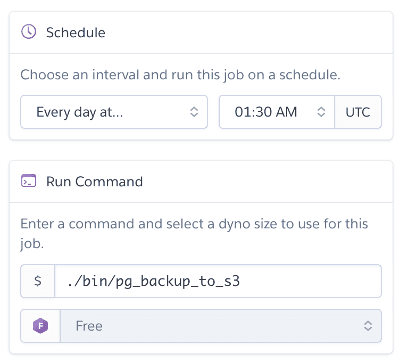

Use Heroku scheduler for automatic script execution

You can now run the backup script manually, so let’s make it automatic. Heroku Scheduler is a cron-like tool for running Heroku jobs at predefined intervals.

You can add it by typing:

heroku addons:create scheduler:standard
heroku addons:open scheduler

Then configure a daily job that runs ./bin/pg_backup_to_s3.

To make sure that the newest database dump is stored daily, you can schedule the Heroku backup to take place just before the scheduler runs the script:

heroku pg:backups:schedule DATABASE_URL --at '01:00'

That’s it. Now your Heroku PostgreSQL database will be backed up daily to your own secure S3 bucket. You might also want to consider adding a bucket lifecycle rule to remove older files and reduce storage costs.
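Such a lifecycle rule could be sketched with the AWS CLI as follows. The rule ID, the function names, and the 30-day retention used in the usage note are illustrative assumptions, and the command needs credentials that are allowed to change the bucket’s lifecycle configuration, which the limited IAM user above is not.

```shell
#!/usr/bin/env bash
# Sketch: expire backup files after a given number of days.

# Build the lifecycle JSON for objects whose key starts with "heroku-backup-".
lifecycle_config() {
  local days="$1"
  printf '{"Rules":[{"ID":"expire-old-backups","Status":"Enabled","Filter":{"Prefix":"heroku-backup-"},"Expiration":{"Days":%d}}]}' "$days"
}

apply_backup_lifecycle() {
  local bucket="$1" days="$2"

  aws s3api put-bucket-lifecycle-configuration \
    --bucket "$bucket" \
    --lifecycle-configuration "$(lifecycle_config "$days")"
}
```

For example, `apply_backup_lifecycle your-backups-bucket 30` would keep roughly a month of daily backups. Note that this call replaces any lifecycle configuration already set on the bucket.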

How to restore Heroku S3 PostgreSQL backup

It is a good practice to double check that your backups actually work and can be restored in case they are needed. Let me show you how to do it.

AWS CLI configuration

AWS CLI will be needed to restore the backup. You can install it locally by following this tutorial.

Now authenticate the AWS CLI by running:

aws configure

and entering your IAM user’s AWS Access Key ID and AWS Secret Access Key. You can just press ENTER when asked to provide the Default region name and Default output format.
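With the CLI authenticated, one way to find the most recent backup is to list the bucket and rely on the timestamped file names sorting chronologically. The function name below is a hypothetical helper, and the sketch assumes all backups sit at the top level of the bucket with the `heroku-backup-` prefix used by the script.

```shell
#!/usr/bin/env bash
# Sketch: print the key of the newest backup file in the bucket.

latest_backup_key() {
  local bucket="$1"

  # `aws s3 ls` prints "date time size key" for each object. Keep the key
  # column, filter to backup files, and take the last one in sorted order.
  aws s3 ls "s3://${bucket}/" \
    | awk '{print $4}' \
    | grep '^heroku-backup-' \
    | sort \
    | tail -n 1
}
```

For example: `latest_backup_key heroku-secondary-backups`.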

When it’s up and running, you can generate a short-lived download URL for your encrypted backup file. Let’s assume that its S3 path is s3://heroku-secondary-backups/heroku-backup-2019-06-25_01.30.gpg. You can download it with the following command:

wget $(aws s3 presign s3://heroku-secondary-backups/heroku-backup-2019-06-25_01.30.gpg --expires-in 5) -O backup.gpg

Once you have it on your local disk, you can decrypt it by running:

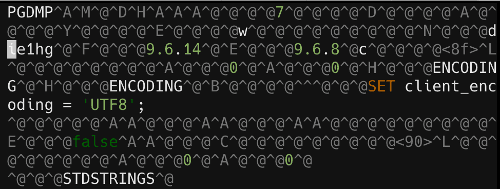

gpg --batch --yes --passphrase=$PG_BACKUP_PASSWORD -d backup.gpg | gunzip --to-stdout > backup.sql
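Before restoring, it may be worth a quick sanity check that decryption produced a PostgreSQL archive rather than garbage from a wrong passphrase. The sketch below assumes the backup is in pg_dump’s custom format, whose files begin with the bytes `PGDMP`; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: check that a file looks like a pg_dump custom-format archive.

is_pg_custom_dump() {
  # Custom-format archives start with the five bytes "PGDMP".
  [ "$(head -c 5 "$1")" = "PGDMP" ]
}
```

For example, `is_pg_custom_dump backup.sql || echo "backup.sql does not look like a PostgreSQL dump"`. If you have the PostgreSQL client tools installed, `pg_restore --list backup.sql` gives a more thorough check by printing the archive’s table of contents.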

Now you have to upload the decrypted version of the backup back to the S3 bucket, use it to restore the Heroku database, and remove it from the bucket right after it’s been used. We will start by testing it out on a newly provisioned database add-on:

heroku addons:create heroku-postgresql:hobby-dev

aws s3 cp backup.sql s3://heroku-secondary-backups/backup.sql

heroku pg:backups:restore $(aws s3 presign s3://heroku-secondary-backups/backup.sql --expires-in 60) HEROKU_POSTGRESQL_GRAY_URL -a app-name

aws s3 rm s3://heroku-secondary-backups/backup.sql

You can now check whether the content of your database looks correct by logging into it and running some queries:

heroku pg:psql HEROKU_POSTGRESQL_GRAY_URL

If everything looks OK, you can now restore the backup file to your production database:

aws s3 cp backup.sql s3://heroku-secondary-backups/backup.sql

heroku pg:backups:restore $(aws s3 presign s3://heroku-secondary-backups/backup.sql --expires-in 60) DATABASE_URL -a app-name

aws s3 rm s3://heroku-secondary-backups/backup.sql

Alternatively, you could promote the new database add-on as your new primary database:

heroku pg:promote HEROKU_POSTGRESQL_GRAY_URL

Summary

I hope this blog post will help you secure your Heroku app data against accidental loss. Keeping a secondary backup in your own secure S3 bucket is a best practice that every startup and non-trivial side project should follow.

I am not too much into devops, so tips on how this tutorial could be improved are welcome.