An EVM simulation engine is a core component of any competitive MEV strategy. In this tutorial, I’ll describe how to use Anvil and its lower-level counterpart REVM to detect Uniswap V3 MEV arbitrage opportunities. We will implement a working proof of concept using Alloy (a successor to ethers-rs). I’ll also discuss techniques for improving the performance and scalability of REVM-based simulations.

Disclaimer: The information provided in this blog post is for educational purposes only and should not be treated as financial advice.

Calculating Uniswap V3 arbitrage profit

We will not build a full-blown MEV bot in this tutorial. Instead, we will focus on a simulation engine for detecting arbitrage opportunities between Uniswap V3 pools on the Ethereum mainnet.

Uniswap V2 exchange rates are relatively straightforward to calculate off-chain (i.e., without using the eth_call RPC method to query smart contracts). In contrast, Uniswap V3 is vastly more complex, with core parts written in low-level Solidity assembly (Yul). Whole ebooks and hours-long YouTube series are dedicated to explaining its inner workings.

Dissecting DeFi protocols is an interesting challenge in itself. But to optimize the profitability of your MEV bot, you probably want to integrate dozens of protocols. Reverse engineering all of them for off-chain calculations probably won’t scale for most MEV searchers.

Note on benchmarking methodology: You can achieve the best EVM simulation performance by running a dedicated local node. You also don’t have to worry about provider rate limiting and network latency. But, in practice, it’s not always possible to spin up full nodes for every obscure EVM chain where you want to test your MEV strategies. That’s why in the subsequent benchmarks, I include measurements for both the local full Geth node running on the same VPS and the 3rd party (Alchemy Growth Plan, i.e., without rate limiting) RPC endpoint.

Checking performance with a 3rd party endpoint will highlight improvements from network-layer fixes. We will also measure the number of RPC calls triggered by different simulation strategies. Examples in the GitHub repo use a publicly available Infura RPC endpoint. Measured performance usually varies by ~10-20% between runs of each example, but the described improvements are consistent across multiple executions.

If you want to test the examples with a local full Geth node, you can check my tutorial on how to run it.

Limitations of eth_call

We will calculate the arbitrage opportunity between two Uniswap V3 ETH/USDC pairs. Uniswap V3 has multiple pools for the same ERC20 token pair, each using a different fee. In order to calculate the arbitrage, we have to know how much USDC we can buy for a specific WETH amount. Later, we will do the reverse calculation on the other pool, using that USDC amount as the input, to see if there’s any profit.

Uniswap V3 provides the QuoterV2 contract, which calculates the exchange rate between two ERC20 tokens. This is exactly what we need to detect arbitrage opportunities. Let’s see it in action.

All the code examples are available in this GitHub repo. At the time of the last article update, I’m using Rust compiler 1.81.0. Some helper function implementations and imports are omitted in the blog post for brevity.

Let’s start by implementing Alloy helper functions for encoding and decoding quoteExactInputSingle function data.

sol! {

struct QuoteExactInputSingleParams {

address tokenIn;

address tokenOut;

uint256 amountIn;

uint24 fee;

uint160 sqrtPriceLimitX96;

}

function quoteExactInputSingle(QuoteExactInputSingleParams memory params)

public

override

returns (

uint256 amountOut,

uint160 sqrtPriceX96After,

uint32 initializedTicksCrossed,

uint256 gasEstimate

);

}

pub fn quote_calldata(token_in: Address, token_out: Address, amount_in: U256, fee: u32) -> Bytes {

let zero_for_one = token_in < token_out;

let sqrt_price_limit_x96: U160 = if zero_for_one {

"4295128749".parse().unwrap()

} else {

"1461446703485210103287273052203988822378723970341"

.parse()

.unwrap()

};

let params = QuoteExactInputSingleParams {

tokenIn: token_in,

tokenOut: token_out,

amountIn: amount_in,

fee: U24::from(fee),

sqrtPriceLimitX96: sqrt_price_limit_x96,

};

Bytes::from(quoteExactInputSingleCall { params }.abi_encode())

}

pub fn decode_quote_response(response: Bytes) -> Result<u128> {

let (amount_out, _, _, _) = <(u128, u128, u32, u128)>::abi_decode(&response, false)?;

Ok(amount_out)

}

Alloy features this fancy sol! macro for embedding Solidity code directly into Rust. No more dumping unreadable JSON ABI blobs in your Rust project! As you can see, it can also handle complex data types like structs. Data encoding and decoding is compile-time safe.

The quote_calldata method returns ABI-encoded calldata for our quoting function. sqrt_price_limit_x96 represents the worst price that we are willing to accept. However, since our MEV strategy assumes that we will be the first to interact with the pool in the new block, we use the MIN/MAX values (depending on which token we exchange).

decode_quote_response decodes the output of the quoteExactInputSingle method and returns amount_out i.e., the value we’re interested in.
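To make the `zero_for_one` check in quote_calldata more concrete, here is a minimal standard-library sketch of the same comparison. It assumes addresses are compared as big-endian 20-byte values (the numerically smaller token is token0), and the `parse_address` helper is a hypothetical stand-in for Alloy's `Address` parsing. The USDC address is the well-known mainnet one and does not appear elsewhere in this post.

```rust
/// Parse a 0x-prefixed, 40-hex-digit address into 20 bytes (hypothetical helper).
fn parse_address(s: &str) -> [u8; 20] {
    let hex = s.trim_start_matches("0x");
    assert_eq!(hex.len(), 40, "expected 20 bytes of hex");
    let mut out = [0u8; 20];
    for (i, byte) in out.iter_mut().enumerate() {
        *byte = u8::from_str_radix(&hex[2 * i..2 * i + 2], 16).expect("invalid hex");
    }
    out
}

/// token0 is the numerically smaller address, so selling token0 means `zero_for_one == true`.
/// Byte arrays compare lexicographically, which matches big-endian numeric order.
fn zero_for_one(token_in: [u8; 20], token_out: [u8; 20]) -> bool {
    token_in < token_out
}

fn main() {
    let weth = parse_address("0xc02aaa39b223fe8d0a0e5c4f27ead9083c756cc2");
    let usdc = parse_address("0xa0b86991c6218b36c1d19d4a2e9eb0ce3606eb48");
    println!("WETH -> USDC zero_for_one: {}", zero_for_one(weth, usdc));
    println!("USDC -> WETH zero_for_one: {}", zero_for_one(usdc, weth));
}
```

Since the USDC address sorts below the WETH address, selling WETH for USDC takes the `else` branch in quote_calldata, i.e., the upper price limit.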

Getting UniswapV3 quote using eth_call

Now, let’s do the actual eth_call call. Here’s a working example:

#[tokio::main]

async fn main() -> Result<()> {

env_logger::init();

let provider = ProviderBuilder::new().on_http(std::env::var("ETH_RPC_URL").unwrap().parse()?);

let provider = Arc::new(provider);

let base_fee = provider.get_gas_price().await?;

let volume = one_ether().div(U256::from(10));

let calldata = quote_calldata(weth_addr(), usdc_addr(), volume, 3000);

let tx = build_tx(official_quoter_addr(), me(), calldata, base_fee);

let start = measure_start("eth_call_one");

let call = provider.call(&tx).await?;

let amount_out = decode_quote_response(call)?;

println!("{} WETH -> USDC {}", volume, amount_out);

measure_end(start);

Ok(())

}

We instantiate the Alloy HTTP provider from the RPC connection URL. Then, we fetch the current gas price and pass it to the build_tx helper function together with calldata from the quote_calldata method. Finally, we execute a static eth_call via the provider.call method and decode the response with decode_quote_response.

You can run it by typing:

cargo run --bin eth_call_one --release

# 100000000000000000 WETH -> USDC 294930306

# Elapsed: 9.59ms for 'eth_call_one' (F)

# Elapsed: 52.62ms for 'eth_call_one' (A)

The (F) suffix marks measurements done with the local full node, and (A) marks the 3rd party Alchemy node. Based on the output, it looks like we can buy ~295 USDC for 0.1 ETH at the time of writing. A single eth_call takes ~10ms on a local full node and is ~5x slower using the 3rd party provider.
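The measure_start and measure_end helpers are among those omitted from the post. A minimal sketch of how they could look, assuming they only wrap `std::time::Instant` and print in the format shown above (the tuple return type and the `format_elapsed` helper are my assumptions, not the repo's actual code):

```rust
use std::time::{Duration, Instant};

/// Start a named measurement (hypothetical stand-in for the repo's helper).
fn measure_start(label: &str) -> (String, Instant) {
    (label.to_string(), Instant::now())
}

/// Format an elapsed duration in the style of the benchmark output.
fn format_elapsed(label: &str, elapsed: Duration) -> String {
    format!("Elapsed: {:.2?} for '{}'", elapsed, label)
}

/// Stop the measurement, print it, and return the elapsed time.
fn measure_end(start: (String, Instant)) -> Duration {
    let elapsed = start.1.elapsed();
    println!("{}", format_elapsed(&start.0, elapsed));
    elapsed
}

fn main() {
    let start = measure_start("demo");
    // Some work to measure.
    let sum: u64 = (0..1_000_000u64).sum();
    println!("sum = {sum}");
    measure_end(start);
}
```

Note that the examples start the timer after the provider is built and the gas price is fetched, so the reported numbers cover only the quoting calls.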

Now run the example like this:

RUST_LOG=debug cargo run --bin eth_call_one --release

Since we’ve enabled logging, you should see similar output:

# DEBUG [alloy_rpc_client::call] sending request method=eth_call id=1

It means that our script triggered a single RPC eth_call request. It will come in handy later!

Sending multiple eth_call requests

To calculate optimal arbitrage, you usually have to test different volumes for each token pair. Let’s see a working example and measure its performance:

#[tokio::main]

async fn main() -> Result<()> {

env_logger::init();

let provider = ProviderBuilder::new().on_http(std::env::var("ETH_RPC_URL").unwrap().parse()?);

let provider = Arc::new(provider);

let base_fee = provider.get_gas_price().await?;

let volumes = volumes(U256::ZERO, one_ether().div(U256::from(10)), 100);

let start = measure_start("eth_call");

for (index, volume) in volumes.into_iter().enumerate() {

let calldata = quote_calldata(weth_addr(), usdc_addr(), volume, 3000);

let tx = build_tx(official_quoter_addr(), me(), calldata, base_fee);

let response = provider.call(&tx).await?;

let amount_out = decode_quote_response(response)?;

if index % 20 == 0 {

println!("{} WETH -> USDC {}", volume, amount_out);

}

}

measure_end(start);

Ok(())

}

In this example, we use the volumes helper function to generate 100 evenly spaced volumes between 0 and 0.1 ETH. Then, we loop through all these volumes to get quotes. Let’s see our benchmarks:

cargo run --bin eth_call --release

# Elapsed: 89.45ms for 'eth_call' (F)

# Elapsed: 4.39s for 'eth_call' (A)

You can see that for multiple RPC calls, networking overhead becomes significant. Using a 3rd party provider is now ~50x slower. I’m using a VPS located in the US, i.e., the same region as the Alchemy nodes. If calls had to travel across the ocean, the slowdown would be even greater.

If we run this example with RUST_LOG=debug you should see the following output:

# DEBUG [alloy_rpc_client::call] sending request method=eth_call id=100

It means that we triggered 100 RPC calls, 1 for each volume. There are ways to speed up this example by running requests in parallel. But it does not change the fact that in this way we’re spamming our node with RPC calls. Some 3rd party providers are likely to apply rate limiting so you’d have to throttle your bot to utilize this approach. In addition, continuously spamming your local node with RPC calls might degrade its performance and make it lag behind the blockchain head.

Let’s see how we can improve this example by leveraging Anvil.

How to use Anvil in Rust?

Anvil is one of the tools gifted to us by The Ripped Jesus. It can be used to fork EVM chains and run them locally. Let’s see how we can use it directly in Rust to optimize our previous example.

#[tokio::main]

async fn main() -> Result<()> {

env_logger::init();

let rpc_url: Url = std::env::var("ETH_RPC_URL").unwrap().parse()?;

let provider = ProviderBuilder::new().on_http(rpc_url.clone());

let provider = Arc::new(provider);

let base_fee = provider.get_gas_price().await?;

let fork_block = provider.get_block_number().await?;

let anvil = Anvil::new()

.fork(rpc_url)

.fork_block_number(fork_block)

.block_time(1_u64)

.spawn();

let anvil_provider = ProviderBuilder::new().on_http(anvil.endpoint().parse().unwrap());

let anvil_provider = Arc::new(anvil_provider);

let volumes = volumes(U256::ZERO, one_ether().div(U256::from(10)), 100);

let start = measure_start("anvil_first");

let first_volume = volumes[0];

let calldata = quote_calldata(weth_addr(), usdc_addr(), first_volume, 3000);

let tx = build_tx(official_quoter_addr(), me(), calldata, base_fee);

let response = anvil_provider.call(&tx).await?;

let amount_out = decode_quote_response(response)?;

println!("{} WETH -> USDC {}", first_volume, amount_out);

measure_end(start);

let start = measure_start("anvil");

for (index, volume) in volumes.into_iter().enumerate() {

let calldata = quote_calldata(weth_addr(), usdc_addr(), volume, 3000);

let tx = build_tx(official_quoter_addr(), me(), calldata, base_fee);

let response = anvil_provider.call(&tx).await?;

let amount_out = decode_quote_response(response)?;

if index % 20 == 0 {

println!("{} WETH -> USDC {}", volume, amount_out);

}

}

measure_end(start);

drop(anvil);

Ok(())

}

This time, the setup is more complex because we have to instantiate both provider and anvil_provider. anvil_provider talks directly to the Anvil process running on a local port. It’s good practice to call drop on the anvil instance to kill the process. If we did more work in this method, the process would linger around, eating up server resources.

The rest of the code is similar to our previous examples, but we measure the performance of the first call separately from the remaining 99. Can you guess why? Let’s see the results of our benchmark:

# Elapsed: 11.22ms for 'anvil_first' (F)

# Elapsed: 759.82ms for 'anvil_first' (A)

# Elapsed: 109.44ms for 'anvil' (F)/(A)

For Alchemy, the first calculation took ~760ms, but the remaining 99 took only ~110ms. The full node was ~70x faster for the first calculation, but the remaining 99 took a similar time for both RPC types. What’s going on?

Anvil works by implicitly fetching necessary data on demand. It means that the first call had to talk to our source RPC node and issue multiple requests (eth_getCode, eth_getStorageAt, eth_getBalance) to seed the data needed for our simulation. That is why we’ve arranged volumes in descending order. The largest Uniswap V3 swaps need the most data (so-called “ticks”).
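The volumes helper itself is not shown in the post. Here is a minimal sketch of what such a helper could look like, assuming evenly spaced steps returned largest-first so that the very first simulation pulls in the most tick data. It uses `u128` as a stand-in for Alloy's `U256` to stay dependency-free, and the exact signature is my assumption:

```rust
/// Generate `count` evenly spaced volumes in (from, to], largest first.
/// `u128` stands in for `U256`; 0.1 ETH in wei fits comfortably.
fn volumes(from: u128, to: u128, count: usize) -> Vec<u128> {
    assert!(count > 0 && to > from, "need a non-empty range");
    let step = (to - from) / count as u128;
    // Start at the upper bound and walk down one step at a time.
    (0..count as u128).map(|i| to - step * i).collect()
}

fn main() {
    let one_ether: u128 = 1_000_000_000_000_000_000;
    let vols = volumes(0, one_ether / 10, 100);
    println!("first: {}, last: {}, count: {}", vols[0], vols[vols.len() - 1], vols.len());
}
```

With this layout, the lower bound itself is never emitted, which avoids quoting a zero amount.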

To count the requests triggered by Anvil, you have to use RUST_LOG=trace. For our example, I’ve found 36 instances of sending request. In addition, there were still 100 instances of sending request method=eth_call, just like in the previous example. But these requests were targeting our local Anvil process, i.e., not spamming our origin full node.

By utilizing Anvil, we’ve reduced the number of external RPC calls from 100 to 36. As a result, we’ve improved the total execution time for Alchemy from ~5s to ~1s (5x better!), but performance for a local node is now ~30% worse (~90ms -> ~120ms).

Let’s see how we can take this optimization further by going lower-level with REVM.

How to use REVM for MEV simulations?

REVM is a Rust implementation of the Ethereum execution layer. Both Foundry (including Anvil) and RETH use it under the hood. Let’s see how we can rewrite our previous example with REVM.

pub type AlloyCacheDB = CacheDB<AlloyDB<Http<Client>, Ethereum, Arc<RootProvider<Http<Client>>>>>;

pub fn revm_call(

from: Address,

to: Address,

calldata: Bytes,

cache_db: &mut AlloyCacheDB,

) -> Result<Bytes> {

let mut evm = Evm::builder()

.with_db(cache_db)

.modify_tx_env(|tx| {

tx.caller = from;

tx.transact_to = TransactTo::Call(to);

tx.data = calldata;

tx.value = U256::from(0);

})

.build();

let ref_tx = evm.transact().unwrap();

let result = ref_tx.result;

let value = match result {

ExecutionResult::Success {

output: Output::Call(value),

..

} => value,

result => {

return Err(anyhow!("execution failed: {result:?}"));

}

};

Ok(value)

}

pub fn init_cache_db(provider: Arc<RootProvider<Http<Client>>>) -> AlloyCacheDB {

CacheDB::new(AlloyDB::new(provider, Default::default()))

}

We start by implementing the revm_call helper method. It’s standard boilerplate for executing REVM transactions. We execute a call based on the provided from and to addresses and calldata.

evm.transact().unwrap(); does the actual transaction execution without persisting changes to the database (similar to eth_call). If you want to test a sequence of transactions, you have to use evm.transact_commit().unwrap();

cache_db with the verbose type annotation represents an object for REVM data storage and retrieval. We initialize it using the init_cache_db helper method. It accepts a standard RPC provider. This setup allows it to implicitly fetch data needed for simulations, exactly like Anvil.
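The read-through behavior described above can be illustrated with a simplified, dependency-free model. This is not REVM's actual API: `RemoteNode` and `CachedState` are hypothetical names, and addresses and slots are shrunk to `u64` for brevity. The sketch only shows why the first simulation pays for the remote requests while later ones run from memory.

```rust
use std::collections::HashMap;

/// Stand-in for the origin node. Each call models one RPC round trip.
struct RemoteNode {
    requests: usize,
}

impl RemoteNode {
    fn storage_at(&mut self, contract: u64, slot: u64) -> u64 {
        self.requests += 1;
        // Fake but deterministic value, just so there is something to return.
        contract.wrapping_mul(31).wrapping_add(slot)
    }
}

/// Read-through cache: ask the remote only for slots we have not seen yet.
struct CachedState {
    remote: RemoteNode,
    storage: HashMap<(u64, u64), u64>,
}

impl CachedState {
    fn new(remote: RemoteNode) -> Self {
        Self { remote, storage: HashMap::new() }
    }

    fn storage_at(&mut self, contract: u64, slot: u64) -> u64 {
        if let Some(value) = self.storage.get(&(contract, slot)).copied() {
            return value; // cache hit, no network involved
        }
        let value = self.remote.storage_at(contract, slot);
        self.storage.insert((contract, slot), value);
        value
    }
}

fn main() {
    let mut state = CachedState::new(RemoteNode { requests: 0 });
    // 100 simulated calls that all touch the same five slots.
    for _ in 0..100 {
        for slot in 0..5 {
            state.storage_at(1, slot);
        }
    }
    println!("remote requests: {}", state.remote.requests);
}
```

Pre-inserting values into the map before the first call is the same idea as the mocking techniques discussed later in the post.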

Let’s now implement the rest of the example:

#[tokio::main]

async fn main() -> Result<()> {

env_logger::init();

let provider = ProviderBuilder::new().on_http(std::env::var("ETH_RPC_URL").unwrap().parse()?);

let provider = Arc::new(provider);

let volumes = volumes(U256::ZERO, one_ether().div(U256::from(10)), 100);

let mut cache_db = init_cache_db(provider.clone());

let start = measure_start("revm_first");

let first_volume = volumes[0];

let calldata = quote_calldata(weth_addr(), usdc_addr(), first_volume, 3000);

let response = revm_call(me(), official_quoter_addr(), calldata, &mut cache_db)?;

let amount_out = decode_quote_response(response)?;

println!("{} WETH -> USDC {}", first_volume, amount_out);

measure_end(start);

let start = measure_start("revm");

for (index, volume) in volumes.into_iter().enumerate() {

let calldata = quote_calldata(weth_addr(), usdc_addr(), volume, 3000);

let response = revm_call(me(), official_quoter_addr(), calldata, &mut cache_db)?;

let amount_out = decode_quote_response(response)?;

if index % 20 == 0 {

println!("{} WETH -> USDC {}", volume, amount_out);

}

}

measure_end(start);

Ok(())

}

This should already look familiar. Instead of provider.call we use the previously defined revm_call method. Let’s see the benchmark results:

cargo run --bin revm --release

# Elapsed: 10.76ms for 'revm_first' (F)

# Elapsed: 935.84ms for 'revm_first' (A)

# Elapsed: 70.11ms for 'revm' (F)/(A)

Results are similar to Anvil. The first Alchemy call took around a second to fetch necessary data, and subsequent 99 iterations were ~30% faster. These results make a lot of sense. Anvil uses REVM under the hood. So we can save these ~30% by eliminating cross-process network overhead.

If we enable RUST_LOG=debug we can see the following output:

# ...

# DEBUG [alloy_rpc_client::call] sending request method=eth_getBalance id=31

# DEBUG [alloy_rpc_client::call] sending request method=eth_getCode id=32

# DEBUG [alloy_rpc_client::call] sending request method=eth_getStorageAt id=33

I.e., 33 RPC requests in total. It’s also reasonable since, regardless of the tool used for simulation, we have to fetch the same data from the origin node.
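Counting requests by eye gets tedious once you compare several strategies. Here is a small sketch that tallies requests per RPC method from captured log output. It assumes the log line format shown above (`sending request method=<name> id=<n>`), and the `count_rpc_methods` name is mine:

```rust
use std::collections::BTreeMap;

/// Count `sending request method=...` occurrences per RPC method.
fn count_rpc_methods(log: &str) -> BTreeMap<String, usize> {
    let mut counts = BTreeMap::new();
    for line in log.lines() {
        if !line.contains("sending request") {
            continue;
        }
        // The method name is the token that follows `method=`.
        if let Some(rest) = line.split("method=").nth(1) {
            if let Some(method) = rest.split_whitespace().next() {
                *counts.entry(method.to_string()).or_insert(0) += 1;
            }
        }
    }
    counts
}

fn main() {
    let log = "\
DEBUG [alloy_rpc_client::call] sending request method=eth_getBalance id=31
DEBUG [alloy_rpc_client::call] sending request method=eth_getCode id=32
DEBUG [alloy_rpc_client::call] sending request method=eth_getStorageAt id=33";
    for (method, count) in count_rpc_methods(log) {
        println!("{method}: {count}");
    }
}
```

You could feed it the redirected output of a `RUST_LOG=debug` run to get a per-method breakdown before and after each optimization.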

Is that it? Have we reached the limit of REVM performance and EVM simulations scalability?

Stay tuned. In the next section, we will go even deeper into REVM and EVM internals.

How to improve REVM performance?

Cacache all the bytecodes

In the RPC logs output, we can currently count eight eth_getCode calls. eth_getCode downloads the bytecode of a target smart contract. Bytecode is defined during a smart contract deployment. Even seemingly “mutable” smart contracts like USDC use a proxy pattern, which delegates calls to a new address by changing the implementation slot. Bytecode never changes.

In addition to eth_getCode I can also see eight instances of eth_getBalance and eth_getTransactionCount in the logs output. ETH balances and nonces are not relevant to our simulation. But REVM fetches them by default using basic_ref method of AlloyDB.

Here’s a helper method for improving the performance of REVM smart contracts initialization (in case nonces and ETH balance are not relevant):

pub async fn init_account(

address: Address,

cache_db: &mut AlloyCacheDB,

provider: Arc<RootProvider<Http<Client>>>,

) -> Result<()> {

let cache_key = format!("bytecode-{:?}", address);

let bytecode = match cacache::read(&cache_dir(), cache_key.clone()).await {

Ok(bytecode) => {

let bytecode = Bytes::from(bytecode);

Bytecode::new_raw(bytecode)

}

Err(_e) => {

let bytecode = provider.get_code_at(address).await?;

let bytecode_result = Bytecode::new_raw(bytecode.clone());

let bytecode = bytecode.to_vec();

cacache::write(&cache_dir(), cache_key, bytecode.clone()).await?;

bytecode_result

}

};

let code_hash = bytecode.hash_slow();

let acc_info = AccountInfo {

balance: U256::ZERO,

nonce: 0_u64,

code: Some(bytecode),

code_hash,

};

cache_db.insert_account_info(address, acc_info);

Ok(())

}

We use the cacache-rs crate to cache the bytecode of all the affected addresses. An unquestionable advantage of blockchain immutability is that you don’t have to worry about cache eviction policies. Cacache uses file storage, so you can aggressively cache all the necessary data.

We mock AccountInfo with zero values for balance and nonce. As we’ve discussed, in our case it does not affect the results. But if you start using caching and mocking, always make sure to compare the results of your simulations with a standard eth_call.

This approach further reduces number of RPC calls, but we can still do better.

I’ll provide a full working example with caching applied after all the fixes are completed.
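The caching pattern used by init_account boils down to "read from disk, fall back to the network, then persist". Here is a dependency-free sketch of that flow using plain files instead of cacache. The `cached_bytecode` function and the `fetch` closure (standing in for the `get_code_at` RPC call) are hypothetical names for illustration:

```rust
use std::fs;
use std::io;
use std::path::{Path, PathBuf};

/// One file per address, mirroring the `bytecode-<address>` cache key.
fn cache_path(dir: &Path, address: &str) -> PathBuf {
    dir.join(format!("bytecode-{}", address.to_lowercase()))
}

/// Return cached bytecode if present; otherwise call `fetch`, store the result, and return it.
fn cached_bytecode<F>(dir: &Path, address: &str, fetch: F) -> io::Result<Vec<u8>>
where
    F: FnOnce() -> io::Result<Vec<u8>>,
{
    let path = cache_path(dir, address);
    if let Ok(bytes) = fs::read(&path) {
        return Ok(bytes); // cache hit: no remote call
    }
    let bytes = fetch()?;
    fs::create_dir_all(dir)?;
    fs::write(&path, &bytes)?;
    Ok(bytes)
}

fn main() -> io::Result<()> {
    let dir = std::env::temp_dir().join(format!("bytecode-cache-demo-{}", std::process::id()));
    let _ = fs::remove_dir_all(&dir);
    let mut remote_calls = 0;
    for _ in 0..3 {
        let code = cached_bytecode(&dir, "0xc02aaa39b223fe8d0a0e5c4f27ead9083c756cc2", || {
            remote_calls += 1; // pretend this is an eth_getCode round trip
            Ok(vec![0x60, 0x60, 0x60, 0x40])
        })?;
        println!("got {} bytes", code.len());
    }
    println!("remote calls: {remote_calls}");
    fs::remove_dir_all(&dir)
}
```

There is deliberately no expiry logic here, in line with the point above that deployed bytecode does not need eviction.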

Mocking smart contracts bytecode

The next optimization will require us to dig into the source code of the QuoterV2 contract. In brief, it calls the swap method on the UniswapV3Pool contract that matches the provided token addresses and fee. swap does some maffs magic to calculate the amount out. An interesting takeaway is that swap calls only the balanceOf and transfer methods of the selected ERC20 tokens. It means that we don’t usually need the original ERC20 implementation to make it work. It’s even more impactful since we’re using the USDC token, which requires fetching two bytecodes (proxy and implementation) and the implementation slot.

Please be aware that mocking ERC20 bytecode will break simulations for tokens that use a custom fee-on-transfer mechanism. It will also make your gas estimates less accurate. But improving performance is usually a matter of tradeoffs, and I wanted to show different available techniques.

Here’s a helper method for instantiating smart contracts with mocked bytecodes:

pub async fn init_account_with_bytecode(

address: Address,

bytecode: Bytecode,

cache_db: &mut AlloyCacheDB,

) -> Result<()> {

let code_hash = bytecode.hash_slow();

let acc_info = AccountInfo {

balance: U256::ZERO,

nonce: 0_u64,

code: Some(bytecode),

code_hash,

};

cache_db.insert_account_info(address, acc_info);

Ok(())

}

We can further improve performance by mocking the pool’s balances of our ERC20 tokens. Why can we mock balances? Here’s the relevant Solidity code from the UniswapV3Pool#swap implementation:

if (zeroForOne) {

if (amount1 < 0) TransferHelper.safeTransfer(token1, recipient, uint256(-amount1));

uint256 balance0Before = balance0();

IUniswapV3SwapCallback(msg.sender).uniswapV3SwapCallback(amount0, amount1, data);

require(balance0Before.add(uint256(amount0)) <= balance0(), 'IIA');

} else {

if (amount0 < 0) TransferHelper.safeTransfer(token0, recipient, uint256(-amount0));

uint256 balance1Before = balance1();

IUniswapV3SwapCallback(msg.sender).uniswapV3SwapCallback(amount0, amount1, data);

require(balance1Before.add(uint256(amount1)) <= balance1(), 'IIA');

}

It compares the balance before and after the callback. This means that absolute values are not relevant. By mocking pool balances, we can eliminate two eth_getStorageAt calls.
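To illustrate why only the balance change matters, here is a small sketch of the same check in Rust. It uses `u128` as a stand-in for `uint256` and `checked_add` in place of SafeMath's reverting `add`. The "real" balance is a made-up number, and the function name is hypothetical:

```rust
/// Mirrors `require(balanceBefore.add(amountIn) <= balanceAfter, 'IIA')`.
/// `checked_add` plays the role of SafeMath's overflow revert.
fn input_was_paid(balance_before: u128, amount_in: u128, balance_after: u128) -> bool {
    match balance_before.checked_add(amount_in) {
        Some(required) => required <= balance_after,
        None => false,
    }
}

fn main() {
    let amount_in: u128 = 100_000_000_000_000_000; // 0.1 WETH in wei
    let made_up_real_balance: u128 = 12_345_000_000_000_000_000_000;
    let mocked_balance: u128 = u128::MAX / 2;
    for before in [made_up_real_balance, mocked_balance] {
        let paid = input_was_paid(before, amount_in, before + amount_in);
        let short = input_was_paid(before, amount_in, before + amount_in - 1);
        println!("before={before}: paid in full -> {paid}, one wei short -> {short}");
    }
}
```

The outcome is the same for both starting balances. The only requirement on a mocked value is enough headroom that the addition itself does not overflow.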

And that’s how you can mock a mapping storage slot:

pub async fn insert_mapping_storage_slot(

contract: Address,

slot: U256,

slot_address: Address,

value: U256,

cache_db: &mut AlloyCacheDB,

) -> Result<()> {

let hashed_balance_slot = keccak256((slot_address, slot).abi_encode());

cache_db.insert_account_storage(contract, hashed_balance_slot.into(), value)?;

Ok(())

}

It implements the Solidity storage layout convention: the storage slot of a mapping entry is the keccak256 hash of the ABI-encoded key followed by the mapping’s own slot number.
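For reference, this is what the 64-byte hash input looks like for an address-keyed mapping. The sketch below builds only the preimage with the standard library. The hashing step still needs a keccak256 implementation from a crate (such as the one used in the helper above), and the `mapping_slot_preimage` name and the `u64` slot type are simplifications of mine:

```rust
/// Build the 64-byte preimage `abi.encode(key, slot)` for an address-keyed mapping.
fn mapping_slot_preimage(key: [u8; 20], slot: u64) -> [u8; 64] {
    let mut out = [0u8; 64];
    // First word: the address key, left-padded with zeros to 32 bytes.
    out[12..32].copy_from_slice(&key);
    // Second word: the mapping's slot number as a big-endian 256-bit integer.
    out[56..64].copy_from_slice(&slot.to_be_bytes());
    out
}

fn main() {
    // Arbitrary example key; in the post this would be the pool address.
    let holder = [0x11u8; 20];
    let preimage = mapping_slot_preimage(holder, 0);
    let hex: String = preimage.iter().map(|b| format!("{b:02x}")).collect();
    println!("keccak256 input: 0x{hex}");
}
```

The key comes first and the slot second. Swapping the two produces a different hash, so the mocked value would land in a slot the contract never reads.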

When you start tweaking storage slots, slither becomes an indispensable tool. You can use the following command to display all the storage slots of a target smart contract:

slither-read-storage 0xc02aaa39b223fe8d0a0e5c4f27ead9083c756cc2

# INFO:Slither-read-storage:

# Name: name

# Type: string

# Slot: 0

# INFO:Slither-read-storage:

# Contract 'WETH9'

# WETH9.symbol with type string is located at slot: 1

# INFO:Slither-read-storage:

# Name: symbol

# Type: string

# Slot: 1

#...

I recommend asdf for easy switching between local Solidity versions. You can read my other tutorial for more info about it.

Another useful trick is to investigate the contents of your REVM database. You can do it like that:

dbg!(&cache_db.accounts[&weth_addr()]);

// [src/revm.rs:32:5] &cache_db.accounts[&weth_addr()] = DbAccount {

// info: AccountInfo {

// balance: 0x0000000000000000000000000000000000000000000287660c4c202d84e43435_U256,

// nonce: 1,

// code_hash: 0xd0a06b12ac47863b5c7be4185c2deaad1c61557033f56c7d4ea74429cbb25e23,

// code: Some(

// LegacyRaw(

// 0x6060604052600436106100af576...

// ...

// ),

// ),

// },

// account_state: None,

// storage: {

// 0xfc581e2e1d759407b26acc35e3d0231aeae791f35404c37eeed17c8cdf81bcfd_U256: 0x00000000000000000000000000000000000000000000042a2f89c0b26f60c419_U256,

// },

You can quickly peek at bytecodes and values of storage slots, so it’s invaluable for debugging sessions.

Measuring performance improvement

Here’s a working example with the above fixes applied:

#[tokio::main]

async fn main() -> Result<()> {

env_logger::init();

let provider = ProviderBuilder::new().on_http(std::env::var("ETH_RPC_URL").unwrap().parse()?);

let provider = Arc::new(provider);

let volumes = volumes(U256::ZERO, one_ether().div(U256::from(10)), 100);

let mut cache_db = init_cache_db(provider.clone());

init_account(official_quoter_addr(), &mut cache_db, provider.clone()).await?;

init_account(pool_3000_addr(), &mut cache_db, provider.clone()).await?;

let mocked_erc20 = include_str!("bytecode/generic_erc20.hex");

let mocked_erc20 = Bytes::from_str(mocked_erc20).unwrap();

let mocked_erc20 = Bytecode::new_raw(mocked_erc20);

init_account_with_bytecode(weth_addr(), mocked_erc20.clone(), &mut cache_db).await?;

init_account_with_bytecode(usdc_addr(), mocked_erc20.clone(), &mut cache_db).await?;

let mocked_balance = U256::MAX.div(U256::from(2));

insert_mapping_storage_slot(

weth_addr(),

U256::ZERO,

pool_3000_addr(),

mocked_balance,

&mut cache_db,

)

.await?;

insert_mapping_storage_slot(

usdc_addr(),

U256::ZERO,

pool_3000_addr(),

mocked_balance,

&mut cache_db,

)

.await?;

let start = measure_start("revm_cached_first");

let first_volume = volumes[0];

let calldata = quote_calldata(weth_addr(), usdc_addr(), first_volume, 3000);

let response = revm_call(me(), official_quoter_addr(), calldata, &mut cache_db)?;

let amount_out = decode_quote_response(response)?;

println!("{} WETH -> USDC {}", first_volume, amount_out);

measure_end(start);

let start = measure_start("revm_cached");

for (index, volume) in volumes.into_iter().enumerate() {

let calldata = quote_calldata(weth_addr(), usdc_addr(), volume, 3000);

let response = revm_call(me(), official_quoter_addr(), calldata, &mut cache_db)?;

let amount_out = decode_quote_response(response)?;

if index % 20 == 0 {

println!("{} WETH -> USDC {}", volume, amount_out);

}

}

measure_end(start);

Ok(())

}

Let’s run it:

cargo run --bin revm_cached --release

# Elapsed: 3.92ms for 'revm_cached_first' (F)

# Elapsed: 387.88ms for 'revm_cached_first' (A)

# Elapsed: 72.23ms for 'revm_cached' (F)/(A)

You can see that we’re down ~60% for initial data fetch for both Alchemy and full node. In the case of the full node, this translates to a 6ms improvement, but for Alchemy, we’ve shaved off over 500ms! As expected, execution time for the remaining 99 calls was not impacted.

RUST_LOG=debug indicates that we are now down to ten RPC requests compared to the starting 33:

# DEBUG [alloy_rpc_client::call] sending request method=eth_getStorageAt id=10

A side effect of mocking the USDC proxy token is that gas usage for our simulation method is down to 112662 from 123410, i.e., a ~10% drop. I suspect it’s because delegate calls are more gas expensive than direct ones.

You can check simulations gas usage by dbg!-ing result of evm.transact().unwrap(); in revm_call method.

Similar mocking/caching tricks are also possible with Anvil. It exposes custom RPC methods, e.g., anvil_setStorageAt and anvil_setCode. But if you find yourself tweaking storage slots and bytecodes, I’d recommend switching to REVM because of its better execution performance.

Our simulation is now significantly faster than when we started and uses ~90% fewer RPC calls.

But, there’s One More Thing.

Custom quoter smart contract for Uniswap V3 simulations

It’s high time to write some Solidity code. The official QuoterV2 is a great starting point for getting the output amounts. But I’ve noticed that its performance could be better. Let’s implement our own custom quoter to see if we can improve it:

pragma solidity ^0.8.0;

interface IUniV3Pool {

function swap(

address recipient,

bool zeroForOne,

int256 amountSpecified,

uint160 sqrtPriceLimitX96,

bytes calldata data

) external returns (int256 amount0, int256 amount1);

}

contract UniV3Quoter {

receive() external payable {}

function uniswapV3SwapCallback(

int256 amount0Delta,

int256 amount1Delta,

bytes calldata _data

) external {

revert(string(abi.encode(amount0Delta, amount1Delta)));

}

function getAmountOut(

address pool,

bool zeroForOne,

uint256 amountIn

) external {

uint160 sqrtPriceLimitX96 = (

zeroForOne

? 4295128749

: 1461446703485210103287273052203988822378723970341

);

IUniV3Pool(pool).swap(

address(1),

zeroForOne,

int256(amountIn),

sqrtPriceLimitX96,

""

);

}

}

This contract reimplements the core part of the logic from QuoterV2. It tries to execute a swap on the target UniswapV3Pool and implements the required uniswapV3SwapCallback callback method. After the calculations, the pool triggers this method with amount0Delta and amount1Delta representing the amounts in and out for the respective tokens. This is precisely the info we need, so we do a revert with this data encoded. Solidity bubbles the revert up to the caller, where we can retrieve it. Let’s see it in action.

We start by implementing the REVM helper method for reading data from reverted transactions:

pub fn revm_revert(

from: Address,

to: Address,

calldata: Bytes,

cache_db: &mut AlloyCacheDB,

) -> Result<Bytes> {

let mut evm = Evm::builder()

.with_db(cache_db)

.modify_tx_env(|tx| {

tx.caller = from;

tx.transact_to = TransactTo::Call(to);

tx.data = calldata;

tx.value = U256::from(0);

})

.build();

let ref_tx = evm.transact().unwrap();

let result = ref_tx.result;

let value = match result {

ExecutionResult::Revert { output: value, .. } => value,

result => {

return Err(anyhow!("expected a revert, got: {result:?}"));

}

};

Ok(value)

}

This is similar to what we’ve seen before in revm_call. But instead of returning bytes from a successfully executed call, we expect a revert and parse the data from it. Here’s a helper method for extracting the output amount that we need:

pub fn decode_get_amount_out_response(response: Bytes) -> Result<u128> {

let value = response.to_vec();

let last_64_bytes = &value[value.len() - 64..];

let (a, b) = match <(i128, i128)>::abi_decode(last_64_bytes, false) {

Ok((a, b)) => (a, b),

Err(e) => return Err(anyhow::anyhow!("'getAmountOut' decode failed: {:?}", e)),

};

let value_out = std::cmp::min(a, b);

let value_out = -value_out;

Ok(value_out as u128)

}

We take the last 64 bytes and decode them into two i128 integers. The smaller (negative) value is the amount out, so we negate and return it.
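To see why the last 64 bytes are the interesting part, here is a dependency-free sketch of the same decoding. It assumes the revert data follows the standard `Error(string)` layout (4-byte selector, offset word, length word, then the 64-byte payload) and that both deltas fit in 128 bits. All function names are hypothetical, and unlike the helper above it returns `None` instead of panicking on short input:

```rust
/// Read a 32-byte ABI word as i128, assuming the value fits in 128 bits.
fn word_to_i128(word: &[u8]) -> i128 {
    let mut low = [0u8; 16];
    low.copy_from_slice(&word[16..32]);
    i128::from_be_bytes(low)
}

/// Decode the two signed deltas from the tail and return the outgoing amount.
fn decode_amount_out(revert_data: &[u8]) -> Option<u128> {
    if revert_data.len() < 64 {
        return None; // too short to contain two words
    }
    let tail = &revert_data[revert_data.len() - 64..];
    let amount0 = word_to_i128(&tail[..32]);
    let amount1 = word_to_i128(&tail[32..]);
    let out = amount0.min(amount1);
    if out >= 0 {
        return None; // no token left the pool
    }
    Some(out.unsigned_abs())
}

/// Encode an i128 as a sign-extended 32-byte word (used to build sample data).
fn i128_to_word(value: i128) -> [u8; 32] {
    let mut word = if value < 0 { [0xff; 32] } else { [0u8; 32] };
    word[16..].copy_from_slice(&value.to_be_bytes());
    word
}

/// Sample revert data: Error(string) selector, offset, length, then the two deltas.
fn sample_revert(amount0: i128, amount1: i128) -> Vec<u8> {
    let mut data = vec![0x08, 0xc3, 0x79, 0xa0];
    data.extend_from_slice(&i128_to_word(0x20)); // offset of the string payload
    data.extend_from_slice(&i128_to_word(0x40)); // payload length: 64 bytes
    data.extend_from_slice(&i128_to_word(amount0));
    data.extend_from_slice(&i128_to_word(amount1));
    data
}

fn main() {
    // USDC (token0) leaves the pool, WETH (token1) enters it.
    let data = sample_revert(-294_930_306, 100_000_000_000_000_000);
    println!("revert data length: {}", data.len());
    println!("amount out: {:?}", decode_amount_out(&data));
}
```

The sample numbers reuse the 0.1 WETH quote from the first eth_call example, with the negative delta being the token that leaves the pool.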

Let’s finally see our working example:

#[tokio::main]

async fn main() -> Result<()> {
    env_logger::init();

    let provider = ProviderBuilder::new().on_http(std::env::var("ETH_RPC_URL").unwrap().parse()?);
    let provider = Arc::new(provider);

    let volumes = volumes(U256::ZERO, one_ether().div(U256::from(10)), 100);

    let mut cache_db = init_cache_db(provider.clone());

    init_account(me(), &mut cache_db, provider.clone()).await?;
    init_account(pool_3000_addr(), &mut cache_db, provider.clone()).await?;

    // Use the generic ERC20 bytecode for both tokens.
    let mocked_erc20 = include_str!("bytecode/generic_erc20.hex");
    let mocked_erc20 = Bytes::from_str(mocked_erc20).unwrap();
    let mocked_erc20 = Bytecode::new_raw(mocked_erc20);

    init_account_with_bytecode(weth_addr(), mocked_erc20.clone(), &mut cache_db).await?;
    init_account_with_bytecode(usdc_addr(), mocked_erc20.clone(), &mut cache_db).await?;

    // Mock the pool's balance of both tokens (mapping at storage slot 0).
    let mocked_balance = U256::MAX.div(U256::from(2));

    insert_mapping_storage_slot(
        weth_addr(),
        U256::ZERO,
        pool_3000_addr(),
        mocked_balance,
        &mut cache_db,
    )
    .await?;

    insert_mapping_storage_slot(
        usdc_addr(),
        U256::ZERO,
        pool_3000_addr(),
        mocked_balance,
        &mut cache_db,
    )
    .await?;

    // Insert the custom quoter bytecode instead of deploying the contract.
    let mocked_custom_quoter = include_str!("bytecode/uni_v3_quoter.hex");
    let mocked_custom_quoter = Bytes::from_str(mocked_custom_quoter).unwrap();
    let mocked_custom_quoter = Bytecode::new_raw(mocked_custom_quoter);

    init_account_with_bytecode(custom_quoter_addr(), mocked_custom_quoter, &mut cache_db).await?;

    // The first call is measured separately from the remaining ones.
    let start = measure_start("revm_quoter_first");

    let first_volume = volumes[0];

    let calldata =
        get_amount_out_calldata(pool_3000_addr(), weth_addr(), usdc_addr(), first_volume);

    let response = revm_revert(me(), custom_quoter_addr(), calldata, &mut cache_db)?;
    let amount_out = decode_get_amount_out_response(response)?;

    println!("{} WETH -> USDC {}", first_volume, amount_out);

    measure_end(start);

    let start = measure_start("revm_quoter");

    for (index, volume) in volumes.into_iter().enumerate() {
        let calldata = get_amount_out_calldata(pool_3000_addr(), weth_addr(), usdc_addr(), volume);
        let response = revm_revert(me(), custom_quoter_addr(), calldata, &mut cache_db)?;
        let amount_out = decode_get_amount_out_response(response)?;

        // Print only every 20th result to keep the output short.
        if index % 20 == 0 {
            println!("{} WETH -> USDC {}", volume, amount_out);
        }
    }

    measure_end(start);

    Ok(())
}

This is similar to the revm_cached example, so let me highlight just the differences. mocked_custom_quoter holds the bytecode of our UniV3Quoter contract, which we insert directly into the cache DB so that we don’t have to deploy it. The rest of the example is identical, except that we use revm_revert instead of revm_call and different methods for encoding and decoding calldata.
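Since the quote comes back through revm_revert, the caller has to decode raw bytes rather than a typed return value. Here’s a minimal sketch of that decoding step using only the standard library. It assumes the payload is a single ABI-encoded uint256 (32 bytes, big-endian) whose value fits into a u128; the real payload layout of the custom quoter is not shown in this post, and `decode_amount_out` is a hypothetical stand-in, not the repo’s `decode_get_amount_out_response`.

```rust
/// Decodes a payload that is ASSUMED to hold one ABI-encoded uint256.
/// Hypothetical helper for illustration only.
fn decode_amount_out(data: &[u8]) -> Result<u128, String> {
    if data.len() != 32 {
        return Err(format!("expected 32 bytes, got {}", data.len()));
    }
    // The upper 16 bytes must be zero for the value to fit into a u128.
    let (high, low) = data.split_at(16);
    if high.iter().any(|byte| *byte != 0) {
        return Err("amount does not fit into u128".to_string());
    }
    let mut buf = [0u8; 16];
    buf.copy_from_slice(low);
    Ok(u128::from_be_bytes(buf))
}

fn main() {
    // Build a 32-byte payload holding the value 45146998, left-padded with zeros.
    let mut payload = [0u8; 32];
    payload[16..].copy_from_slice(&45_146_998u128.to_be_bytes());

    println!("{:?}", decode_amount_out(&payload)); // prints Ok(45146998)
}
```

Rejecting payloads of an unexpected length, instead of silently truncating them, makes it easier to notice when a call failed for a different reason than the intended revert.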

Was this additional effort and custom smart contract worth it? Let’s see our benchmark:

cargo run --bin revm_quoter --release

# Elapsed: 3.59ms for 'revm_quoter_first' (F)

# Elapsed: 389.13ms for 'revm_quoter_first' (A)

# Elapsed: 15.81ms for 'revm_quoter'

The initial step of fetching data was not affected. But the subsequent 99 calls are ~4x faster, at ~15ms, down from ~70ms. I have no idea why this implementation is so much better. One difference is that the official quoter computes the pool address from the provided tokens, while our version takes it as a parameter. Gas usage is 90966 compared to 112662 in the previous example, i.e., a ~20% reduction, so it does not explain this drastic speed improvement. But I’m usually banging my head against the wall because something is unacceptably slow, not fast. So, I can live with that.
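To put the two numbers side by side, here’s a small sketch of the arithmetic. The gas and timing figures are the ones quoted above (the ~70ms baseline is the rounded value from the text); the helper names are just for illustration.

```rust
/// Relative reduction in percent, e.g. 100 -> 80 gives 20.0.
fn reduction_pct(before: f64, after: f64) -> f64 {
    (before - after) / before * 100.0
}

/// How many times faster `after` is compared to `before`.
fn speedup(before: f64, after: f64) -> f64 {
    before / after
}

fn main() {
    // Gas used by the official quoter vs the custom one.
    let gas = reduction_pct(112_662.0, 90_966.0);
    // Execution time of the 99 subsequent calls, in milliseconds.
    let time = speedup(70.0, 15.81);

    println!("gas reduction: {gas:.1}%"); // prints "gas reduction: 19.3%"
    println!("speedup: {time:.1}x"); // prints "speedup: 4.4x"
}
```

A ~19% drop in gas next to a ~4.4x drop in wall-clock time shows that gas alone is a poor predictor of simulation speed here.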

Another significant advantage of our custom quoter is that, contrary to the official one, it works with UniswapV3 clones.

Unlike the previous optimization methods, this one is specific to UniswapV3. But if you’re using smart contracts for simulations, then poking through the source code to identify the core of the business logic and rewriting it with, e.g., security checks omitted, might yield significant improvements.

Summary of EVM simulation performance improvements

I don’t know if you remember, but I promised to calculate the Uniswap V3 arbitrage. So far, we’ve been calculating output amounts only for a single pool. Let’s now quickly recap the performance improvements we’ve applied along the way; the final example will come soon after.

Let’s first recap the execution time and RPC calls for the initial simulation, which fetches the necessary data ((F) is the local full node, (A) is the 3rd party Alchemy node):

| | RPC calls | Execution time |
|---|---|---|
| eth_call_one (F) | 1 | 9ms |
| eth_call_one (A) | 1 | 52ms |
| anvil_first (F) | 36 | 10ms |
| anvil_first (A) | 36 | 759ms |
| revm_first (F) | 33 | 10ms |
| revm_cached_first (F) | 10 | 4ms |
| revm_cached_first (A) | 10 | 387ms |

Now let’s see metrics for the subsequent 99 calls after the initial data seed:

| | RPC calls | Execution time |
|---|---|---|
| eth_call (F) | 99 | 87ms |
| eth_call (A) | 99 | 4280ms |
| anvil (A)/(F) | 0 | 109ms |
| revm (A)/(F) | 0 | 65ms |
| revm_quoter (A)/(F) | 0 | 16ms |

And now, to get the complete overview, let’s see these two tables combined:

| | RPC calls | Execution time |
|---|---|---|
| eth_call (F) | 100 | 89ms |
| eth_call (A) | 100 | 4390ms |
| anvil (F) | 36 | 120ms |
| anvil (A) | 36 | 868ms |
| revm (F) | 33 | 80ms |
| revm (A) | 33 | 1010ms |
| revm_cached (F) | 10 | 76ms |
| revm_cached (A) | 10 | 459ms |
| revm_quoter (F) | 10 | 19ms |
| revm_quoter (A) | 10 | 405ms |

You can see that for the Alchemy node, the worst case for 100 simulations is over 4 seconds and 100 RPC calls. We’ve managed to reduce that to 10 RPC calls and ~400ms, i.e., a ~10x improvement in both execution time and the number of RPC calls.

For the local full node, we managed to reduce execution time from 90ms to 19ms and RPC calls from 100 to 10.
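If you want to compare every strategy against the plain eth_call baseline, a sketch like the one below does it for the Alchemy (A) rows of the combined table. The numbers are copied from the table; the `Row` struct and `speedup_vs` helper are names made up for this example.

```rust
/// One row of the combined table: strategy name, RPC calls, total time in ms.
struct Row {
    name: &'static str,
    rpc_calls: u32,
    millis: f64,
}

/// Speedup of `row` relative to `baseline` (values above 1.0 mean faster).
fn speedup_vs(baseline: &Row, row: &Row) -> f64 {
    baseline.millis / row.millis
}

fn main() {
    // Alchemy (A) rows copied from the combined table.
    let rows = [
        Row { name: "eth_call", rpc_calls: 100, millis: 4390.0 },
        Row { name: "anvil", rpc_calls: 36, millis: 868.0 },
        Row { name: "revm", rpc_calls: 33, millis: 1010.0 },
        Row { name: "revm_cached", rpc_calls: 10, millis: 459.0 },
        Row { name: "revm_quoter", rpc_calls: 10, millis: 405.0 },
    ];

    let baseline = &rows[0];
    for row in &rows {
        println!(
            "{:<12} {:>3} RPC calls, {:>5.1}x vs eth_call",
            row.name,
            row.rpc_calls,
            speedup_vs(baseline, row)
        );
    }
}
```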

You can run revm_validate to check that despite all the revm/mocking/caching/quoter shenanigans, simulation results are identical to a standard eth_call.

cargo run --bin revm_validate --release

# ...

# 15000000000000000 WETH -> USDC REVM 45146998 ETH_CALL 45146998

# 14000000000000000 WETH -> USDC REVM 42137199 ETH_CALL 42137199

# 13000000000000000 WETH -> USDC REVM 39127399 ETH_CALL 39127399

# ...
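The check itself boils down to comparing two result lists pairwise. Here’s a minimal, standalone sketch of that comparison, fed with the three sample rows from the output above. The `mismatches` helper is hypothetical and is not taken from the revm_validate source.

```rust
/// Returns the input volumes for which the two simulations disagree.
/// Each entry is (volume in wei, REVM result, eth_call result).
fn mismatches(results: &[(u128, u128, u128)]) -> Vec<u128> {
    results
        .iter()
        .filter(|(_, revm, eth_call)| revm != eth_call)
        .map(|(volume, _, _)| *volume)
        .collect()
}

fn main() {
    // Sample rows copied from the revm_validate output.
    let results = [
        (15_000_000_000_000_000u128, 45_146_998u128, 45_146_998u128),
        (14_000_000_000_000_000, 42_137_199, 42_137_199),
        (13_000_000_000_000_000, 39_127_399, 39_127_399),
    ];

    let diff = mismatches(&results);
    assert!(diff.is_empty(), "results differ for volumes: {diff:?}");
    println!("all {} results match", results.len()); // prints "all 3 results match"
}
```

Running a check like this after every change to the mocks or the quoter is cheap insurance against a simulation that is fast but silently wrong.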

How to calculate arbitrage between two UniswapV3 pairs?

Finally!!! Here’s the promised Uniswap V3 arbitrage detection that uses all the above techniques:

#[tokio::main]
async fn main() -> Result<()> {
    env_logger::init();

    let provider = ProviderBuilder::new().on_http(std::env::var("ETH_RPC_URL").unwrap().parse()?);
    let provider = Arc::new(provider);

    let volumes = volumes(U256::ZERO, one_ether().div(U256::from(10)), 100);

    let mut cache_db = init_cache_db(provider.clone());

    // This time we need both pools: 0.3% fee and 0.05% fee.
    init_account(me(), &mut cache_db, provider.clone()).await?;
    init_account(pool_3000_addr(), &mut cache_db, provider.clone()).await?;
    init_account(pool_500_addr(), &mut cache_db, provider.clone()).await?;

    let mocked_erc20 = include_str!("bytecode/generic_erc20.hex");
    let mocked_erc20 = Bytes::from_str(mocked_erc20).unwrap();
    let mocked_erc20 = Bytecode::new_raw(mocked_erc20);

    init_account_with_bytecode(weth_addr(), mocked_erc20.clone(), &mut cache_db).await?;
    init_account_with_bytecode(usdc_addr(), mocked_erc20.clone(), &mut cache_db).await?;

    // Mock token balances of both pools (mapping at storage slot 0).
    let mocked_balance = U256::MAX.div(U256::from(2));

    insert_mapping_storage_slot(
        weth_addr(),
        U256::ZERO,
        pool_3000_addr(),
        mocked_balance,
        &mut cache_db,
    )
    .await?;

    insert_mapping_storage_slot(
        usdc_addr(),
        U256::ZERO,
        pool_3000_addr(),
        mocked_balance,
        &mut cache_db,
    )
    .await?;

    insert_mapping_storage_slot(
        weth_addr(),
        U256::ZERO,
        pool_500_addr(),
        mocked_balance,
        &mut cache_db,
    )
    .await?;

    insert_mapping_storage_slot(
        usdc_addr(),
        U256::ZERO,
        pool_500_addr(),
        mocked_balance,
        &mut cache_db,
    )
    .await?;

    let mocked_custom_quoter = include_str!("bytecode/uni_v3_quoter.hex");
    let mocked_custom_quoter = Bytes::from_str(mocked_custom_quoter).unwrap();
    let mocked_custom_quoter = Bytecode::new_raw(mocked_custom_quoter);

    init_account_with_bytecode(custom_quoter_addr(), mocked_custom_quoter, &mut cache_db).await?;

    for volume in volumes.into_iter() {
        // First leg: WETH -> USDC on the 0.05% pool.
        let calldata = get_amount_out_calldata(pool_500_addr(), weth_addr(), usdc_addr(), volume);
        let response = revm_revert(me(), custom_quoter_addr(), calldata, &mut cache_db)?;
        let usdc_amount_out = decode_get_amount_out_response(response)?;

        // Second leg: USDC -> WETH on the 0.3% pool.
        let calldata = get_amount_out_calldata(
            pool_3000_addr(),
            usdc_addr(),
            weth_addr(),
            U256::from(usdc_amount_out),
        );
        let response = revm_revert(me(), custom_quoter_addr(), calldata, &mut cache_db)?;
        let weth_amount_out = decode_get_amount_out_response(response)?;

        println!(
            "{} WETH -> USDC {} -> WETH {}",
            volume, usdc_amount_out, weth_amount_out
        );

        // Ending up with more WETH than we started with means a (gross) profit.
        let weth_amount_out = U256::from(weth_amount_out);
        if weth_amount_out > volume {
            let profit = weth_amount_out - volume;
            println!("WETH profit: {}", profit);
        } else {
            println!("No profit.");
        }
    }

    Ok(())
}

Most of the time there’s no arbitrage opportunity, but occasionally, when testing, I’ve seen results like this:

cargo run --bin revm_arbitrage --release

# 100000000000000 WETH -> USDC 291455 -> WETH 100540477154459

# Profit: 540477154459 WETH

It means that there’s a minimal arbitrage profit, smaller than the gas cost needed to make the two-legged swap. If you’re into the MEV game, it should not be a surprise. Popular Mainnet pools are constantly arbitraged down to a single cent of profit by the mempool-aware bots.
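To make "smaller than the gas cost" concrete, here’s a back-of-the-envelope sketch. The gross profit comes from the output above. The gas usage (~200k for both swaps) and the gas price (10 gwei) are assumptions picked for illustration, not measured values.

```rust
/// Net result in wei after paying for gas; a negative value means a loss.
/// Plain arithmetic is fine for these magnitudes; production code should use checked math.
fn net_profit_wei(gross_profit_wei: u128, gas_used: u128, gas_price_wei: u128) -> i128 {
    gross_profit_wei as i128 - (gas_used * gas_price_wei) as i128
}

fn main() {
    // WETH out minus WETH in, taken from the sample output.
    let gross = 100_540_477_154_459u128 - 100_000_000_000_000u128;

    // ASSUMPTIONS for illustration only.
    let gas_used = 200_000u128;
    let gas_price_wei = 10_000_000_000u128; // 10 gwei

    let net = net_profit_wei(gross, gas_used, gas_price_wei);
    println!("gross: {gross} wei, net: {net} wei");
    // prints "gross: 540477154459 wei, net: -1999459522845541 wei"
}
```

Under these assumptions, the gas bill is several thousand times larger than the gross profit, so the trade is not worth submitting.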

All this effort just to learn that there’s no real profit to be made. I hope you don’t hate me now.

You can improve this example by checking which market has a better buy price and calculating arbitrage whenever any of the market states changes based on the event log stream. But this is a story for another blog post.
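As a rough idea of the first improvement, the sketch below tries both directions for every volume and keeps the most profitable combination. To stay self-contained, it replaces the REVM quoter calls with a toy constant-product formula, which is NOT Uniswap V3 math. The pool reserves, fees, and all names (`toy_quote`, `Route`, `best_route`) are made up for this example.

```rust
const WETH: u128 = 1_000_000_000_000_000_000; // 1 WETH in wei
const USDC: u128 = 1_000_000; // 1 USDC in base units

/// Toy constant-product quote with a fee in basis points.
/// A stand-in for the REVM quoter call, NOT Uniswap V3 math.
fn toy_quote(reserve_in: u128, reserve_out: u128, fee_bps: u128, amount_in: u128) -> u128 {
    let after_fee = amount_in * (10_000 - fee_bps) / 10_000;
    after_fee * reserve_out / (reserve_in + after_fee)
}

/// Which pool is used for the first leg (WETH -> USDC).
#[derive(Debug, Clone, Copy, PartialEq)]
enum Route {
    AThenB,
    BThenA,
}

type Quote<'a> = &'a dyn Fn(u128) -> u128;

/// Tries both routes for every volume; returns (route, volume, profit) of the best one.
fn best_route(
    volumes: &[u128],
    weth_to_usdc: [Quote<'_>; 2],
    usdc_to_weth: [Quote<'_>; 2],
) -> Option<(Route, u128, u128)> {
    let mut best: Option<(Route, u128, u128)> = None;
    for &volume in volumes {
        for (route, first, second) in [(Route::AThenB, 0usize, 1usize), (Route::BThenA, 1, 0)] {
            let usdc = weth_to_usdc[first](volume);
            let weth = usdc_to_weth[second](usdc);
            if weth > volume {
                let profit = weth - volume;
                if best.map_or(true, |(_, _, p)| profit > p) {
                    best = Some((route, volume, profit));
                }
            }
        }
    }
    best
}

fn main() {
    // Pool A prices WETH at ~3000 USDC (0.05% fee), pool B at ~3100 USDC (0.3% fee).
    let a_sell: Quote = &|x: u128| toy_quote(1_000 * WETH, 3_000_000 * USDC, 5, x);
    let a_buy: Quote = &|x: u128| toy_quote(3_000_000 * USDC, 1_000 * WETH, 5, x);
    let b_sell: Quote = &|x: u128| toy_quote(1_000 * WETH, 3_100_000 * USDC, 30, x);
    let b_buy: Quote = &|x: u128| toy_quote(3_100_000 * USDC, 1_000 * WETH, 30, x);

    let volumes = [WETH / 10, WETH / 2, WETH];
    let best = best_route(&volumes, [a_sell, b_sell], [a_buy, b_buy]);
    println!("{best:?}");
}
```

In a real setup, the four closures would wrap the quoter calls for each pool and direction, and the whole search would be re-run whenever a relevant pool event arrives.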

Summary

You’ve made it to the end of the longest post in my 5+ years of writing. Congrats!

We’ve demonstrated that REVM allows integrating a nontrivial DeFi protocol with only a superficial understanding of its inner workings. I still have no idea how Uniswap V3 “tick ranges” work. But using the described calculations, I was able to score some arbitrage profits from UniV3 pools.

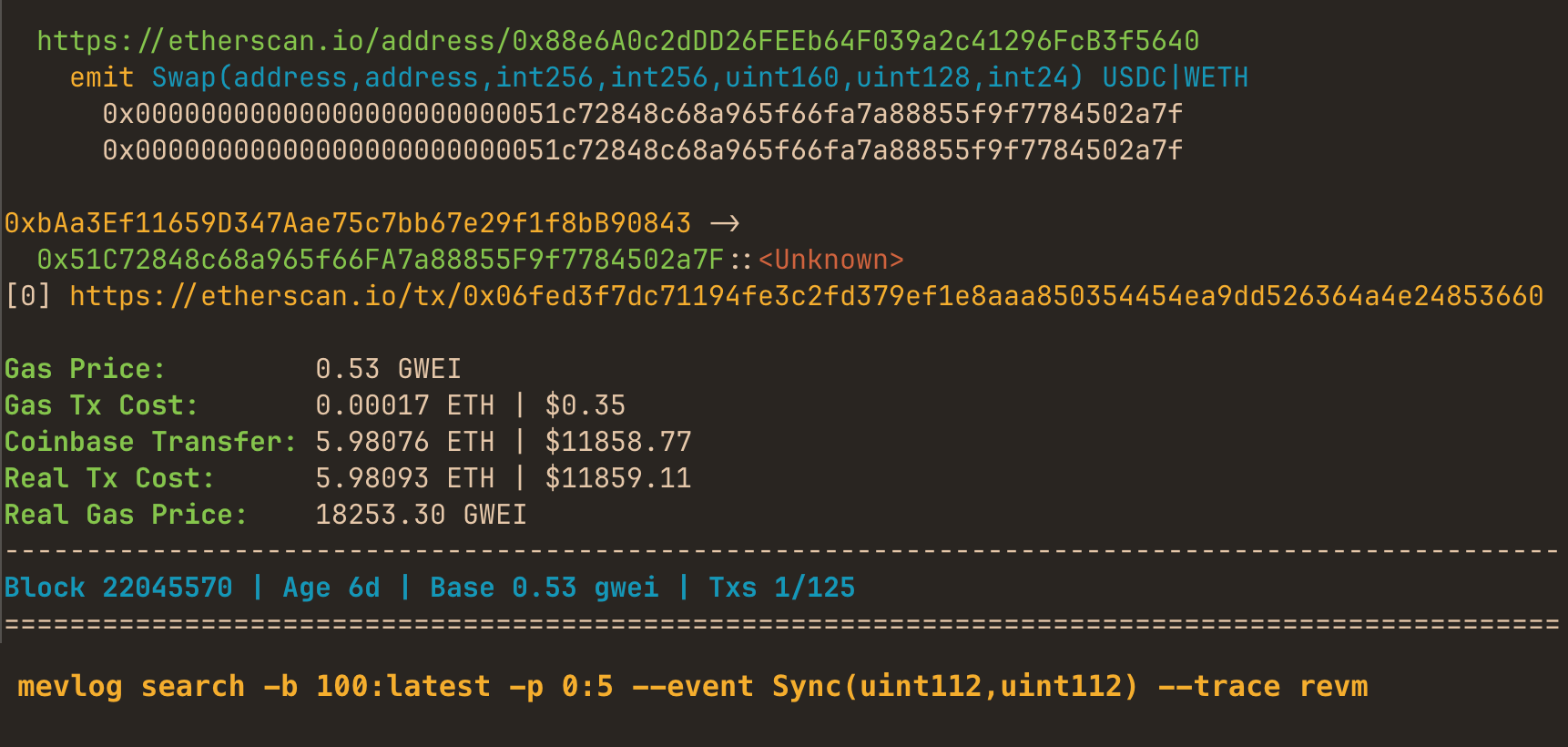

[Update] If you’re interested in Revm and MEV, check this article showcasing mevlog-rs, a Rust CLI tool for querying the blockchain and discovering long-tail MEV opportunities.

The examples are based on what I’m using in production, so I’ve verified that these techniques work. But, I’m just starting to explore the lower layers of the blockchain ecosystem. So corrections and PRs to the GitHub repo with examples are very welcome.