Scaling issues are great because it means that you have traffic on your website. Before you decide that “Rails doesn’t scale!” and start a rewrite to a tech stack with potentially more performance, maybe you should double check queries that your PostgreSQL database executes. Below I describe some tools and techniques you can use to track down, diagnose and optimize slow Active Record SQL query execution.

Problem with Active Record

I’ve started my programming career developing Rails apps. Thanks to Active Record I could start getting stuff done right away.

Active Record lets you write fairly complex database queries without understanding the underlying SQL. It is its best feature and the greatest sin at the same time.

Below I present a list of tools and techniques I use to tackle database queries in Rails apps. Getting familiar with them can help you understand SQL on a much lower level than an Active Record APIs.

Track down performance bottlenecks

I usually use Rails PG Extras and PgHero to track down query bottlenecks in my applications.

Rails PG Extras

Rails PG Extras is a Ruby gem that I’ve recently published. It provides the same features as Heroku PG Extras plugin, for Rails apps that are not using the default Heroku PostgreSQL plugin. You can read this blog post for a detailed step-by-step tutorial on how to use PGExtras to identify and fix PostgreSQL issues.

PgHero

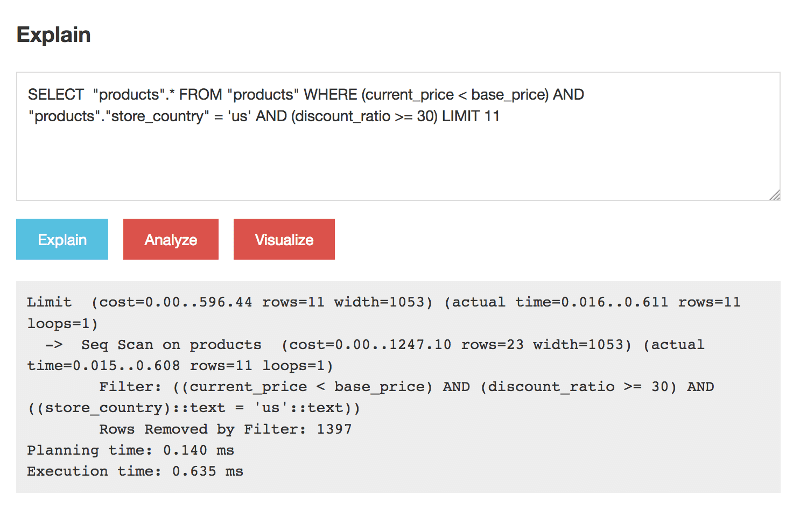

You can hook up PgHero to your production application to gain powerful insights into your database queries performance. One downside is that it does not indicate from which places in the Ruby code the queries originate.

A great feature is that it automatically warns you about slow queries. What’s more, it lets you run EXPLAIN and ANALYZE straight from the interface without having to access the database CLI.

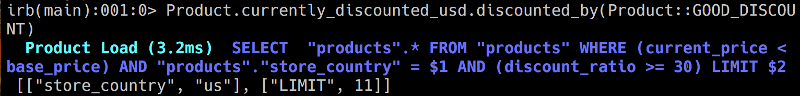

One trick you could use here is to enable showing SQL logs in the Rails console:

ActiveRecord::Base.logger = Logger.new(STDOUT)

and later copy the resulting SQL logs (you need to change $1 etc. into argument values manually) to Pg Hero interface:

Alternatively, you could use to_sql method to translate Active Record query into an underlying SQL. An advantage of automatic logs is that whenever Ruby code executed in the console runs a database query you can see an SQL code without explicitly generating it.

EXPLAIN ANALYZE in Rails

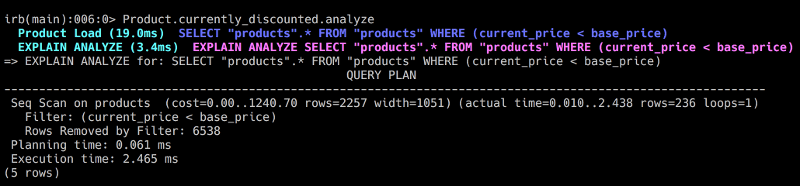

Rails also have a built-in explain method you can run on an AR query object. ANALYZE is better because it performs the actual query and provides insight into real execution time, not hypothetical cost. There is a pull request adding more options to explain method and ANALYZE is one of them.

If you would like to use ANALYZE from Rails console right now, you can check out my new gem: activerecord-analyze. It is a bit rough and experimental but works:

Query visualizer

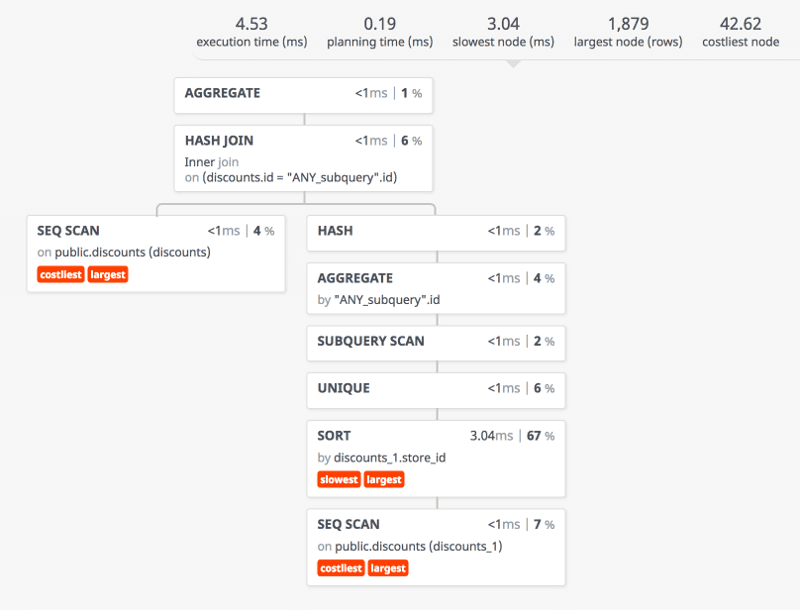

With PgHero you can even go all pro and visualize a query plan using a visualizer tool:

Explaining the EXPLAIN ANALYZE

One thing you need to watch out for in EXPLAIN ANALYZE reports are Seq Scans. Every such occurrence is potentially a big no-no because it means that Postgres needs to scan a table row by row to find matching entries. Such query will get slower with each new record added to the table and could be a serious problem in case of larger tables. You can fix it by adding an appropriate index.

What is “appropriate” might not be obvious at first because the way PostgreSQL handles indexes is quite complex. If you are not sure which index to add the best solution is to experiment with different settings and run EXPLAIN ANALYZE after each change to compare the actual query costs.

Remember that you cannot go all in for indexing every single attribute because every index slows down insert operations. You can also check out my other blog post for a more detailed introduction to using EXPLAIN ANALYZE in PostgreSQL.

Check database queries optimization results

Once you track down and optimize the queries you should check the actual results.

The best approach would be to perform benchmarks on a production database. The problem is that you probably don’t want to DDOS your production website. Also, remote benchmarks might not be reliable because of varying network conditions. In that case, the best solution could be to perform benchmarks on a local computer, with settings resembling a real production site.

Copy production database

You can copy your production database locally. How to do it exactly depends on your setup. If you use PostgreSQL on Heroku you should check out this article.

Seed local database to mimic production

It is highly possible that due to legal and privacy issues you will not be able to download and copy a real production data to work with it locally.

It that case you could use Factory Bot to seed your database to a size approximating a production. If your factories are capable of generating a unique data all you need to do is to run them in a loop in order to seed a database.

Use Siege for local benchmarks

Once you get your database setup ready you can start benchmarking. Remember to run your Rails app in a production environment, because in development many optimizations are disabled.

Siege is a great and simple to use benchmarking tool. It gives you a detailed report on how a specific endpoint performs:

Transactions: 32782 hits

Availability: 100.00 %

Elapsed time: 59.94 secs

Data transferred: 5186.03 MB

Response time: 0.01 secs

Transaction rate: 546.91 trans/sec

Throughput: 86.52 MB/sec

Concurrency: 19.97

Successful transactions: 32782

Failed transactions: 0

Longest transaction: 0.16

Shortest transaction: 0.00Summary

Although database level optimization techniques have its limits they can take you a long way in improving the performance of your application. I hope that techniques I described will help you get more insight about your database queries. Let me know if you know some other tools useful when working with SQL in Rails.

You can check out my other blog posts about optimizing Rails queries:

How to Improve ActiveRecord Query Performance with Subquery Caching

Optimize Rails Performance with Redis Caching and Rack Middleware