PostgreSQL query planner is full of surprises, so a common-sense approach to writing performant queries is sometimes misleading. In this blog post, I’ll describe examples of optimizing seemingly obvious queries with the help of EXPLAIN ANALYZE and Postgres metadata analysis.

All the test queries were performed on PostgreSQL 12 on a table seeded to one million objects. If you’d like to replicate similar behavior with a smaller development dataset, you’ll have to discourage the use of sequential scans by running:

SET enable_seqscan TO off;This tutorial assumes some basic familiarity with reading the EXPLAIN ANALYZE reports. You can check out this blog post for an introduction to the topic.

1. Searching by a function call

It is common to search by values modified using a PostgreSQL function call. Let’s have a look at a query plan for searching a column by its lowercased value:

EXPLAIN (ANALYZE, BUFFERS)

SELECT * FROM users WHERE lower(email) = '[email protected]' ;

-> Parallel Seq Scan on users

Filter: (lower((email)::text) = '[email protected]'::text)

Rows Removed by Filter: 333667

Buffers: shared hit=1248 read=41725

Execution Time: 180.813 msEXPLAIN ANALYZE output has been truncated for brevity.The report indicates that the query planner performs an inefficient Seq Scan and Filter operations to execute a query. Thanks to adding BUFFERS option to our query, we can see that the database had to fetch over 40k data pages using a slow disk read operations, and only ~1k of them were cached in memory.

A query searching by a function cannot use a standard index. So you’d need to add a custom index for it to be efficient. But, adding custom indexes on a per-query basis is not a very scalable approach. You might find yourself with multiple redundant indexes that significantly slow down the write operations.

If upper and lower case letters do not matter, you could run a migration to lowercase all values and make the standard indexes work. However, if you still want to store uppercase characters in your database, you can consider using the CITEXT extension. It creates a case-insensitive column that can be efficiently searched without creating custom indexes.

EXPLAIN (ANALYZE, BUFFERS)

SELECT * FROM users WHERE email = '[email protected]' ;

Index Scan using index_users_on_email on users

Index Cond: (email = '[email protected]'::citext)

Buffers: shared hit=3

Execution Time: 0.128 msThe 180ms execution time of the original query might not have seemed like much. But we’ve just managed to speed it up by orders of magnitude down to below 1ms! The new solution will remain performant regardless of the data size, and the query fetches only three buffer blocks from the in-memory cache. Also, by leveraging extension, we can avoid adding additional indexes.

2. Searching by a pattern

LIKE and ILIKE queries are often used, but it’s not always obvious that the additional setup is necessary to execute them efficiently. Let’s see how a sample query behaves with a standard B-tree index:

EXPLAIN ANALYZE SELECT * FROM users WHERE email LIKE '%@example.com';

-> Parallel Seq Scan on users

Filter: ((email)::text ~~ '%@example.com'::text)

Execution Time: 111.263 msLike before, query planner cannot leverage index and has to resort to inefficient Seq Scan and Filter.

To get this query up to speed, we’ll have to add a custom extension and index type. Run the following commands:

CREATE EXTENSION IF NOT EXISTS btree_gin;

CREATE EXTENSION IF NOT EXISTS pg_trgm;

CREATE INDEX index_users_on_email_gin ON users USING gin (email gin_trgm_ops);Let’s rerun our query:

EXPLAIN ANALYZE SELECT * FROM users WHERE email LIKE '%@example.com';

Bitmap Heap Scan on users

Recheck Cond: ((email)::text ~~ '%@example.com'::text)

-> Bitmap Index Scan on index_users_on_email_gin

Index Cond: ((email)::text ~~ '%@example.com'::text)

Execution Time: 0.206 msAnd now it’s executing below 1ms. Remember that gin indexes are slower to update than the standard ones. So you should avoid adding them to a frequently updated table.

3. Ordering by NULLS LAST

Unless a column is configured as NOT NULL, you have to be careful when using it for sorting. A default ASC order will always return NULL values at the end of the results. But what if you want to sort potentially NULL strings by descending order but keep all the NULLs at the end? An initial approach might be to leverage NULLS LAST custom sorting order. Let’s have a closer look at EXPLAIN ANALYZE outputs that such queries would generate:

EXPLAIN ANALYZE

SELECT * FROM users

ORDER BY email DESC LIMIT 10;

-> Index Scan Backward using index_users_on_email on users

Execution Time: 0.641 msWe can see an Index Scan Backward entry, so our query correctly uses an index, and it was executed almost instantly. However, the results of this query will always start from NULL values. So if we want to move them to the end of the response we could rewrite it like that:

EXPLAIN ANALYZE

SELECT * FROM users

ORDER BY email DESC NULLS LAST LIMIT 10;

-> Sort (cost=41482.85..42525.55 rows=417083 width=41) (actual time=5572.524..5572.589 rows=8 loops=3)

Sort Key: email DESC NULLS LAST

Sort Method: top-N heapsort Memory: 26kB

-> Parallel Seq Scan on users (cost=0.00..32469.83 rows=417083 width=41) (actual time=0.037..2793.011 rows=333667 loops=3)

Execution Time: 5578.725 msBut as you can see, the query now executes for over FIVE SECONDS. Although the email column is indexed, a standard index cannot be used for sorting with NULLS LAST option. Instead, the database has to sort the WHOLE table in memory or fall back to an even slower on-disk sorting. Not only does it kill the performance, but it could also significantly increase the overall memory usage.

You could fix it by adding a custom NULLS LAST index as described in the PostgreSQL docs. But, just like in the case of searching by a function, adding custom indexes on a per-query basis is a bad practice.

A simple way to get the desired result is to write two queries instead. The first one would fetch the sorted non-null values. If the result does not satisfy the LIMIT, another query fetches remaining rows with NULL values.

SELECT *

FROM users ORDER BY email DESC

WHERE email IS NOT NULL LIMIT 10;

SELECT *

FROM users

WHERE email IS NULL LIMIT 10;4. Bloated null_indexes

As we’ve determined in the previous examples, adding a correct index can significantly improve a query execution time. However, overusing indexes can dramatically bloat the size of your database and increase maintenance memory usage. Additionally, indexes have to be updated on each write operation. So limiting their number and scope is usually a good approach.

Your database likely has a few so-called (by me) “NULL indexes”. These are the indexes that contain a high ratio of NULL values.

Depending on business logic, a NULL value might be used for searching, so these indexes are correct. But, usually, you wouldn’t write a query searching for rows containing a specific NULL value. If that is the case, recreating the index to exclude NULLs would reduce the disk usage and limit how often it has to be updated.

You can run the following commands to drop and rebuild an index to only include NOT NULL rows:

DROP INDEX CONCURRENTLY users_reset_token_ix;

CREATE INDEX CONCURRENTLY users_reset_token_ix ON users(reset_token)

WHERE reset_token IS NOT NULL;It’s worth noting that this index can still be used by queries explicitly searching for all NOT NULL values.

You can check out a PG Extras null_indexes method (or execute its raw SQL source code) to see if your database has many indexes that could be trimmed down and what are the expected disk space savings:

index | index_size | unique | indexed_column | null_frac | expected_saving

--------------------+------------+--------+----------------+-----------+-----------------

users_reset_token | 1445 MB | t | reset_token | 97.00% | 1401 MB

plan_cancelled_at | 539 MB | f | cancelled_at | 8.30% | 44 MB

users_email | 18 MB | t | email | 28.67% | 5160 kBnull_indexes method.You can read more about optimizing PostgreSQL performance with PG Extras in this blog post.

5. Update transaction scope

A usually recommended practice is to keep the number of database commits to a minimum. It means that wrapping multiple update queries into a single transaction should improve the write performance.

For many common scenarios, this is an optimal strategy. But, using a single transaction for a massive data update could cause a problem with so-called locks. So let’s see what’s the impact of updating over 100k rows in a single transaction:

UPDATE messages SET status = 'archived';While the transaction is still pending, you can investigate what locks it generated by using the PG Extras locks method (or executing its raw SQL source code).

You might not have a large enough dataset to manually execute the locks SQL while the update transaction is still running. In that case, you can fake slow execution time inside a single transaction like that:

BEGIN;

UPDATE messages SET status = 'archived';

SELECT pg_sleep(15);

COMMIT;Now, running the locks SQL should return similar output:

relname | mode | query_snippet

-------------------------------------------------------------------------------

messages | RowExclusiveLock | UPDATE "messages" SET "status" = $1

index_messages_on_status | RowExclusiveLock | UPDATE "messages" SET "status" = $1

index_messages_on_text | RowExclusiveLock | UPDATE "messages" SET "status" = $1

index_messages_on_time | RowExclusiveLock | UPDATE "messages" SET "status" = $1

...You can see that the update operation acquires a RowExclusiveLock on the table rows and locks corresponding indexes. It means that any other process that tries to update the same rows during the long single-transaction update process has to wait for it to complete. As a result, a large-scale update performed by a background worker process could potentially timeout web server processes and cause a user-facing app outage.

To avoid this issue, you could add batching to the update operation, using the similar SQL:

UPDATE messages SET status = 'archived'

WHERE id IN

(SELECT ID FROM messages ORDER BY ID LIMIT 10000 OFFSET 0);

UPDATE messages SET status = 'archived'

WHERE id IN

(SELECT ID FROM messages ORDER BY ID LIMIT 10000 OFFSET 10000);

UPDATE messages SET status = 'archived'

WHERE id IN

(SELECT ID FROM messages ORDER BY ID LIMIT 10000 OFFSET 20000);

...The above example updates rows in batches of 10k at a time. The whole operation will likely take longer than doing it inside a single transaction. But, each update step will be quick to commit database changes, so other processes would not get stuck.

If you suspect that your app’s performance is degraded by locking transactions, you can use a combination of locks and blocking PG Extras methods to monitor for long-lasting table locks.

Summary

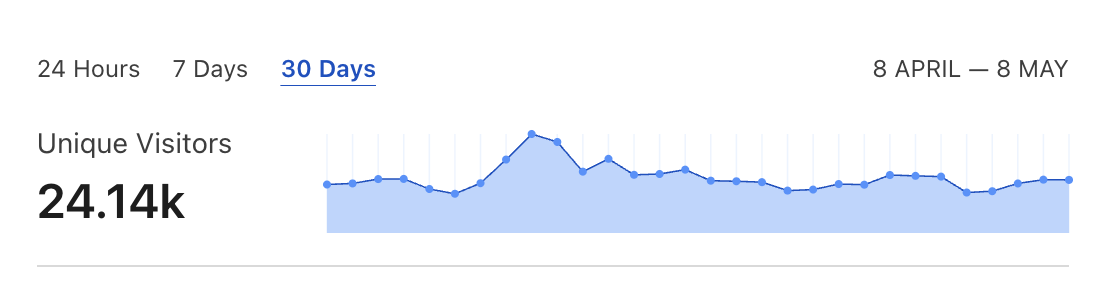

The challenge of optimizing PostgreSQL is that most issues start emerging only with a large enough dataset and traffic. While creating a new feature with a small development database, you’re not likely to discover potential bottlenecks. That’s why it’s essential to monitor the production performance and regularly dive into the EXPLAIN ANALYZE output to keep things running at an optimal speed.