Rails performance audits and tuning have been my main occupation and source of income for over four years now. In this blog post, I’ll share a few secrets of my trade. Read on if you want to learn how I approach optimizing an unknown codebase, what tools I use, and which fixes are usually most impactful. You can treat this post as a generalized roadmap for your DIY performance audit with multiple links to more in-depth resources.

Layers of performance optimization

My audits usually span a few weeks. That’s not a lot of time to get familiar with an unknown and often legacy codebase. I’ve established a framework for analyzing the different layers of a Rails application, which allows me to propose and implement viable fixes within that limited time.

Let’s discuss the areas I focus on. They are roughly ordered by the return on investment that working on them usually brings to the project’s performance. But every case is different, so please treat this order as an approximation.

1. Frontend and HTTP layer

Customers usually approach me with a request to optimize the backend, e.g., speed up a bottleneck API endpoint or tune SQL queries. During the initial research, I often discover that tweaking the HTTP layer would have a bigger impact on perceived performance than fine-tuning the backend.

I think developers often underestimate the impact of frontend performance optimization because it doesn’t directly affect infrastructure costs. Rendering an unoptimized website is offloaded to the visitor’s desktop or mobile device, so its slowness cannot be measured with backend monitoring tools. Loading a website typically involves dozens of requests, and only a few of them hit the backend or API. The majority are for static assets such as JavaScript libraries and images.

Your customers probably won’t notice if you shave 150ms off your bottleneck SQL query. Optimizing the backend is more about the project’s scalability than your customers’ browsing experience. But adding correct HTTP caching headers or configuring HTTP/2 to enable multiplexing could bring your page’s Time to Interactive down from 5s to 2s. And your clients will love it!
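To make “correct HTTP caching headers” a bit more concrete, here is a minimal sketch of a Rack-style middleware in plain Ruby, so it runs without any gems. It assumes your static assets are fingerprinted and served under a hypothetical /assets/ prefix, which makes it safe to cache them for a year and mark them immutable. The class name FarFutureAssetCaching is made up for illustration; in a real Rails app you would more likely set config.public_file_server.headers or let your CDN handle it.

```ruby
# Hypothetical middleware: adds long-lived cache headers to fingerprinted assets.
# A Rack app is any object responding to call(env) -> [status, headers, body].
class FarFutureAssetCaching
  ONE_YEAR = 365 * 24 * 60 * 60 # seconds

  def initialize(app, prefix: "/assets/")
    @app = app
    @prefix = prefix
  end

  def call(env)
    status, headers, body = @app.call(env)
    if status == 200 && env["PATH_INFO"].to_s.start_with?(@prefix)
      # A fingerprinted file never changes under the same URL, so browsers
      # and CDNs can reuse it without revalidating.
      headers = headers.merge("cache-control" => "public, max-age=#{ONE_YEAR}, immutable")
    end
    [status, headers, body]
  end
end

# Usage with a stub downstream app (the asset path is hypothetical):
inner = ->(_env) { [200, { "content-type" => "text/css" }, ["body{}"]] }
app = FarFutureAssetCaching.new(inner)
_status, headers, _body = app.call("PATH_INFO" => "/assets/application-3f2a.css")
puts headers["cache-control"] # => public, max-age=31536000, immutable
```

API responses and HTML pages are left untouched here, because they usually need short-lived or revalidated caching rather than a one-year lifetime.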

Protip: a quick & easy way to get most of the HTTP layer config in order is to proxy your app’s traffic via Cloudflare. You can check out my other blog post for more detailed tips on frontend performance optimization.

2. Redundant SQL queries

In my experience, N+1 queries are the top performance killer in Rails applications. It’s not uncommon to see a single request generate hundreds of SQL queries because of missing eager loading.

ActiveRecord makes it just too easy to sneak an N+1 bug into production. It often goes unnoticed in development because you’re usually working with small datasets.

I’ve seen drastic response time improvements from adding a single includes method call. If you’re starting your performance audit, eliminating N+1 queries from your busiest endpoints is probably the most effective first step.
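The difference between an N+1 loop and eager loading comes down to query count. The plain-Ruby sketch below doesn’t use ActiveRecord at all: a hypothetical FakeDB class stands in for the database and counts every “query”. Loading each post’s comments inside the loop mimics calling post.comments per post, while the batched variant mimics what Post.includes(:comments) typically does, i.e., one extra query with WHERE post_id IN (...).

```ruby
# Hypothetical in-memory stand-in for a database that counts queries.
class FakeDB
  attr_reader :query_count

  def initialize(posts, comments)
    @posts = posts       # array of { id: }
    @comments = comments # array of { id:, post_id: }
    @query_count = 0
  end

  # Like SELECT * FROM posts
  def all_posts
    @query_count += 1
    @posts
  end

  # Like SELECT * FROM comments WHERE post_id = ?
  def comments_for(post_id)
    @query_count += 1
    @comments.select { |c| c[:post_id] == post_id }
  end

  # Like SELECT * FROM comments WHERE post_id IN (...)
  def comments_for_many(post_ids)
    @query_count += 1
    @comments.select { |c| post_ids.include?(c[:post_id]) }
  end
end

posts = (1..100).map { |i| { id: i } }
comments = (1..300).map { |i| { id: i, post_id: (i % 100) + 1 } }

# N+1: one query for posts, then one query per post.
n_plus_one = FakeDB.new(posts, comments)
n_plus_one.all_posts.each { |post| n_plus_one.comments_for(post[:id]) }

# Eager loading: one query for posts, one batched query for all their comments.
eager = FakeDB.new(posts, comments)
loaded = eager.all_posts
by_post = eager.comments_for_many(loaded.map { |p| p[:id] }).group_by { |c| c[:post_id] }
loaded.each { |post| by_post.fetch(post[:id], []) } # served from memory, no extra queries

puts n_plus_one.query_count # => 101
puts eager.query_count      # => 2
```

With 100 posts the N+1 version issues 101 queries, while the eager version stays at 2 no matter how many posts there are.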

You can read more about fixing N+1 issues in Rails apps here.

3. Unoptimized SQL queries

After tackling the N+1 queries, the next step of an audit is usually a more detailed PostgreSQL metadata analysis. I use my rails-pg-extras library to deep dive into what’s going on at the database layer.

pg-extras offers a set of helper queries for analyzing the database. I describe the detailed step-by-step process of using the pg-extras library to optimize PostgreSQL performance here. A good starting point is to use the outliers and long_running_queries methods to list slow queries that consume a considerable share of database resources.
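As far as I understand it, outliers ranks queries by the aggregate execution time recorded by the pg_stat_statements extension rather than by single-call latency. That’s why a cheap query called millions of times can top the list. The plain-Ruby sketch below reproduces only that ranking idea on hypothetical rows shaped like pg_stat_statements output (query, calls, total_exec_time in milliseconds). It doesn’t talk to a database, and rank_outliers is a made-up helper, not part of rails-pg-extras.

```ruby
# Hypothetical rows shaped like pg_stat_statements (total_exec_time in ms).
Stat = Struct.new(:query, :calls, :total_exec_time)

# Rank queries by their share of total execution time across all queries.
def rank_outliers(stats, limit: 10)
  grand_total = stats.sum(&:total_exec_time).to_f
  stats
    .sort_by { |s| -s.total_exec_time }
    .first(limit)
    .map do |s|
      {
        query: s.query,
        calls: s.calls,
        share_pct: (100 * s.total_exec_time / grand_total).round(1),
        avg_ms: (s.total_exec_time.to_f / s.calls).round(2)
      }
    end
end

stats = [
  Stat.new("SELECT * FROM users WHERE id = $1", 2_000_000, 40_000.0),
  Stat.new("SELECT * FROM reports ORDER BY created_at", 50, 25_000.0),
  Stat.new("UPDATE sessions SET updated_at = $1 WHERE id = $2", 10_000, 5_000.0)
]

rank_outliers(stats).each { |row| p row }
```

In this made-up data, the primary-key lookup averages only 0.02 ms per call but still accounts for about 57% of total execution time, so it deserves attention before the obviously slow report query.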

Bottleneck SQL queries that are challenging to optimize could be perfect candidates for caching. You can read more about this technique in my other blog post.
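Caching a bottleneck query usually follows a read-through pattern: return the stored value if it’s still fresh, otherwise run the query and store its result. In Rails, that’s typically Rails.cache.fetch(key, expires_in: ...) { ... }. The sketch below re-creates the same pattern in plain Ruby with a hypothetical TtlCache class and an injectable clock, so expiry is easy to demonstrate. It’s an illustration of the idea, not a replacement for a real cache store.

```ruby
# Hypothetical read-through cache with per-entry expiry, mimicking the
# shape of Rails.cache.fetch(key, expires_in:) { ... }.
class TtlCache
  Entry = Struct.new(:value, :expires_at)

  def initialize(clock: -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) })
    @clock = clock
    @store = {}
  end

  def fetch(key, expires_in:)
    entry = @store[key]
    return entry.value if entry && entry.expires_at > @clock.call

    value = yield # cache miss or stale entry: run the expensive computation
    @store[key] = Entry.new(value, @clock.call + expires_in)
    value
  end
end

now = 0.0
cache = TtlCache.new(clock: -> { now }) # fake clock, controlled below
calls = 0
slow_report = -> { calls += 1; "report ##{calls}" } # stands in for a slow SQL query

cache.fetch("monthly_report", expires_in: 60) { slow_report.call } # miss: runs the query
cache.fetch("monthly_report", expires_in: 60) { slow_report.call } # hit: served from memory
now = 61.0
cache.fetch("monthly_report", expires_in: 60) { slow_report.call } # expired: runs again
puts calls # => 2
```

The expiry time is the main design decision: it bounds how stale the cached result can get, so it should match how fresh the underlying data needs to be.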

If you’re on Rails 7.0 or later, you can leverage the new load_async ActiveRecord API to run bottleneck queries in parallel. But it’s not a silver bullet and introduces a potential stability risk. Check out my article for a detailed guide to load_async.
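Conceptually, load_async starts a query in the background right away and only blocks when you first read the records. The plain-Ruby sketch below imitates that idea with a hypothetical Deferred wrapper around Thread#value, using sleep as a stand-in for two slow, independent queries. In Rails, the call looks like Post.where(...).load_async, and it also depends on config.active_record.async_query_executor being set, which this sketch doesn’t model.

```ruby
# Hypothetical stand-in for a relation loaded with load_async:
# the work starts immediately on another thread, and #value blocks
# only if the result isn't ready yet.
class Deferred
  def initialize(&work)
    @thread = Thread.new(&work)
  end

  def value
    @thread.value # joins the thread and returns the block's result
  end
end

def slow_query(name, seconds)
  sleep(seconds) # lets other threads run, like waiting on a DB socket
  "#{name} rows"
end

started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
posts = Deferred.new { slow_query("posts", 0.3) }       # kicked off in the background
comments = Deferred.new { slow_query("comments", 0.3) } # runs concurrently
results = [posts.value, comments.value]                 # wait for both
elapsed = Process.clock_gettime(Process::CLOCK_MONOTONIC) - started

puts results.inspect # => ["posts rows", "comments rows"]
puts elapsed.round(1) # roughly 0.3 rather than 0.6, since both waits overlap
```

One reason for the stability risk is visible even here: every background query needs its own worker and, in a real app, its own database connection, so the connection pool has to be sized for it.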

4. Database layer configuration

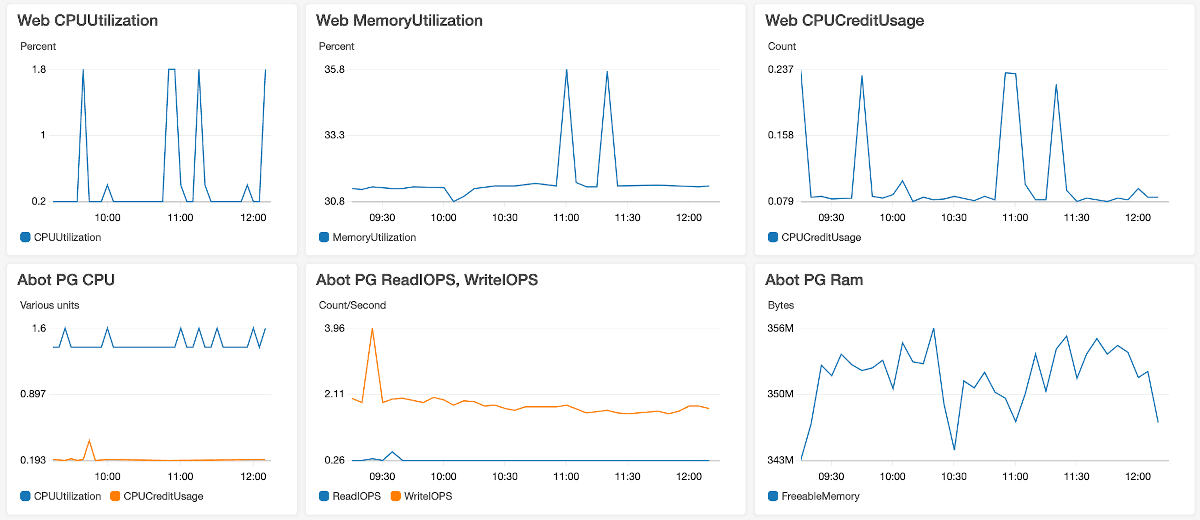

This part of an audit is also related to the database, but rather than focusing on individual queries, we analyze the general configuration and resource usage. The first step is to determine whether your current database server is overutilized. It’s ideal if the project uses AWS RDS as its database provider, because it offers powerful insights into usage metrics.

If the project is using a Heroku PostgreSQL add-on, I usually recommend migrating the database to AWS RDS. I’ve written a detailed blog post about why it’s often the best solution.

Heroku offers very limited insight into what’s going on inside the database engine, but the rails-pg-extras cache_hit method is a decent way to check whether your database server is adequately sized.
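The cache hit ratio is the share of block reads served from PostgreSQL’s shared buffers instead of disk: hits / (hits + reads), based on counters such as heap_blks_hit and heap_blks_read in pg_statio_user_tables. A commonly cited rule of thumb (Heroku’s docs among others) is to aim for about 99%. Consistently lower values suggest the working set doesn’t fit in memory and a larger instance may help. Here is a plain-Ruby sketch of that check; the helper names and counter values are hypothetical.

```ruby
# Cache hit ratio = hits / (hits + reads), as computed from PostgreSQL's
# heap_blks_hit and heap_blks_read counters (values below are hypothetical).
def cache_hit_ratio(blks_hit, blks_read)
  total = blks_hit + blks_read
  return nil if total.zero? # no reads recorded yet
  blks_hit.to_f / total
end

# Rule-of-thumb threshold; adjust it for your own workload.
def cache_undersized?(ratio, threshold: 0.99)
  !ratio.nil? && ratio < threshold
end

ratio = cache_hit_ratio(9_500_000, 500_000)
puts ratio.round(3)           # => 0.95
puts cache_undersized?(ratio) # => true
```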

For projects experiencing higher loads at the database layer, tweaking the PostgreSQL global settings can bring measurable benefits. PGTune and POSTGRESQLCO.NF are your friends here.
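To get a feel for what these tools calculate, two commonly quoted starting points are shared_buffers at about 25% of RAM (the PostgreSQL docs suggest this as a reasonable starting value on a dedicated server) and effective_cache_size at about 75% of RAM. The sketch below encodes only those two rules of thumb with a hypothetical memory_settings helper. It is not PGTune’s full algorithm, and on RDS these values are managed through parameter groups rather than postgresql.conf.

```ruby
# Rule-of-thumb starting points for a dedicated PostgreSQL server.
# NOT PGTune's full formula set; validate against PGTune and your workload.
def memory_settings(total_ram_mb)
  {
    "shared_buffers" => "#{total_ram_mb / 4}MB",          # ~25% of RAM
    "effective_cache_size" => "#{total_ram_mb * 3 / 4}MB" # ~75% of RAM
  }
end

memory_settings(16 * 1024).each { |name, value| puts "#{name} = #{value}" }
# shared_buffers = 4096MB
# effective_cache_size = 12288MB
```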

I dive into more details on the setup of the PostgreSQL database on RDS in my eBook.

5. Server configuration

I’ve seen projects underutilizing their resources and, in turn, burning money because of misconfigured servers.

Most Rails apps are IO-bound because of SQL queries and internal HTTP calls. That’s a perfect case for leveraging concurrency in Ruby, which is why I always recommend the Puma server for its multithreading capabilities. You can check out this blog post for more info about concurrency in Ruby and how it relates to blocking IO.
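Why threads pay off for IO-bound work: MRI releases the Global VM Lock while a thread waits on blocking IO, so other threads can make progress in the meantime. The plain-Ruby sketch below runs a tiny fixed-size thread pool, loosely modeled on how a multithreaded server like Puma hands requests to a limited set of threads. handle_request and serve are hypothetical helpers, and the 0.1 s sleep is an arbitrary stand-in for a SQL query or HTTP call.

```ruby
# Simulated request handler: blocking IO that lets other threads run.
def handle_request(id)
  sleep(0.1) # stands in for a SQL query or an internal HTTP call
  "response #{id}"
end

# Process all requests with a fixed number of worker threads.
def serve(request_ids, pool_size:)
  queue = Queue.new
  request_ids.each { |id| queue << id }
  pool_size.times { queue << :stop } # one stop signal per worker

  results = Queue.new
  workers = Array.new(pool_size) do
    Thread.new do
      while (id = queue.pop) != :stop
        results << handle_request(id)
      end
    end
  end
  workers.each(&:join)
  Array.new(results.size) { results.pop }
end

clock = -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) }

t0 = clock.call
single = serve((1..8).to_a, pool_size: 1)
t1 = clock.call
pooled = serve((1..8).to_a, pool_size: 4)
t2 = clock.call

puts format("1 thread: %.2fs, 4 threads: %.2fs", t1 - t0, t2 - t1)
# Expect roughly 0.8s vs 0.2s: while one thread waits on IO, others proceed.
```

CPU-bound work would not get this speedup in MRI, which is why the benefit depends on how IO-heavy your requests are.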

For some projects, switching to Puma is not straightforward because thread safety must be taken into account. But writing non-thread-safe code is a ticking time bomb, so fixing it should be considered anyway. You can check out this article for an intro to thread-safety in Rails.
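A typical thread-safety bug is shared mutable state, such as a class-level counter or memoized hash that every request thread updates. An operation like @counts[path] += 1 is a read-modify-write, so two threads can interleave and lose an update. Here is a minimal sketch of guarding such state with a Mutex; the RequestStats class is hypothetical.

```ruby
# Hypothetical process-wide stats object shared by all request threads.
class RequestStats
  def initialize
    @mutex = Mutex.new
    @counts = Hash.new(0)
  end

  # Without the mutex, `@counts[path] += 1` (read, add, write) could
  # interleave across threads and silently drop increments.
  def record(path)
    @mutex.synchronize { @counts[path] += 1 }
  end

  def count(path)
    @mutex.synchronize { @counts[path] }
  end
end

stats = RequestStats.new
threads = Array.new(10) do
  Thread.new { 1_000.times { stats.record("/posts") } }
end
threads.each(&:join)
puts stats.count("/posts") # => 10000
```

Often the better fix is to avoid sharing state across requests at all, but when sharing is intentional, a Mutex makes the update atomic.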

You can check out the following Heroku docs for general tips on configuring Puma’s worker and thread counts.
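For reference, a config/puma.rb along the lines of the Rails-generated default and Heroku’s recommendations looks roughly like this. The environment variable names (RAILS_MAX_THREADS, RAILS_MIN_THREADS, WEB_CONCURRENCY, PORT) follow common conventions, and the fallback values are only starting points, not tuned recommendations.

```ruby
# config/puma.rb
# workers = forked processes (use multiple CPU cores);
# threads = concurrency within each process (overlap blocking IO).
max_threads_count = ENV.fetch("RAILS_MAX_THREADS") { 5 }
min_threads_count = ENV.fetch("RAILS_MIN_THREADS") { max_threads_count }
threads min_threads_count, max_threads_count

workers ENV.fetch("WEB_CONCURRENCY") { 2 }

# Load the app before forking workers so they can share memory via copy-on-write.
preload_app!

port ENV.fetch("PORT") { 3000 }
environment ENV.fetch("RAILS_ENV") { "development" }
```

Keep the database connection pool in config/database.yml at least as large as the maximum thread count, otherwise threads end up waiting for connections.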

Bonus tip: if you’re using AWS EC2, make sure to always provision the newest-generation instance types, e.g., m5.large instead of m4.large. They typically offer better performance at a lower price. You can use this website to calculate your potential savings.

Summary

Every performance audit that I’ve conducted had its unique challenges. But the tips I’ve described should apply to most Ruby on Rails projects. I highly encourage you to take a closer look at your app’s performance. A small investment of development time can often significantly improve your customers’ UX and your project’s scalability, and reduce your infrastructure costs.